
Table of Contents

New feature: TALKBACKS! (This does not include the columns--"The Mailbag", "News Bytes", "The Answer Guy", "More 2-Cent Tips" and the Back Page--because these consist of many unrelated topics in one article. We are exploring what kind of discussion forum would be most appropriate for them.)



TWDT 1 (gzipped text file) and TWDT 2 (HTML file) are files containing the entire issue: one in text format, one in HTML. They are provided strictly as a way to save the contents as one file for later printing in the format of your choice; there is no guarantee of working links in the HTML version.


http://www.linuxgazette.com/

This page maintained by the Editor of Linux Gazette, Copyright © 1996-2000 Specialized Systems Consultants, Inc.


Write the Gazette at


Contents:

Answers to these questions should be sent directly to the e-mail address of the inquirer with or without a copy to [email protected]. Answers that are copied to LG will be printed in the next issue in the Tips column.

Before asking a question, please check the Linux Gazette FAQ to see if it has been answered there.

Tue, 01 Feb 2000 12:16:28 -0200

From: Clovis Sena

Subject: help in printing numbered pages!

Hi,

I usually print a lot of documentation. One thing I would like is for my print jobs to have numbered pages, so that at the bottom of each page we could see "page 1/xx", etc. I have looked for a while for information on how to set this up, but could not find any. printtool just doesn't do it. Maybe I should create a filter, but what commands must I use to make this happen?

Another thing that would be nice is if we could also print the location of the document, or the URL if it is a web page, like /usr/doc/html/mydoc.html or http://www.linuxjournal.com/article/example.html. Could this be done?

Any help will be welcome. Thanks for reading this.
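For plain-text files, a filter along these lines is possible. Standard tools such as pr already print a header with the file name and page number; the following is only a minimal Python sketch of a footer-style filter, assuming plain-text input and a page length of 66 lines (PAGE_LINES and the script name pagenum.py are assumptions to adjust for your printer). It pads each page so that a footer with the document's path or URL and "page N/M" lands at the bottom.

```python
import sys

# Assumption: 66 lines per page (11-inch paper at 6 lines per inch);
# change PAGE_LINES to suit your printer and paper size.
PAGE_LINES = 66
FOOTER_LINES = 2  # a blank separator line plus the footer line itself


def paginate(lines, source="", page_lines=PAGE_LINES):
    """Split text lines into pages and end each page with a footer.

    The footer reads "page N/M", preceded by `source` (a path or URL)
    when one is given. Short pages are padded with blank lines so the
    footer always lands on the last line of the page.
    """
    body = page_lines - FOOTER_LINES
    pages = [lines[i:i + body] for i in range(0, len(lines), body)] or [[]]
    total = len(pages)
    out = []
    for number, page in enumerate(pages, start=1):
        out.extend(page)
        out.extend([""] * (body - len(page)))  # pad a short final page
        out.append("")
        footer = f"page {number}/{total}"
        out.append(f"{source}  {footer}" if source else footer)
    return out


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python pagenum.py FILE [LABEL] | lpr
    path = sys.argv[1]
    label = sys.argv[2] if len(sys.argv) > 2 else path
    with open(path) as f:
        text = f.read().splitlines()
    sys.stdout.write("\n".join(paginate(text, label)) + "\n")
```

For example, python pagenum.py notes.txt | lpr would put "notes.txt  page 1/xx" at the foot of each page, and a second argument replaces the path with a URL or any other label. Lines too long for the paper will wrap and throw the line count off, so this sketch suits text that already fits the page width.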

Tue, 1 Feb 2000 16:37:07 +0200

From: Knobel Alex

Subject: Samba problem

Hi, and thanks for your help :0) My English is bad, so forgive me. I have a Linux box with kernel 2.2.12-20 (Red Hat Linux), and I installed Samba from the same CD. I have read the Samba HOWTO and tried what it says, but I don't understand how Windows should see the Linux box. From Linux I can see the network and see the shares, but NT doesn't see the Linux machine. In smb.conf I wrote the following:

[global]
workegroup = staff   ("I have a group called staff")
netbios name = samba
......

Tue, 01 Feb 2000 11:46:50 -0500

From: Stan Wilburn

Subject: Modem problem with 5.2

I have recently set up Linux 5.2 on a PC at home. The setup went great with only one problem: I cannot get the modem to dial so that I can access the Internet. Looking through various websites on Linux, I found that if your modem is on COM3, some additional setup is required. Can you help me with this? I have mapped the COM3 port to /dev/cua2, and from the manual I thought that was all I had to do. I also ran statserial on this port and I get the correct information there too. What could be wrong? I can hear the modem clicking like it is trying to dial, but it never dials the number. Thanks.

Tue, 01 Feb 2000 15:48:28 MST

From: Sipe

Subject: Linux on IBM Aptiva S6H

I have an IBM Aptiva S6H (214) with an IBM MM75 Multimedia monitor. I cannot seem to get my X server (XFree86 -- current version #.#.5) working with Mandrake 6.1. I have an onboard ATI Mach64 3D Rage Pro card as well as a Voodoo2, which supports rather high resolutions. I've set custom frequencies (horiz: 69khz vert: 120khz) and picked my card from the card database. Still, when I run X Windows, it says the monitor resolution is not supported and no monitor was found, specifically "fatal server error" "no monitor". If somebody could help me out I would REALLY appreciate it! If you need more information about my computer or versions, please email me ([email protected]). I am desperate for help; I've tried IRC (DALnet and EFnet #linux), friends, and checked many forums.

Wed, 2 Feb 2000 01:46:20 -0500

From: Anthony H. Downey, Jr.

Subject: IP Masquerade Connection Problems

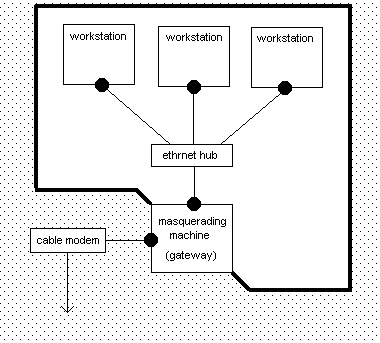

I've been running Linux for a few years now, using mostly RedHat distributions. I'm currently running RedHat 6.1. Recently, I decided to connect my small office (4 workstation nodes and 2 servers) to the internet via IP Masquerading rather than having each workstation dial directly (as was done previously).

IP Masquerading works fine, save for one small problem that's turning into a real annoyance. I'm using PAP authentication with the ISP. Whenever I initiate a connection, the modem dials, waits until whatever timeout is specified, then hangs up without ever achieving a complete connection. The two modems handshake, but the login to the ISP is never successfully completed. Since I've specified for it to reconnect on a line drop, it immediately redials and then successfully connects and logs in on the second try.

This is 99.9% consistent, meaning that it nearly always requires two dial attempts to get a successful connection. On rare occasions, it will connect on the first try, but this is apparently only after I've just downed the interface within the past few minutes. I don't have it configured for demand dial at this point -- I normally bring the link up and down manually via ifup ppp0 and ifdown ppp0. Eventually this will be set to demand dial once I'm confident of the configuration.

I suspected the ISP at first, but then I tried a Mandrake 6.5 distribution on the same equipment (with essentially the same config files), and it connects the first time every time. I'd much rather use RedHat 6.1, however, for various reasons. I've even tried a few different modems, both 56K and K56Flex, and the results are the same.

Does anyone have any ideas as to why this would be happening and how to correct it?

TIA.

Wed, 02 Feb 2000 11:15:32 -0500

From: John Sanabria

Subject: Tips & Tricks

Hello:

I currently work with the .edu.co domain at Universidad de los Andes, Bogota, Colombia. I want to know how to make it so that when people enter the URL uniandes.edu.co, the browser goes to www.uniandes.edu.co. For example, I can type the URL linuxtoday.com, and my browser shows me www.linuxtoday.com.

Where do I modify my DNS entry in order to obtain this behavior?

Thanks in advance.

PS: Sorry for my poor English.

Wed, 2 Feb 2000 17:41:31 -0500

From: Rob C

Subject:

Hi,

I was wondering what you have to do to get a Diamond SupraExpress 56i modem recognized and configured under Linux. I also need to get a PPP connection set up on my Linux system to access the Internet. I am positive that it isn't a winmodem.

I am a really new newbie at this, so I'll need lotsa explanating here.

Thanks.

Thu, 03 Feb 2000 21:26:00 +0100

From: Ivo Naninck

Subject: Question to be published.

Hello LG,

Can you please put this in your next issue?

Does anyone have a hint on how to get a Linux DHCP client to get a lease from a DHCP server over a Token Ring network? I have already tried a LOT, believe me. None of dhcpcd, dhclient or pump works. There is also VERY little response on this matter in several related newsgroups.

--

Best regards, Ivo Naninck.

Neckties strangle clear thinking.

-- Lin Yutang


Thu, 03 Feb 2000 23:08:31 +0100

From: JA

Subject: WindowsNT vs. Linux

I've made a comparison running the same Fortran program compiled with GNU g77 compiler with identical options.

The result is unexpected: the program ran in 20 sec under WinNT 4.0, and in 27 sec under Linux/Slackware 7.0.

Why?

Jacek Arkuszewski, Switzerland

Thu, 03 Feb 2000 22:34:56 -0500

From: BLOODICE

Subject: Linux & win98 internet connection sharing

I'm just wondering: is it possible to connect Linux to the Internet over a network, through a Win98 machine using Internet Connection Sharing?

If there is a way, can you tell me? If I can't do it that way, is there another way I can do it?

Also... do you happen to know of any cable modem software (for Linux) that will run RoadRunner modems?

One more thing... are there any tutorials on my first question that I should look at?

Mon, 26 Apr 1999 14:23:10 +0530

From: Sreelal T S

Subject:

My OPL3-SAx 32-bit sound card is not supported by Red Hat Linux 6.1. I tried configuring it using sndconfig. Please send your responses. My address is [email protected]

Fri, 4 Feb 2000 17:43:38 -0600

From: Jeffrey T. Ownby

Subject: 5250 terminal for AS400 connection

I am adding a Linux box to a network consisting of several Win9X and NT machines that use either IBM Client Access or Rumba to connect to our AS400. Is there a program similar to either of these that can provide terminal emulation on Linux? Any info appreciated!

Later,

Jeffro

Wed, 09 Feb 2000 09:18:14 +0100

From: Vipie - Your Leader

Subject: Multiple video cards

Hello,

I have a question: do you have any idea where I could find information about running multiple video cards and monitors under Linux, e.g., two SVGA cards, or an SVGA and a VGA card? And how should one configure these?

Wed, 9 Feb 2000 10:37:33 +0200

From: Mahdy

Subject: DOS boot disk that can log on to Linux

I know my question is silly, but I didn't find a solution on the Web. I'm trying to make a DOS boot disk that can see my Linux box. On my Linux box I have installed Samba v2.0.5. From Win 95/98/NT I can see the Linux machine, but from DOS I can't. I need this to restore Ghost files (disk images) to the workstations. Until now I have done this through the NT server: on the client machine I run NET.exe to make the connection. Is there an application for DOS that I can use, and that fits on one 1.44 MB floppy disk?

Thanks

Hijaze Mahdy

Wed, 09 Feb 2000 12:42:27 +0000

From: thierry.lamant

Subject: Configuring Xfree for i810 chipset

I have successfully introduced Linux and XFree (now 3.3.5) in my company for the development of aircraft simulators on PCs. I would now like to use it on my home computer, a Compaq Presario 5456, which includes an i810 chipset. I can see that XFree 3.3.6 supports the i810 motherboard, but I can't get better than 640x480 when I try to install it (Win98 runs fine at 1024x768 at least). SuperProbe cannot detect the hardware.

Is there something special to do? Shall I wait for XFree 4?

Thanks in advance for any help.

Best Regards (and a thousand thanks for the useful Gazette)

Tue, 8 Feb 2000 23:05:15 -0200

From: pedroanisio

Subject: Cleaning lost+found?

I have been having problems with my filesystem lately. I have a lot of files in my lost+found, and I am having trouble cleaning up those corrupted files.

b---rwS-wt 1 30291 357 88, 118 Dec 8 2023 #586
b--sr-S--t 1 27968 27968 115, 109 Apr 29 2032 #592
br-xrwS-wt 1 14963 25715 108, 97 Apr 12 2031 #683
br-xrw---- 1 29555 21622 115, 115 May 10 2031 #713
br-S--S--x 1 26483 14641 116, 97 Apr 12 1998 #732
br-xr-xrw- 1 10604 24864 101, 114 Feb 13 1996 #741564
c---r----- 1 8224 25632 32, 32 Jan 29 2029 #741565
br-sr-xrwx 1 29806 24864 116, 32 Oct 15 2021 #741567

I tried rm -rf, but it didn't work; it resulted in

rm: cannot unlink `file': Operation not permitted

What can I do? Thank you,

[When people have gotten weird permissions like that, it's often the sign of serious disk corruption. I would definitely back up any data you can right now just in case the drive fails in the near future. Is the drive otherwise working OK? I would be inclined to buy a new drive and install a fresh copy of Linux on it, and then later decide whether to trust this drive enough to continue using it. Hard drives and power supplies are the most failure-prone components of any PC. Fortunately, nowadays hard drives are cheap to replace.

You can run "file" on the files to see what types they are, and "less" to see if they have any contents worth saving. -Ed.]
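Before deleting anything, it may help to narrow down which entries deserve a look with file and less. As a minimal sketch (not a recovery tool), the Python below lists each entry of a lost+found directory by type, so that block and character device nodes like the ones in the listing above, which are most likely garbage inodes, can be told apart from regular files and directories that might still hold data. It assumes it is run by a user allowed to read that directory, usually root.

```python
import os
import stat

# (test function, label) pairs from the standard stat module.
KINDS = [
    (stat.S_ISREG, "regular file"),
    (stat.S_ISDIR, "directory"),
    (stat.S_ISLNK, "symlink"),
    (stat.S_ISBLK, "block device"),
    (stat.S_ISCHR, "character device"),
    (stat.S_ISFIFO, "fifo"),
    (stat.S_ISSOCK, "socket"),
]


def classify(directory):
    """Return {name: kind} for each entry, without following symlinks."""
    result = {}
    for name in sorted(os.listdir(directory)):
        mode = os.lstat(os.path.join(directory, name)).st_mode
        result[name] = next(
            (label for test, label in KINDS if test(mode)), "unknown")
    return result


def worth_inspecting(directory):
    """Names of regular files and directories; device nodes are skipped."""
    return [name for name, kind in classify(directory).items()
            if kind in ("regular file", "directory")]
```

For example, worth_inspecting("/lost+found") gives the names to run file and less on; each filesystem has its own lost+found at its mount point, so the path is an assumption to adjust.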

Thu, 10 Feb 2000 15:07:36 -0800

From: Chris Dumont

Subject: linuxconf

I've been trying to use linuxconf (ver 1.14 on a RH 6.0 install) and have now screwed it up on my computers at work and at home in the same way. When I'm ready to leave linuxconf it wants to "sync" the configuration with the system. Every time I run linuxconf now it says that it must execute "/etc/rc.d/rc5.d/S85gpm start". And then, even after it does so, it still says it's not in sync and it wants to do it again. I guess that it thinks it must start the mouse even though it's already running.

How do I fix this?

A possibly significant piece of information is that in both cases when I originally installed Red Hat I chose the wrong mouse driver and had to play around to find the right one.

Fri, 11 Feb 2000 22:43:26 +0100

From: Daniel Lüscher

Subject: Linux-Mandrake 7.0

Hello! What about Linux-Mandrake 7.0? It would be worth an article or even an interview with the developers.

Greetz Pat

Sat, 12 Feb 2000 14:14:40 +1000

From: Tony Smith

Subject: Help wanted etc.

Hi

This little problem has been sending me nuts for two days now. I sure hope someone in the LG community can help.

I wonder if someone would mind helping me with a mystery that is causing me to pull my hair out......

I have been using a Linux server based on RedHat 5.2 for some time to connect to the Internet and provide masquerade for a bunch of Windows boxes on a private network behind it.

Today I decided to redo the server to incorporate a few more sophisticated things like DNS, DHCP and HTTP serving for a prototype intranet.

All went very well with one small hiccup. There seems to be an undocumented change in the behavior of ppp between RedHat 6.1 and 5.2.

With 5.2, invoking "ifup ppp1" results in the server making one, and only one, attempt to connect to the Internet.

This is exactly what I want.

With 6.1, if the dial-out attempt fails, it simply loops and tries again and again until it either connects or is stopped.

I don't want this.

I have compared all the relevant (I hope) scripts between 5.2 and 6.1 but I cannot find a difference that would account for the changed behavior.

I have the awful feeling I've missed something important (it's been a long day), could someone please tell me what it is?

Sun, 13 Feb 2000 04:59:29 +0200

From: FreeDoM

Subject: ZOLTRIX modem

Hi, my name is Ozgur. I use Red Hat Linux, but I can't use a modem: I have a 56K Zoltrix (cheap Rockwell chipset) winmodem, and this modem doesn't run under Linux. Could you help me? If you have a file for this problem, please send it to me!

Tnx for helping.

Sun, 13 Feb 2000 22:01:33 -0600

From: Kim Updike

Subject: Inexpensive, powerful db's for Linux?

To develop a distributed database application that runs on Linux, what inexpensive, powerful databases might work best?

E-mailing me at [email protected] with any advice would be much appreciated. -Kim

Sun, 13 Feb 2000 10:53:21 -0500

From: Art

Subject: Drivers

I have a question. Where can I download an updated driver for a "Diamond Monster 3D" Voodoo card? The present software I have is Version 1.08 and DOS 6. My OS is Win98 Second Edition; I have 64 MB RAM and a Celeron 300 with an Intel 740 video card. I thank you in advance for your reply. Art

Tue, 15 Feb 2000 08:14:24 -0500

From: William Aycock

Subject: Window Managers and Window App Development

[There's an lg-announce mailing list. See the Linux Gazette FAQ at http://www.linuxgazette.com/lg_faq.html

KDE and GNOME are graphical "environments" (that is, a set of custom applications, widgets and rules that all have a common "look and feel"). A window manager is just one aspect of this. In my opinion, KDE is currently "ahead". But I don't tell people, "Use KDE, it's #1." I use KDE at work, but only because it's the default, and I don't use 90% of its features. At home I use fvwm2.

Some people want to see Linux have a single standard desktop. However, I think most people are glad there's a choice, because different people want different things. Indeed, with some people running Linux for games (i.e., they need a 3D video card and fast drivers) and other people running it on 486s as servers, different people have very different software needs. KDE and GNOME tend to appeal to people who want a standard look and feel a la Windows and the Mac. But those who hack with the older programming tools are never going to give them up. As the geek joke goes, "Who needs a GUI when you've got Emacs?"

February's Linux Journal had an in-depth look at KDE and at GNOME. Both articles are on-line at http://www.linuxjournal.com/lj-issues/issue70 -Ed.]

Wed, 16 Feb 2000 20:45:06 +0100

From: Dennis Johansen

Subject: QuakeWorld server as a daemon

I want to set up my Red Hat Linux 6.1 machine as a QuakeWorld server on my LAN. It's a K6-200 with 64 MB RAM.

It works fine when I start it, but how do I run it as a daemon?

Please help.

Best Regards

Fri, 18 Feb 2000 08:47:02 +0200

From: Halil Cem Tonguc 998027

Subject: I NEED PIII PATCH.

Where can I find a patch for the PIII?

Fri, 18 Feb 2000 23:46:14 -0800

From: wesley

Subject: make virtuald

I am trying to compile virtuald using "make virtuald". Here is the error I get: "Makefile:14: *** missing separator. Stop."

I cut and pasted the code from http://www.linuxdoc.org/HOWTO/Virtual-Services-HOWTO-3.html (section 3.4, Source), then used FTP to put it on the server in order to compile it.

What am I missing?

Thanks for your time and help.

Wes
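A common cause of "missing separator" in GNU make is that every command line in a rule must begin with a TAB character, and copying text out of a web page usually turns those TABs into spaces. Running cat -A Makefile shows TABs as ^I, which makes this easy to check. Assuming that is the problem here (the HOWTO's Makefile is not reproduced in this letter), the rough Python sketch below puts the TABs back; its rule-matching regular expression is a heuristic, not a full Makefile parser, and the script name retab.py is hypothetical.

```python
import re
import sys

# Rough match for a rule line such as "virtuald: virtuald.c".
# Heuristic only: it can misfire on lines like "VAR := value".
RULE = re.compile(r"^[^\s#][^=]*:")


def retab(text):
    """Replace leading spaces with a TAB on lines that follow a rule line.

    A blank line is treated as the end of the rule's command block.
    """
    out = []
    in_recipe = False
    for line in text.splitlines():
        if RULE.match(line):
            in_recipe = True
        elif not line.strip():
            in_recipe = False
        elif in_recipe and line.startswith(" "):
            line = "\t" + line.lstrip(" ")
        out.append(line)
    return "\n".join(out) + "\n"


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python retab.py Makefile > Makefile.fixed
    with open(sys.argv[1]) as f:
        sys.stdout.write(retab(f.read()))
```

Check the result with cat -A before replacing the original Makefile. Editing line 14 by hand so that it starts with a real TAB achieves the same thing for a short file.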

Sat, 19 Feb 2000 23:53:46 +0000

From: Ari Hyvölä

Subject: pppd daemon dies

Hello, I am a beginner in the Linux world and would like to test Linux's ISDN capabilities. I have an ASUSCOM 128 modem connected to an RS-232 port. The ISP, IP and DNS are correctly set up, but kppp hangs up while logging onto the network with the error message "pppd daemon died unexpectedly", and the log says "cannot open logfile". The modem tests run through. I have Corel Linux 2.2.12, and the kernel should include the necessary ISDN and PPP options. The lock option has been turned off. I would appreciate any help; I could not find support on Corel's pages.

Sun, 20 Feb 2000 14:19:25 +0100

From: Santiago Cepas

Subject: Memory undetected

Hello there.

I've got some problems with my RAM: Linux only detects 64 MB when I have 128. My specs are: SuSE Linux 6.3, kernel 2.2.13, running on an AMD K6-2 350 box. The weirdest thing is that the whole 128 MB is in a single DIMM slot. The BIOS seems to detect 128 MB, and so does that other operating system which I don't want to name. Could anyone help me here?

Thanks

[Linux cannot auto-detect memory above 64MB. You have to tell it explicitly how much memory you have at the boot prompt, or put

append = "mem=128M"

in your /etc/lilo.conf file and re-run lilo (if you're using lilo; see the lilo documentation).

The problem is that the BIOS detects the extra memory but it doesn't tell Linux about it. It's a limitation in the PC BIOS design. I don't know how that other OS probes for memory.

If you ever reduce the amount of memory you have, remember to tell lilo first. Otherwise, the system will segfault at boot time when it tries to use memory that isn't there. You can always type "mem=64M" at the boot prompt to override what lilo thinks.

Information from Linus himself about this situation is in the BootPrompt HOWTO. -Ed.]
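Once the mem= parameter is in place and the machine has been rebooted, you can check what the kernel actually found. A minimal Python sketch, assuming /proc/meminfo has a MemTotal line and treating up to 8 MB of difference as memory the kernel keeps for itself; that allowance is an assumption, not a documented figure.

```python
def mem_total_kb(meminfo_text):
    """Return the MemTotal figure, in kB, from the text of /proc/meminfo."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            return int(line.split()[1])
    raise ValueError("no MemTotal line found")


def looks_truncated(meminfo_text, installed_mb, slack_mb=8):
    """True if the kernel reports much less RAM than is installed.

    MemTotal is always somewhat below the installed size because the
    kernel keeps some memory for itself; slack_mb (an assumed 8 MB)
    allows for that.
    """
    seen_mb = mem_total_kb(meminfo_text) / 1024
    return installed_mb - seen_mb > slack_mb
```

For example, looks_truncated(open("/proc/meminfo").read(), 128) should return True on a 128 MB machine where only 64 MB was detected, and False once the kernel sees all the memory.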

Mon, 31 Jan 2000 22:33:26 +0000

From: T.J. Rowe

Subject: glibc compilation problem

Hello everyone,

I'm making glibc 2.1.2 from the source tarball for use in building a system from the ground up (to get rid of all those statically linked programs I've started with). Well, when compiling either 2.1.2 or 2.1.1, I get the following error and the compilation stops:

common/db_appinit.c: In function `__db_appname':
common/db_appinit.c:479: fixed or forbidden register 0 (ax) was spilled for class AREG.
common/db_appinit.c:479: This may be due to a compiler bug or to impossible asm
common/db_appinit.c:479: statements or clauses.
common/db_appinit.c:479: This is the instruction:
(insn 902 901 903 (parallel[
        (set (reg:SI 2 %ecx)
            (unspec:SI[
                    (mem:BLK (reg:SI 5 %edi) 0)
                    (const_int 0 [0x0])
                    (const_int 1 [0x1])
                ] 0))
        (clobber (reg:SI 5 %edi))
    ] ) 424 {strlensi+1} (insn_list 901 (nil))
    (expr_list:REG_UNUSED (reg:SI 5 %edi)
        (nil)))
make[1]: *** [db_appinit.os] Error 1
make: *** [db2/others] Error 2

I have pgcc 2.95.2, which I believe meets the requirement for gcc. I'm clueless as to what else could be causing this. Any ideas?

BTW, I get some other undefined-function error when trying to compile glibc 2.0.7pre6, and I don't want to use the older glibc anyway.

Someone has to know what causes these kinds of errors, right? :)

Mon, 31 Jan 2000 22:40:13 +0000

From: T.J. Rowe

Subject: bash problem

I have an interesting problem with my bash prompt. The upper case K and T letters do not echo to the screen, and when I type them it beeps at me. Now, before jumping to conclusions, let me describe what I've done so far. This problem only occurs in bash and bash2, not in tcsh, zsh, csh, etc. I've recompiled and reinstalled bash several times from both the source tarballs and source rpms. I've replaced everything terminal-related that I can think of, such as gettydefs and libtermcap. I've looked at my keymaps repeatedly, and there doesn't seem to be a problem there--and again, it only happens in bash. It has to be something broken which I haven't replaced yet, but I'm not sure what is left. The K and T letters work fine in programs spawned in bash; it's just the prompt itself. This one has just about everyone I know confused. Hopefully someone out there can help or has heard of this odd problem. If anyone would like more details or has any information, please contact me.

Thanks, ~T.J. [email protected]

Wed, 23 Feb 2000 14:45:47 -0500

From: LabRDist

Subject: bootup disk?

I have a Toshiba 415CS and I formatted the hard drive. I don't have the Toshiba companion disk, nor did I make a boot disk before I formatted it. I need to find a way to get into the computer to activate the D: drive to install Windows. Every time I start it, I just get an error telling me to insert the boot disk. Any help would be appreciated.

Wed, 23 Feb 2000 21:29:29 -0800 (PST)

From: napolean

Subject: Question about Linux 5.2 Deluxe

I installed Linux and it said the installation was complete; then I got a prompt saying

[bonethugs@localhost bonethugs]$

After entering the login name and password, what do I do? And I have one more question: can you have Linux and Windows 98 on one hard drive without it being partitioned in half? Please e-mail me back. Thank you very much.

Thu, 24 Feb 2000 10:22:52 -0000

From: Ivica Glavocic

Subject: unable to install

Hi

I don't know if this is the proper way to contact you, but this is the address I found while searching the Internet for a solution to my problem:

The PC is a PII233 (LX) with 128 MB DIMM SDRAM, 6.4 GB WD HDD, Diamond Speedstar 50 AGP (4MB) VGA, Genius LAN card, Philips 40x CD, and PS/2 keyboard and mouse. On it I have installed NT Workstation 4.0 on the first partition (4G), formatted as NTFS. NT is working fine.

Now I want to put Red Hat Linux 6.1 on a second partition. First I checked the HCL for my PC: OK. On the Internet I found documents on how to boot Linux using the NT Loader, and everything seemed to be fine until I started the actual installation, booting from the CD. It loaded the X graphical display, gave me a choice of language and keyboard, and then Disk Druid started. I could see my NTFS partition as HPFS, but I knew this would happen since I had read about it before. So out of the unused ~2 GB I created a swap (129M) and a root (2G) partition, and after I pressed Next, the error "error opening security file ..." was displayed and termination and kill signals stopped further installation.

Now, I know that this (security...) is not a fatal error, but what is the problem then? I tried changing the partitioning (added a /boot partition, many combinations), but nothing seemed to work. There is no explanation of WHY this happens or in which module the installation fails. Then I got a new boot diskette from Red Hat and tried with it, but the same thing happened. So I added some boot parameters like MEM=128M and changed the keyboard type, but still nothing.

Maybe the installation log would help, but it scrolls by too fast on my screen and I can't read it. Is there a way to save it to a diskette, since I can't put it on the NTFS partition? Is this partition somehow the problem? It shouldn't be.

Please answer as soon as you can because it is really urgent for me to get this Linux going.

Thank you in advance

Ivica Glavocic

Thu, 24 Feb 2000 23:52:17 +0100

From: jacek czerwinski

Subject: users' information on my netware 4.11

Novell version 5.x and later has an LDAP gateway to its NDS. There are many LDAP clients (APIs from Mozilla, OpenLDAP, Netscape). Caldera has made NDS for Linux (a 3-user trial? I can't download it from my small city to test it) (www.caldera.com). Novell said that in March/April it will release an open-source API for NDS based on OpenLDAP (www.openldap.org). Maybe the LDAP gateway for NDS (in a trial version?) is free for download from Novell? Write after your success ;-)

Thu, 3 Feb 2000 08:43:48 -0800

From: Linux Gazette

Subject: FAQs

The following questions received this month are answered in the Linux Gazette FAQ:

Sat, 5 Feb 2000 20:07:59 -0800 (PST)

From: Ron Bates

Subject: Linux in Grocery Stores...

Hey, I just wanted to tell you guys, I purchased the premiere issue of Maximum Linux magazine with a full install of Linux-Mandrake 6.0 at Pak N' Save in So. San Francisco. To think the day has come when you can purchase a copy of Linux at your local chain grocery store. It's a beautiful world.

Sun, 6 Feb 2000 23:14:00 -0500

From: Pierre Abbat

Subject: Gazette crashes Konqueror

I am trying to read the Gazette with kfm 1.167 and several pages crash it, including the Mailbag and 2¢ Tips. Can you help me figure out what's wrong? It's happened before.

phma

Fri, 11 Feb 2000 15:05:04 +0100

From: Richard Vanek (ETM)

Subject: current issue

Hi

I would like to always download the current issue of Linux Gazette to my Pilot. Do you have a general link which always points to the contents of the current issue?

The download is done by the AvantGo server, which prepares HTML pages for downloading to the Palm Pilot. I would like to set up a link where I can always find the new issue. AvantGo will look at it, and when it changes I will get the new pages on my Pilot.

The reason for a link to the current issue is simple: if I point to your main page with a link depth of 1, I will get all the issues, which is too much for the Palm Pilot's memory.

[I added a symbolic link http://www.linuxgazette.com/current pointing to the current issue. I also made index.html in each issue point to the Table of Contents page.

Readers, please do not bookmark anything through the "current" link! The bookmark will go dead at the end of the month or point to the wrong article. Instead, use the actual issue directory (e.g., issue51). -Ed.]

Fri, 18 Feb 2000 08:35:58 -0800

From: Linux Gazette

Subject: Mirror site in India

Sankar wrote:

I am located in India, and keenly interested in hosting a mirror site which would actually be in India. Can you please let me know what would be approximate size required in the server for this?

The WWW directories are approximately 84 MB. Each issue will add 2-3 MB.

The size of the FTP files can be found at http://www.linuxgazette.com/ftpfiles.txt; the current size is 43 MB, plus 1-2 MB for each new issue.
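Putting those figures together, a mirror carrying both trees currently needs about 84 + 43 = 127 MB, growing by roughly 3-5 MB per issue. The small Python helper below only restates the estimates quoted above; the per-issue ranges are approximations, not measurements.

```python
# Estimates quoted above, in MB: (current size, (min, max) growth per issue).
WWW = (84, (2, 3))
FTP = (43, (1, 2))


def mirror_size_mb(new_issues, trees=(WWW, FTP)):
    """Return the (low, high) disk space estimate after `new_issues` more issues."""
    low = sum(base + new_issues * grow[0] for base, grow in trees)
    high = sum(base + new_issues * grow[1] for base, grow in trees)
    return low, high


print(mirror_size_mb(12))  # a year of monthly issues: (163, 187)
```

Passing trees=(WWW,) gives the estimate for a mirror of the web pages alone.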

18 Feb 2000 10:16:01 -0800

From: Michael Grier

Subject: WebGlimpse Blank Page Syndrome

It has been my experience that Netscape will not display a page if there is a missing closing </table> tag somewhere, especially if there are nested tables. Try appending an extra one at the end of the table.

[You are correct. However, fixing this requires delving into the internals of WebGlimpse and Glimpse, which we do not have the resources to do. It also appears that Netscape is throwing away the bottom of the page when a large result set is returned, and that's where the </TABLE> tag is going. -Ed.]
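For anyone who wants to test a saved results page for this problem, a minimal Python sketch using the standard html.parser module counts <table> tags that are never closed. It is an illustration only, not part of WebGlimpse or Glimpse, and it cannot show what Netscape does with a page it has truncated.

```python
from html.parser import HTMLParser


class TableBalance(HTMLParser):
    """Track how many <table> elements are still open while parsing HTML."""

    def __init__(self):
        super().__init__()
        self.depth = 0  # current nesting depth of <table>
        self.stray = 0  # </table> tags with no matching <table>

    def handle_starttag(self, tag, attrs):
        if tag == "table":  # HTMLParser passes tag names in lower case
            self.depth += 1

    def handle_endtag(self, tag):
        if tag == "table":
            if self.depth:
                self.depth -= 1
            else:
                self.stray += 1


def unclosed_tables(html):
    """Return the number of <table> tags that never get a </table>."""
    parser = TableBalance()
    parser.feed(html)
    parser.close()
    return parser.depth
```

A nonzero result for a saved page means appending that many </table> tags at the end should, per the suggestion above, let Netscape render it.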

Fri, 25 Feb 2000 10:46:34 -0500

From: Jeff Rose

Subject: Main LG Logo ...

Greetings,

Agreed, I am *not* any type of GIMP-guru [see _my_ horrible non-aliasing problematic logo] ... however, a suggestion:

The Linux Gazette Main Logo is, well, ugly!

Perhaps you could encourage the readership to submit their own logo creations for Linux Gazette and have fun while making an improvement at the same time?

Hope this sounds like a plan,

Jeff

--
 ( >-   Jeff Rose - everyone's Linux User Group (eLUG)    -< )
 /~\    http://www.elug.org mailto:[email protected]        /~\
| \)    *** Freelance Linux/IT Writer ***                 (/ |
|_|_    eFAX: +1.630.604.4130                             _|_|

[The staff and readers of LG are divided over whether it's time for a new logo. I myself am undecided. But I can do this:

If you or any readers wish to submit artwork for the Gazette, I will put them in a "Linux Gazette Art" article so we can all see them. They will then be available when I go looking for something to jazz up a page with.

This was how the "Penguin Reading the Gazette" image at right found a home on the LG FAQ page. We had the existing image from the days when Margie was the Editor of the Gazette. (She still retains the title "Ruler of the Gazette"--she refused to give me that title! :) I liked it, used it in one of my Not Linux columns, and then decided it would make a good image for the FAQ.

The LG logo is 600x256, and any new logo should probably be the same size. The Penguin Reading the Gazette is 161x160; most page decorations will want to be more or less that size. Page decorations are a lot more likely to be used in future Gazette issues than logos are, because there can be only one logo. All images should have a Linux Gazette theme in their content somehow.

With this in mind, send in some art! -Ed.]

Fri, 25 Feb 2000 13:07:44 -0500

From: Jason Fink

Subject: Pundit Here, Pundit There, Pundits Everywhere

Pundits. Had enough of that word yet? I know I have, and I also know there are a few of these so-called experts whose teeth I would like to knock out (I guess the web equivalent would be breaking their knuckles or something). In all honesty, since when did becoming an expert in one area of technology suddenly make one an absolute know-it-all about all technology? This has suddenly become the new thing to be: an industry pundit, a technology pundit, or whatever. A pundit, by the definition I refer to, is an accomplished expert in a particular discipline. This e-world of ours has suddenly given anyone who has the time and tenacity to become a pundit the opportunity to do so. Those who have been in the industry a long time have received that label, and rightfully so, for excelling in a particular field. All of a sudden, however, many of these pundits are breaking away from their disciplines and yammering about others. I would rather they did not.

This is the group that really irritates me. They started out as people who knew a lot about something, and in some cases they outright contributed to making something, setting a trend or engineering a brilliant new process. While these people should be (and have been) commended, after a while they suddenly become experts in other fields as well, and now they can forgo validation. Why that is, I have no idea. One can compare it to actors or music stars who suddenly feel they should become involved in politics and that their ideas are right. While there is a difference of sorts, the parallel is obvious: I'm a somebody now, so everything I say should be taken seriously.

This sort of abuse of journalistic power needs to stop; someone needs to put an end to it. The reason is not just ethics; in my opinion, it has more to do with quality. If a person is known for insightful columns on a given subject, chances are I will read them. The second they step into unknown territory and blunder, they lose credibility in my eyes. While I may be just one person, I highly doubt I am the only one who stops reading a writer's material after a blunder.

Another point many administrators have brought to my attention is the pointy-haired-boss syndrome. A Glassy-Eyed Suit (IS Department Head) reads a well-known, well-respected pundit's column. It must be true, right? Those who know no better cannot be protected by the sane. A pundit writing about something outside the sphere of their knowledge is in fact damaging the industry as a whole: knuckleheads across the world will do a 180 based upon what some guy at some zine printed. Surely this is reason enough not to write arbitrarily about something.

Word has it that if you secretly embed the word Linux on Coke cans, Coke sales will skyrocket. Zines across the globe are printing Linux this and Linux that, all for the sake of advertising dollars. That, in itself, is disgraceful. It is bad enough that there are so many 2-bit sites in general; the fact that established journalists are using Linux to bring in the hits is repulsive.

So am I being hypocritical? In a sense, yes, because I really do not know much about journalism; then again, I am not a pundit, now am I? This trend has been going on for a long time now. It is my hope that, by reading this, you as a reader will take the extra step to verify the information source, and perhaps those readers out there who are writers will think twice about their next article.

[The thing about Linux on Coke cans reminded me of something somebody said at the Python conference: a Linux Janitorial Service could go IPO just because it has the word "Linux" in its name. Then the stockholders would be surprised to realize the company was serious about the "Janitorial" part. -Ed.]


March 2000 Linux Journal

The March issue of Linux Journal is on the newsstands now. This issue focuses on Linux training.

Linux Journal has articles that appear "Strictly On-Line". Check out the Table of Contents at http://www.linuxjournal.com/issue71/index.html for articles in this issue as well as links to the on-line articles. To subscribe to Linux Journal, go to http://www.linuxjournal.com/subscribe/index.html.

For Subscribers Only: Linux Journal archives are available on-line at http://interactive.linuxjournal.com/

BlueCat

SAN JOSE, Calif., 7 February 2000 - Lynx Real-Time Systems, Inc., today announced the availability of BlueCat(tm) Linux, Release 1.0, Lynx' version of Linux for high-availability, high-reliability embedded applications. BlueCat Linux is part of the LynuxWorks(tm) suite that allows embedded systems development of both BlueCat Linux and the LynxOS(r) real-time operating system with a common compatible tool set.

BlueCat, a tested and stabilized Linux version based on Red Hat 6.1, is binary compatible with Linux applications, system and development tools and drivers to allow rapid, efficient embedded deployment. Lynx provides cross development support for BlueCat to a variety of popular embedded processors and includes an advanced test methodology to ensure quality and reliability.

"BlueCat and its development environment, LynuxWorks, has established Lynx as a key player in the embedded Linux market," said Rick Lehrbaum of LinuxDevices.com, the embedded Linux portal. "By taking the bold strategy of 'competing' against themselves in delivering BlueCat Linux, Lynx has provided a viable business model that will avoid fragmenting Linux. Real-time support is an important option for embedded developers who are choosing Linux as their operating system."

www.bluecat.com.

Corel

Corel Delivers Macromedia Flash to Linux

Hard Hat

Bellevue, Wash., February 8, 2000 - GoAhead(R) Software today announced that MontaVista Software Inc. is shipping GoAhead WebServer with their recently announced Hard Hat Linux(R), a Linux operating system for embedded applications. GoAhead WebServer is an open source, embedded Web server that provides a secure, flexible and free way to access remote devices and appliances via standard Internet Protocols.

GoAhead WebServer leverages embedded JavaScript, a strict subset of JavaScript optimized for small footprint environments. It is the only open source, embedded Web server that uses Active Server Pages (ASP), embedded JavaScript and in-memory CGI processing to deliver a highly efficient method of dynamic Web page creation. GoAhead WebServer also features a ROM packaging utility for Web pages.

GoAhead's web site is http://www.goahead.com. The source code for the GoAhead WebServer is at http://www.goahead.com/webserver/wsregister.htm. Support for GoAhead WebServer is available through a collaborative Usenet newsgroup, news://news.goahead.com, in which GoAhead is an active participant.

Information on Hard Hat Linux is on MontaVista's web site, http://www.mvista.com. (See the picture of a penguin wearing a yellow hard hat in the top left corner of this page.)

Linux for Windows

Linux for Windows has been released in the UK. It is based on Linux-Mandrake 6.1, and requires no partitioning or reformatting since it runs from a file within Windows. For details, see the Macmillan software web site.

Linux-Mandrake

NEW YORK - (LinuxWorld); February 2, 2000 - Macmillan USA, the Place for Linux (http://www.placeforlinux.com), announced it is shipping Complete Linux-Mandrake 7.0 for beginning and intermediate Linux users. Macmillan's new product simplifies the process of installing the Linux operating system for first-time users and includes in-depth user reference guides, technical support, and a complete suite of desktop productivity applications. Complete Linux-Mandrake 7.0 is available now at an MSRP of U.S. $29.95.

System Requirements for Complete Linux-Mandrake 7.0: CPU Intel Pentium

Red Hat

Red Hat Expands European Operations to France and Italy

SPIRO-Linux

A description of SPIRO-Linux is included in the "Configure your network from a web cell phone" announcement below.

Storm

Storm Linux 2000 Makes European Debut

SuSE

SuSE has appointed Sysdeco Mimer as its first Business Partner in Sweden. Sysdeco Mimer will be providing their MIMER DBMS technology on the SuSE Linux distribution. MIMER is characterised by ease-of-use, high performance, scalability, openness and high availability. Among the thousands of MIMER users all over the world are Volvo, Swedish Telecom, Ericsson, Hammersmith Hospital (UK) and English Blood Authority. MIMER Personal Edition for Linux is available for free download from http://www.mimer.com/download.

LINUXWORLD EXPO 2000, NEW YORK, NY (February 2, 2000) - SCO and SuSE Linux AG today announced plans to deliver SCO's Tarantella web-enabling software to SuSE Linux customers worldwide. SCO will bring Tarantella web-enabling software to the SuSE Linux platform and work with SuSE to market the software to its customers.

TurboLinux

TurboLinux Adds E-Commerce Suite to New Server

C.O.L.A news

Upcoming conferences & events

| Event | Dates | Location | Web site |
|---|---|---|---|
| Linux Open Source Expo and Conference | March 7-10, 2000 | Sydney, Australia | www.linuxexpo.com.au |
| Game Developers Conference | March 10-12, 2000 | San Jose, CA | www.gdconf.com |
| Software Development Conference & Expo | March 19-24, 2000 | San Jose, CA | www.sdexpo.com |
| Singapore Linux Conference (SLC) | March 23-25, 2000 | Singapore | www.slc.com.sg |
| Colorado Linux Info Quest | April 1, 2000 | Denver, CO | thecliq.org |
| Montreal Linux Expo | April 10-12, 2000 | Montreal, Canada | www.skyevents.com/EN/ |
| Spring COMDEX | April 17-20, 2000 | Chicago, IL | www.zdevents.com/comdex |
| HPC Linux 2000: Workshop on High-Performance Computing with Linux Platforms (in conjunction with HPC-ASIA 2000: The Fourth International Conference/Exhibition on High Performance Computing in Asia-Pacific Region) | May 14-17, 2000 | Beijing, China | www.csis.hku.hk/~clwang/HPCLinux2000.html |
| Linux Canada | May 15-18, 2000 | Toronto, Canada | www.linuxcanadaexpo.com |

Open Source Equipment Exchange

Hauppauge, NY - February 8, 2000 - BASCOM Global Internet Services, Inc. (BASCOM), pioneering developers of Linux-based thin server productivity solutions for small business, today announces an initiative in keeping with the collaborative spirit of the Open Source movement. BASCOM's Open Source Equipment Exchange (osee.bascom.org) is designed to match those donating computer equipment with those in the Open Source community needing to add infrastructure to further their development efforts and spur innovation toward the continued evolution of the Linux operating system.

The OSEE web site will link those donating equipment with those in the Open Source community in need of hardware resources. Since the Linux OS requires relatively modest "horsepower" to drive it, older class computers that have outlived their use in corporate environments will be given renewed life in the hands of Open Source developers.

Help wanted - literally

Ken French, BScH, MSc

Consultant, IT Division

Multec Canada Ltd.

200 Ronson Drive, Suite 204

Telephone: (416) 244-2402 ext. 105

Email:

Web: www.multec.ca

Global Linux Event Series

MILFORD, CT - 11 February 2000 - Advanstar Communications, Inc., parent company of LINUX Canada and Advanstar Expositions Canada Ltd., and Specialized Systems Consultants, Inc., publishers of Linux Journal, announced today a strategic alliance to create a series of global conferences and expositions for the Linux community.

The Linux event series launches in Canada, May 15 - 18, 2000 at the Metropolitan Toronto Convention Centre. The inaugural event, LINUX Canada, will consist of multiple conference sessions and an exhibit hall with leading-edge, Linux-related exhibitions. Three visionary keynote presentations are also confirmed, including: Robert Young, chairman & CEO of Red Hat, Tuesday, May 16; Linus Torvalds, creator of the Linux operating system, Wednesday, May 17; and, Ransom Love, title of Caldera Systems, Thursday, May 18. The event has already gained strong support from the Canadian Linux Users Exchange (CLUE) and the Toronto Linux Users Group (TLUG).

What is the Future of OS?

Portland, OR, February 15, 2000 - Representatives from Caldera Systems, GartnerGroup, Novell Corporation and Red Hat will debate "The Future of OS on Desktop PCs and Servers" on Friday, March 10th at noon, announced System Builder Summit today. The panel discussion will take place in conjunction with System Builder Summit Spring 2000, being held March 8-11th at the Desert Springs Marriott Resort and Spa in Palm Desert, California.

In addition to the OS panel, the agenda for System Builder Summit Spring 2000 includes keynotes by Michael Tiemann, CTO of Red Hat; Jim Yasso, Vice President of Intel Corporation; and Dan Vivoli, Vice President of Marketing at nVIDIA. Through roundtable sessions, theater presentations and exhibits, more than 100 technology vendors will offer a preview of year 2000 roadmaps, products, services and programs that cover the entire technology spectrum from the desktop to the server to Internet-enabled computers and communication. Among them are 3COM Corporation, AMD, ATI Technologies, Canon Computer Systems, Conexant, D&H Distributing, Imation, Ingram Micro, Intel Corporation, Logitech, NASBA, Novell, nVIDIA, Quantum and Techworks. http://www.systembuildersummit.com

GetTux.com closes shop

Unfortunately, due to a lack of funding, GetTux.com is ceasing business. This is a most unpleasant situation, and we are not thrilled to have to send out this notice, but, without major funding, we are unable to advertise, unable to increase staff, and are thus unable to advance as a company. We had meetings with several large investors, but, unfortunately, no one was able to invest in us, while at the same time leaving us control of the company and its direction. What a sad thing the business world can be.

As several of you know, the company was run, on a day to day basis, by me, Scott McDaniel. Website, promotions, product, research and all. We really needed a few other people, and, without that funding, we couldn't continue.

We certainly felt that we offered a unique and useful product, but, again, without the funding we hoped to receive, we were forced to discontinue service. Hopefully, the Linux community will thrive (of course it will!), and someone may indeed resurrect the idea of a monthly subscription service. I know that several of you are in Linux-related businesses, some of you in the print media, and others are part of Linux-related websites.

GetTux.com was formed in the hope that we could advance the use of Linux, and that its ease of use would be promoted by giving users updates to documentation and applications. We are fans of Linux. We are users of Linux. We are part of the community. And we are sorry we are unable to continue to serve you in this capacity.

In the event that anyone in the community would like to use the GetTux.com domain, or would like the product we were selling, business plan and all, please contact me personally, .

Anyway, back to the sad business: Current subscribers will be given a FULL refund. Consider the first month of your subscription a gift from other Linux aficionados. If you have a refund related question, please email .

Again, my apologies for any inconvenience, and, again, I wish to thank each and every customer that allowed us to serve them.

Linux Links

VA Linux Acquires Andover.Net for $800M

IBM Expands Linux Investment

VA Linux Expands SourceForge Online Development regarding KDE and the CMU Sphinx speech recognition system.

The Atlanta Linux Showcase has a call for papers. Submissions are due April 17 for the Extreme Linux Workshop, and May 1 for the Hack Linux/Use Linux Tracks.

eSoft and Gateway are partnering to advance Linux-based Internet software and services to small and mid-sized businesses.

Python and Tkinter Programming is a new book by John E. Grayson, published by Manning Publications.

www.whichrpm.com is a site that helps you find Linux software in the RPM format. Over 35,000 software packages are indexed, allowing you to find, for instance, a package that lets you talk to your PalmPilot.

Using Linux in Embedded and Real-time System is a white paper on why Linux is ideal for this environment.

Extreme Programming was mentioned in Jason Steffler's article, but I want to mention it again because it's a cool site. It's "a gentle introduction to Extreme Programming", which is one of those strategies for getting product development synchronized with the client's expectations.

Metran Technologies has updated the HTML version of Using Samba, and placed it on a more reliable web server.

The Linux-Net Project

The Linux-Net Project, http://linux_net.tripod.com (temporary URL), seeks to modify Linux to allow your household computing devices to become intelligent "terminals". For instance, your refrigerator may notice that milk is running low and, being a terminal, ask the server to put "Milk" on the shopping list. You then get the shopping list from your PDA, which is also a terminal, and see that you should get milk. It could even be extended so that the server watches how much milk, or any other product, your family consumes and adjusts the amount you should buy accordingly.
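The refrigerator-to-PDA scenario above boils down to a server that keeps a shopping list and sizes each entry from recent consumption. The following is only a rough sketch under our own assumptions: the class and method names are hypothetical (they are not part of the Linux-Net Project), quantities are whole units, and "recent consumption" is taken as the average of the last few shopping periods, rounded up.

```python
from collections import defaultdict, deque


class HouseholdServer:
    """Hypothetical home server that keeps a shopping list for "terminal" devices."""

    def __init__(self, history_len=4):
        self.shopping_list = {}  # item -> suggested quantity
        # item -> usage per shopping period; only the last history_len periods are kept
        self.usage = defaultdict(lambda: deque(maxlen=history_len))

    def record_usage(self, item, amount):
        # Called once per shopping period with how many units of the item were used.
        self.usage[item].append(amount)

    def suggested_quantity(self, item, default=1):
        history = self.usage[item]
        if not history:
            return default
        # Average recent consumption, rounded up to a whole unit (integer ceiling division).
        return max(default, -(-sum(history) // len(history)))

    def report_low(self, item):
        # A terminal (e.g. the refrigerator) reports that an item is running low.
        self.shopping_list[item] = self.suggested_quantity(item)

    def get_list(self):
        # A terminal (e.g. the PDA) fetches a copy of the current shopping list.
        return dict(self.shopping_list)


server = HouseholdServer()
for litres in (2, 3, 3):  # milk used in the last three periods
    server.record_usage("milk", litres)
server.report_low("milk")
print(server.get_list())  # {'milk': 3}
```

Averaging over a short sliding window is just one simple choice; it lets the suggestion follow changes in a family's habits without reacting to a single unusual week.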

Cobalt Qube 2 and the Phoenix Adaptive Firewall

COLUMBUS, OH (February 1, 2000) - Progressive Systems, Inc., a leading provider of Linux-based network security solutions, and Cobalt Networks, Inc., a leading developer of server appliances, today announced a new firewall appliance solution based on Progressive's Phoenix Adaptive Firewall, the only firewall for Linux to be both ICSA and LinuxLabs certified, and Cobalt's award-winning Qube 2 server appliance. The combination will allow small- to medium-sized businesses, workgroups, branch offices, schools and government institutions greater flexibility in establishing network security policy and design.

With the Phoenix firewall on the Qube 2, Progressive extends both its relationship with Cobalt and its existing offering of Linux-based firewall appliances. The partnership with Cobalt allows Progressive to deliver security solutions designed to meet a range of customer needs and opens Cobalt's customer base to Progressive. The Phoenix firewall on the Qube 2 joins the existing Phoenix firewall on the Cobalt RaQ 2, a Linux-based firewall appliance that allows service providers and resellers to deliver security products and managed services to small-to-medium-sized businesses, branch offices, schools, and government institutions. The addition of the Phoenix on the Qube gives customers an easy-to-use, high functionality premise-based solution that satisfies the needs of individual businesses and institutions. Progressive and Cobalt enjoy synergies in their product and sales organizations, allowing the companies to leverage their respective channel and product strategies.

The Phoenix Qube will retail for $2,495 with unlimited users.

OpenTape.org

Boulder, CO - January 31, 2000 - Ecrix Corporation and the Linux Fund today announced OpenTape.org, a new nonprofit web site supporting the open source software movement. OpenTape.org offers users access to technical information about data backup hardware and software for the Linux operating system. All advertising and vendor participation revenues generated by the site go to the Linux Fund, a non-profit organization that supports Linux programmers with development grants and university scholarships.

LinuxLinks.com launches free e-mail service to rival Hotmail

LinuxLinks.com is proud to announce the launch of a free web based email service targeted specifically for the Linux community. This service allows users to send and receive email on any computer that offers a web browser. Now you can deal with email anywhere in the world in a discreet and secure manner whilst at the same time promoting the Linux cause.

Some of the features provided include: a [email protected] email address, lightning fast service, attachments, folders, address book, spam filters and POP mail retrieval. There are also many preference options, allowing users to enhance their messages with different font styles, sizes and colors. A built-in help facility is also provided.

To complement this rich set of features we also provide message searches, personal signatures, aliases, and a personal profile to make a complete FREE web email service.

For more information and to sign up please visit http://www.firstlinux.net/

This service is the latest addition to the FirstLinux network, which currently comprises LinuxLinks.com and FirstLinux.com.

Lineo to appear in hotel/apartment set-top boxes

LINDON, UTAH - Jan. 18, 2000 - Lineo, Inc., developer of embedded Linux system software; Elitegroup Computing, manufacturer of system board products; and Bast, Inc., provider of set-top hardware, today announced an agreement to use Lineo Embedix Linux 1.0 and Embedix Browser for Bast's line of embedded Linux-based set-top hardware. These devices will be installed in hotel rooms and apartment buildings in the United States, Europe and Asia. This Embedix-based Web browser is the first Internet appliance to provide hotel and apartment managers a low-cost, convenient way to give customers easy access to the Internet from an ordinary television set via a broadband network maintained by the property owner. Lineo: www.lineo.com

Elitegroup: www.ecsusa.com, www.ecs.com.tw

Bast: www.bastinc.com.

G.lite: Linux-based DSL modem

Silicon Automation Systems Limited today extended its Synapse range of xDSL products by introducing a G.lite solution for Linux. This is the world's first Linux based internal G.lite modem offering the end-user enhanced data rates over the existing copper lines.

Mr. Deepak Gupta, Vice President, SAS, says, "External ADSL modem solutions available in the market today are expensive and bulky. They are not suitable for the Internet appliance market where cost and size are critical. Synapse G.lite for Linux is an extremely attractive solution for OEMs looking for providing ADSL access to their Linux based appliances. Providing G.lite under Linux is a pioneering step towards mass deployment of ADSL and in firmly establishing it as the technology of choice in the Internet access market," he added.

The Synapse G.lite for Linux modem provides data transfer rates of 1.5 Mbit/s downstream and 512 kbit/s upstream while allowing simultaneous access for analog voice telephony. It is fully compliant with the ITU-T G.992.2 standard and has been interoperated with most major DSLAM vendors. Moreover, the performance of the system exceeds the levels mandated by the standard. www.sasi.com

New Red Hat CTO to address system builders

Portland, OR, January 31, 2000 - Michael Tiemann, Chief Technical Officer (CTO) of Red Hat, will address system builders and technology vendors from North America and Europe at the Opening Reception of System Builder Summit on Wednesday evening, March 8th. A technical leader in the industry, Tiemann will provide strategic insight into the open source movement and the impact it will have on current industry and channel models. System Builder Summit takes place March 8-11th at the Desert Springs Marriott Resort and Spa, in Palm Desert, California.

Tiemann was named Chief Technical Officer of Red Hat on January 12th of this year. Prior to this, he was co-founder and acting CTO of Cygnus Solutions, which Red Hat acquired this month. In his new role, Tiemann is responsible for communicating Red Hat's strategic direction to the company's customers and ensuring that Red Hat technologies meet their long term business requirements. www.systembuildersummit.com

C.O.L.A software news

Internet phone for Linux

Phone is a Linux client program that lets you talk with other people on the Internet by voice over a full-duplex connection. The requirements are:

Your identity is based on your email address. The server maintains a database of who is online and everyone's contact list. You can find out if your friends are on line if they are in your contact list and you're in their contact list. Similar to ICQ.

The program is very new and is command line based. Lots of improvements are planned, should it become popular. The client is free and open source. The matching service is free until further notice.
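The presence rule described above (you can see whether a friend is online only if each of you has the other in your contact list) is easy to state in code. The sketch below is our own illustration, not the program's actual server: the `PresenceServer` class, its methods and the example addresses are all hypothetical, and it only models the in-memory bookkeeping, with no networking.

```python
class PresenceServer:
    """Hypothetical sketch of an ICQ-style presence server keyed by e-mail address."""

    def __init__(self):
        self.online = set()  # e-mail addresses of users currently logged in
        self.contacts = {}   # e-mail address -> set of contact e-mail addresses

    def add_contact(self, user, friend):
        self.contacts.setdefault(user, set()).add(friend)

    def login(self, user):
        self.online.add(user)

    def logout(self, user):
        self.online.discard(user)

    def can_see(self, viewer, friend):
        # Presence is visible only when each user lists the other.
        return (friend in self.contacts.get(viewer, set())
                and viewer in self.contacts.get(friend, set()))

    def online_friends(self, viewer):
        # Online contacts whose presence the viewer is allowed to see, sorted.
        return sorted(f for f in self.contacts.get(viewer, set())
                      if f in self.online and self.can_see(viewer, f))


server = PresenceServer()
server.add_contact("alice@example.org", "bob@example.org")
server.add_contact("bob@example.org", "alice@example.org")
server.add_contact("alice@example.org", "carol@example.org")  # one-way only
server.login("bob@example.org")
server.login("carol@example.org")
print(server.online_friends("alice@example.org"))  # ['bob@example.org']
```

Carol is online but does not list Alice, so Alice cannot see her. Requiring the relationship in both directions keeps users from tracking someone who has not agreed to it.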

CodeCatalog and cyclic.com acquired by OpenAvenue

Scotts Valley, CA -- Paul Hessinger, chairman and chief executive officer of OpenAvenue, Inc. (www.openavenue.com), today announced that the company has acquired CodeCatalog and Cyclic.com from SourceGear Corporation. OpenAvenue is a privately-held, business-to-business company specializing in Web-based hosting, management, and distribution of worldwide collaborative software development projects. OpenAvenue hosts application development content provided by individual and corporate content owners, and makes it available to a worldwide community of software developers under open source, community-based, and private licensing.

A Web-based open-source code search engine, CodeCatalog (www.codecatalog.com) provides a fast reference tool to search and browse a source code repository which currently contains more than 20 million lines of open-source code from Linux, Mozilla, KDE, GNOME, and others. All of the most popular open-source projects are represented, searchable, browsable, and cross-referenced against each other. CodeCatalog is built upon a variety of open source technologies, including Linux. OpenAvenue will integrate CodeCatalog into its OAsis infrastructure.

OpenAvenue also acquired SourceGear's Cyclic.com, a popular Web destination for developers using the Concurrent Versions System (CVS), the leading version control tool for open-source developers. OpenAvenue will further SourceGear's initiative to support the open community development of CVS, work to enhance its functionality, and remain committed to its status as free software.

e-speak.net

SAN FRANCISCO, CA--Collab.Net announced today that it has entered into an agreement with Hewlett-Packard Company to build, host and maintain the Open Source software development infrastructure and Web site (www.e-speak.net) for e-speak technology. E-speak, HP-developed software that is now available as Open Source software, dramatically simplifies the creation, composition, deployment, management, and maintenance of e-services over the Internet.

Collab.Net is providing Web-based development life-cycle management services including code versioning, bug tracking, and email discussion forums that are key to a well-run Open Source software community.

As a part of this effort, Collab.Net is providing a hosting platform consisting of several major, well-known packages of Open Source software combined into a cohesive offering:

- Concurrent Versions System (CVS) repositories
- Bug tracking using the Bugzilla software from the Mozilla Project
- Mailing lists, archived and searchable
- Source code browsing tools
- An administrative interface for "admins" from the e-speak community

iPlanet web server from the Sun-Netscape Alliance

MOUNTAIN VIEW, Calif. - January 19, 2000 - The Sun-Netscape Alliance (Alliance) today announced iPlanet(TM) Web Server, Enterprise Edition 4.1 and the new FastTrack Edition 4.1 software, both of which now include support for the Linux operating system and the latest Java(TM) technologies. With these additions, iPlanet Web Server software now gives users an even wider degree of choice and flexibility in using iPlanet Web Server software on the operating system - or combination of operating systems - that best meets their business and Internet system requirements.

The new iPlanet Web Server, FastTrack Edition 4.1 software was specifically designed to meet the needs of developers and will be offered for free. FastTrack Edition 4.1 contains nearly all the core application development and administrative features of Enterprise Edition 4.1 that developers need to build rich, dynamic Web applications. iPlanet Web Server, Enterprise Edition 4.1 software continues to meet the needs of enterprises and service providers who require a high performing, scalable Web server to power their mission-critical Internet applications.

CNN To Power Web Sites With iPlanet Web Server 4.1 Software

"The CNN Web sites have relied on Netscape Web Servers to meet the world's tremendous demand for news ever since CNN.com's initial launch in 1995. We have served billions of pages with Netscape Enterprise Server 2.01, and now it is time to move forward," said Sam Gassel, chief systems engineer for CNN Internet Technologies. "Our initial testing shows that iPlanet Web Server 4.1 software should be a highly scalable and reliable release. As we offer a more dynamic and personalized service, our users will benefit from the improvements in core functionality and new features such as integrated support for the latest Servlet and JavaServer Pages(TM) (JSP) specifications."

A pre-release version of iPlanet Web Server, Enterprise Edition 4.1 software on Linux is currently available on the iPlanet Web site, at www.iplanet.com/downloads/testdrive/index.html, for trial use and feedback. iPlanet Web Server, Enterprise Edition 4.1 software on the Linux, NT and Solaris(TM) Operating Environment is scheduled to be available for purchase in early March, 2000. iPlanet Web Server, Enterprise Edition 4.1 software with support for HPUX, AIX and Tru64 (Compaq/DEC) is scheduled to be available in early April, 2000. iPlanet Web Server, FastTrack Edition 4.1 software is scheduled to be available on all supported operating systems--Linux, Solaris, NT, AIX, Tru64 and HPUX--for free on the iPlanet Web site in early May, 2000. iPlanet Web Server, Enterprise Edition 4.1 software is priced at $1495 per CPU.

Configure your network from a web cell phone

January 24, 2000 - Wayne, NE - SPIRO-Linux announces the development of a Linux Administration System, SPIRO-Linux WETMINtS, a powerful web-based administration interface for Linux systems.

Using WETMINtS, you can configure DNS, Samba, NFS, local/remote filesystems and more from your web-enabled cellular phone. WETMINtS is simple software for web-enabled cellular phones and consists of a number of CGI programs that directly update system files. WETMINtS supports SPIRO-Linux and other Linux distributions. Standard operations available with WETMINtS include:

About SPIRO-Linux

SPIRO-Linux is the only distribution that comes with five easy-to-use Server installations (Mega Server, Web Server, Application Server, Name Server, File and Print Server) and three Workstation installations (General Purpose, Development and Graphics), plus an upgrade path and a custom install. SPIRO-Linux includes a built-in office suite containing a word processor and a spreadsheet. In addition, SPIRO-Linux can import Microsoft Word documents into Linux.

Currently, SPIRO-Linux is pursuing relationships with different OEM manufacturers in need of a more robust and easier-to-use version of Linux. SPIRO-Linux is published under the GNU General Public License.

[Just before press time, I couldn't get to www.spirolinux.com. It could be on http://www.openshare.net according to a web search, but that site is under renovation. However, Walnut Creek has a version of SPIRO-Linux for download at ftp://ftp.cdrom.com/pub/linux/sunsite/distributions/spiro/i386/SPIRO/RPMS/ . If anybody at SPIRO-Linux reads this, please send the correct URL to . -Ed.]

Xi Graphics Product Line for Linux

Denver - Xi Graphics Inc. has announced a new web-based product line of graphics hardware drivers for Linux. In making the announcement, Xi Graphics' National Sales Manager, Lee Roder, said the company would continue to offer its existing Accelerated-X Display Server line of products for Linux and Unix operating systems.

"Desktop Linux is coming fast, and we're going to be there," said Roder. "We produce higher quality drivers more efficiently than anyone else in the business. Linux gamers and developers alike can easily download, demo and purchase our drivers from the Web site at affordable prices -- it's good for us and it's good for Linux."

The 3D Linux drivers are OpenGL 1.1.1-compliant, support libGLU and libGL, and are available for download from Xi Graphics' web site (www.xig.com) starting at $29 each. A limited number of drivers are currently available, but more are being added weekly.

Roder said each driver is priced according to a number of factors, such as the hardware capabilities of the card, the difficulty of developing and testing the driver, and the likely sales volume of the driver.

"Our new line of graphics support for Linux in desktop installations is a result of requests from our customers," said Roder. "It gives them the ability to very quickly get superb graphics support for their specific hardware at a price that is quite economical."

The new 3D Linux driver products are sold only over the Web and are available as freely downloadable, limited-run-time demos. The demos can be run on the customer's hardware to confirm compatibility and performance before purchasing. Customers use a registration system to purchase a key through Xi Graphics' web site. The key is e-mailed back to the customer; it unlocks the demo and converts it into the standard product.

Other software

Magic Enterprise Edition V.8 is a toolkit for building e-commerce and enterprise software directly on Linux.

clobberd 4.16 is a daemon that monitors user activity and network interface activity. Available at Linux FTP sites.

TotalView 4.0 is the first parallel debugger to support multiple development platforms for both traditional UNIX and Linux.

Babylon remote-access software from Spellcaster is now open-source.

Cyclades has released version 6.5.5 of the Cyclom-Y and Cyclades-Z Linux driver. (In the Tech support section of the web site.)

EZHTML makes the arcane art of HTML accessible to everyone with an easy-to-use interface and a well-thought-out design. Tags are arranged in categories and grouped with related tags. The built-in search function makes it easy to find the one tag you are looking for, saving time. A free 14-day trial is available.

Igloo FTP has released a beta version of their commercial FTP client, IglooFTP-PRO Beta 1.0.0pre1. Binaries for this, and the source for an earlier version (IglooFTP 0.6), are on the web site.

The Parallel Computing Toolkit from Wolfram Research, Inc. is a Mathematica application package that brings parallel computation to anyone having access to more than one computer on a network or to a multiprocessor computer.

Easy Software Products announces

The Answer Guy

Greetings from Jim Dennis

Many people have been known to quote Blaise Pascal:

"I have made this letter longer than usual because I lack the time to make it shorter."

In this month's Answer Guy, the Answer Guy himself learns some solid lessons in recovery planning. In the case of his editor, the lesson is to hit the Save key combination every once in a while. You never know when you're going to accidentally hit the key assigned to "quit this session without prompting." Thus:

"I have made this blurb shorter than usual because I lack the time to do it over."

Sorry, folks! And I wouldn't be the Answer Guy if I didn't have the answer for this. Emacs users, make sure to turn auto-save-mode on for your important buffers before you get rolling, and set your auto-save-interval to something other than NIL. Screen users, make sure to update -- the newer versions now ask before completely shutting down screen itself. And try not to use a keyboard with \ too close to ] so you're less likely to backwack that fascinating article you were writing.

isapnptools

From David B. Sarraf on Sun, 30 Jan 2000

James:

I recently read this question regarding the ethernet card. You mentioned that the card may be configured to a base address which autoprobe was not looking at. I agree that this may be a problem. You advised the reader to use boot-time parameters. That is a workable approach; however, I try to avoid it whenever possible.

I have had very good results using isapnptools, which came with my RedHat 5.1 distro. I used it to configure a modem card and a network card. Between the well engineered software and the excellent documentation the process was quite painless and eminently successful. Now I have cards that work and which run at well-defined and well-known addresses and IRQs and I don't need the boot parameters.

That's probably very good advice.

I've never used isapnptools and I usually forget that they even exist. Could you give an example of HOW you use them?

I recently ran into a similar situation with a 3Com card. It had been taken out of a PNP system and put into a stock ISA system. I knew it to be a PNP card, but I did not realize that it had been set to use the same IRQ as the floppy drive controller, and isapnptools would not detect this card. This caused much head scratching until I put it back in a PNP system and ran 3Com's "disable PNP mode" software. Still, I was successful at using the card without boot parameters.

None of this is meant as a criticism of your initial advice. It is just my experience with an alternative method.

Dave Sarraf

Actually I think I could use more criticism.
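Since the exchange above never shows one, here is a minimal sketch of the usual two-step isapnptools workflow. It assumes isapnptools is installed and that you are running as root; pnpdump and isapnp are the package's own programs, while the helper name setup_isapnp is made up for illustration.

```shell
#!/bin/sh
# Sketch of the usual isapnptools workflow (run as root).
# setup_isapnp is a hypothetical helper name; pnpdump and isapnp
# are the two programs shipped in the isapnptools package.

setup_isapnp() {
    conf=${1:-/etc/isapnp.conf}

    # Step 1: probe the ISA PnP cards and write a template listing every
    # resource setting each card supports. Everything in the template
    # starts out commented out, so nothing is configured yet.
    if [ ! -f "$conf" ]; then
        pnpdump > "$conf" || return 1
        echo "Now edit $conf: uncomment one IO/IRQ choice per card and its (ACT Y) line." >&2
        return 0
    fi

    # Step 2: program the cards with the settings chosen in the edited file.
    isapnp "$conf"
}
```

Run it once to generate /etc/isapnp.conf, edit the file so that no two cards (or a card and the floppy controller) share an IRQ, then run it again to program the cards. On Red Hat systems the boot scripts should run isapnp on /etc/isapnp.conf automatically when that file exists, so the cards come up at the same addresses on every boot; check /etc/rc.d/rc.sysinit on your own system to be sure.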

Removing an OS

From Paully0529 on Sun, 30 Jan 2000

I recently received a laptop which has Red Hat 5.1 installed on it. I would like to remove this OS but have no idea what the login password is. Is there any way around this?

You don't need a user/account password to remove any operating system. So long as you can control the boot sequence of the system (i.e. boot from floppy or CD) then you can boot up into something that will wipe out all that nasty stuff that you don't want on your new laptop's hard disk.

There are also ways for you to force a password change on a Linux box. I've described it several times --- but the basic sequence is something like this:

At the LILO: prompt type:

linux init=/bin/sh rw

... this will boot the system using the "linux" LILO stanza, and force the kernel to bypass the normal bootup process (by loading a command shell instead of the usual init process). It will also force the kernel to mount its "root" filesystem in "read/write" mode.

You can then type:

mount /usr

... which might not be necessary, and thus might give a (harmless) error message.

Then type:

/usr/bin/passwd

... and provide a new password (which you'll need to type twice).

Next you can type the following commands (ignoring some possible, harmless warnings and errors):

sync
umount /usr
mount -o remount,ro /
exec /sbin/init 6

Of course those directions are for people who want to take over a Linux system and preserve the programs, configuration and data on it. In your case you could do something more like the following at the LILO prompt:

linux init=/bin/sh rw

... and when you get a shell prompt just use:

dd if=/dev/zero of=/dev/hda

... (assuming that Linux is on your primary IDE drive).

NOTE: This last command example will WIPE OUT EVERYTHING ON YOUR PRIMARY IDE DRIVE! It will scribble strings of binary zeros (ASCII NUL characters) all over the drive wiping out everything. Don't use this unless that's really what you want to do!

(Note: on some systems you might have to use a "stanza" name other than "linux" --- hit the [Tab] key at the LILO prompt to see a list of options).
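If all you want is for the next operating system's installer to see an empty disk, zeroing just the first sector is usually enough: on a PC that 512-byte sector holds the boot loader and the primary partition table. Here is a minimal sketch, assuming the same /dev/hda drive as above; wipe_mbr is a hypothetical helper name. Unlike the full dd above, this leaves the old data on the disk, where a determined person could still recover it.

```shell
#!/bin/sh
# Sketch: blank only the first 512-byte sector (the MBR: boot loader plus
# primary partition table) instead of the whole drive.
# wipe_mbr is a hypothetical helper name. THIS DESTROYS THE PARTITION TABLE.

wipe_mbr() {
    dev=${1:?usage: wipe_mbr /dev/hdX}
    # bs=512 count=1: write exactly one sector of zeros.
    # conv=notrunc: only matters when "dev" is a regular file (handy for
    # practicing on a disk image); block devices are never truncated.
    dd if=/dev/zero of="$dev" bs=512 count=1 conv=notrunc
}
```

Usage, from the init=/bin/sh prompt: wipe_mbr /dev/hda. If you do want the full wipe, adding a larger block size such as bs=1024k to the dd command above typically makes it run much faster than dd's default 512-byte blocks.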

SysAdmins Note: If you want to prevent users from doing these sorts of things to their desktop systems (as a matter of policy for example) then you can set up a LILO password and mark the system as "restricted" in the /etc/lilo.conf file.

Of course this by itself will not be much "protection" -- you'll also have to mark the file as not readable by users other than root, restrict root access to the system, change the CMOS boot sequence to prevent booting from floppies, CD discs and other removable media, and set a CMOS/NVRAM password to prevent the users from changing the boot sequences back. On top of all that you'll have to pick a brand of PC/BIOS that doesn't have any known "backdoor" CMOS passwords and you'll have to lock the cases so that the users can't open them up to short the battery to clock chip leads, or otherwise reset the CMOS registers to their factory state. Those are all hardware security limitations of PCs, Macintosh and many of the other workstations. They are not OS specific issues.
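A sketch of a quick audit for the lilo.conf part of that advice. It assumes the password= and restricted keywords are placed in the global section of /etc/lilo.conf (they can also go inside an individual image stanza), for example a password=some-secret line followed by a restricted line. With restricted set, LILO asks for the password only when someone types boot parameters such as init=/bin/sh at the prompt. check_lilo_lockdown is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: check /etc/lilo.conf for a LILO password, the "restricted"
# keyword, and safe file permissions.
# check_lilo_lockdown is a hypothetical helper name.

check_lilo_lockdown() {
    conf=${1:-/etc/lilo.conf}
    status=0
    grep -q '^[[:space:]]*password[[:space:]]*=' "$conf" || { echo "no password= line"; status=1; }
    grep -q '^[[:space:]]*restricted[[:space:]]*$' "$conf" || { echo "no restricted line"; status=1; }
    # The password is stored in clear text, so group and others must not
    # be able to read the file (find -perm /044 is GNU find syntax).
    if [ -n "$(find "$conf" -perm /044)" ]; then
        echo "$conf is readable by non-root users (try: chmod 600 $conf)"
        status=1
    fi
    return $status
}
```

Remember that LILO only picks up changes to lilo.conf when you rerun /sbin/lilo.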

With most operating systems, you can boot up off their installation media and readily wipe out whatever happens to be sitting on the system by simply answering some silly install program warning. (Early versions of MS-DOS were pretty stupid in that they would refuse to remove or overwrite "foreign" or "unknown" partitions in FDISK regardless of a user's wishes. I don't know if they ever fixed that. I haven't installed any MS operating system on anything for several years).

InstallShield: Netscape 4.7

From Dr.S.Vatcha on Sun, 30 Jan 2000

Dear answerguy

Every time I attempt to set up Netscape 4.7, a browser error 432 comes up saying close UninstallShield and restart the setup. I have not, to my knowledge, opened UninstallShield, nor do I know of it existing in any files on my PC.

help shahrookh.

The problem here is probably that you are running MS-Windows or some derivative thereof. Last I heard InstallShield is a program for installing software on MS Windows systems. I've never heard of a version for Linux.

I presume also that your copy of Netscape (Navigator, Communicator, whatever you've got) is trying to launch "UninstallShield" to remove the older version of the NS software that you are trying to upgrade from. There's probably some sort of temp or "lock" file that is confusing their uninstaller.

Pretty pathetic programming, really. That sort of thing is one of the reasons I stopped using and supporting MS Windows so many years ago.

(BTW: I'm the Linux Gazette Answer Guy).

Console Goes Comatose After a Few Days

From kodancha on Sun, 30 Jan 2000

sir

I have installed Red Hat Linux 6.1 on PIII hardware with firewalls. It works fine, but every 3-4 days I have to reboot the system because of the following.

The system will not take any command. When I type anything the cursor moves, but no characters appear on the screen. Even Ctrl-Alt-Del is not working. But all other clients connected to this server have no problem. I tried stty sane, Ctrl-C etc., but it is not responding. Can you help me?

gjkodancha

Well, your description doesn't give me much to go on.

Is this in X? Are you using a kernel with frame buffer (graphics driven text mode) console support?

Try building a new kernel. Leave the system in text mode and disable any "VESA VGA Graphics" support in the kernel (in menuconfig under the "Console Drivers" menu). Be sure to enable the "Magic SysRq Key" under "Kernel Hacking." Read the docs about this "Magic SysRq" in the /usr/src/linux/Documentation/sysrq.txt file.
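Before building, you can confirm that those two choices actually made it into the kernel configuration. This sketch assumes the 2.2-series configuration symbols CONFIG_MAGIC_SYSRQ (the "Magic SysRq key" under Kernel hacking) and CONFIG_FB_VESA (the "VESA VGA graphics console"), and the usual /usr/src/linux/.config location; check_console_options is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: check a kernel .config for the console-debugging settings
# suggested above. check_console_options is a hypothetical helper name.

check_console_options() {
    cfg=${1:-/usr/src/linux/.config}
    status=0
    # "Magic SysRq key" under Kernel hacking should be built in.
    if ! grep -q '^CONFIG_MAGIC_SYSRQ=y$' "$cfg"; then
        echo "Magic SysRq key is not enabled"
        status=1
    fi
    # The VESA VGA graphics (framebuffer) console should be left out.
    if grep -q '^CONFIG_FB_VESA=y$' "$cfg"; then
        echo "VESA VGA graphics console is still enabled"
        status=1
    fi
    return $status
}
```

Run it after saving your choices in make menuconfig and before starting the build; no output means both settings are as described.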

Now, after you've built and installed the new kernel, when you reboot with it, use runlevel 3 (Red Hat: text mode multi-user mode) rather than runlevel 5 (multi-user with GUI/xdm login mode).

If the console seems to go comatose again, try using some of the Magic SysRq keys, particularly the p (processor status), t (task list) and m (memory status) diagnostics, and the k and r keys to kill everything on a given console and to "reset" the keyboard driver.

A couple of other things you can do:

Edit /etc/syslog.conf and add a line like:

*.* /dev/tty12

... (and restart your syslog daemon). This will copy all syslog messages to your twelfth virtual console. When you leave your system unattended, switch to that VC. If it appears comatose when you get back, look at the messages at the end.
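Here is that syslog.conf change as a small, re-runnable shell function, a sketch assuming the stock sysklogd daemon that ships with Red Hat 6.x; add_console_logging is a hypothetical helper name. A TAB separates the selector from the destination, since some older syslogd versions accept nothing else.

```shell
#!/bin/sh
# Sketch: mirror every syslog message to a virtual console (tty12 by default).
# add_console_logging is a hypothetical helper name.

add_console_logging() {
    conf=${1:-/etc/syslog.conf}        # config file to edit
    console=${2:-/dev/tty12}           # VC that should receive the messages
    line=$(printf '*.*\t%s' "$console")  # selector, TAB, destination
    # Append the rule only once, so running this again is harmless.
    if ! grep -qxsF "$line" "$conf"; then
        printf '%s\n' "$line" >> "$conf"
    fi
}
```

Usage, as root: add_console_logging && killall -HUP syslogd (a HUP signal makes syslogd re-read its configuration file; on Red Hat, /etc/rc.d/init.d/syslog restart does the same job).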

When you restart your system, look at the tail of the /var/log/messages file. That's where most system warnings and errors are logged.

Also you can try logging in via ssh (or telnet, rlogin or some other insecure protocol) and using the commands: chvt 1 or chvt 2 ... to force the console to switch to another VC. See if that works.

You can also run commands like

stty sane < /dev/tty1

And:

setterm -reset > /dev/tty1