This page maintained by the Editor of Linux Gazette, http://www.linuxgazette.com/. Copyright © 1996-2000 Specialized Systems Consultants, Inc.

Answers to these questions should be sent directly to the e-mail address of the inquirer with or without a copy to [email protected]. Answers that are copied to LG will be printed in the next issue in the Tips column.

Before asking a question, please check the Linux Gazette FAQ to see if it has been answered there.

Mon, 13 Mar 2000 17:07:37 -0400

From: DoctorX <>

Subject: Suggestions

I am from Venezuela, South America, and I think the next issue of "Linux Gazette" should contain something about Latin American Linux distributions and other projects developed in Latin America.

I am the leader of a project named HVLinux. HVLinux is a project to make a Venezuelan Linux distribution based on Slackware. For now (version 0.2.2) it runs in RAM, but the next version will run from the hard disk.

HVLinux HOME : http://www.hven.com.ve/hvlinux

This site is in Spanish.

It is only a suggestion

Wenceslao Hernandez C.

Wed, 1 Mar 2000 06:47:02 -0800 (PST)

From: Jim Coleman <>

Subject: Free ISPs for Linux???

This isn't exactly a burning question but I'd be interested in knowing if anyone in the Gazette readership knows of a free ISP that supports Linux. All of the ones I've checked out so far require Windows and/or Internet Explorer.

Thanks!

Thu, 02 Mar 2000 08:24:30 GMT

From: Brad Ponton <>

Subject: redhat 6.1 'hdc: lost interrupt' problems

I own a Panasonic 24x CD-ROM and a Quantum 2.5GB Bigfoot, and recently purchased a Seagate 17.2GB.

I am getting 'hdc: lost interrupt' constantly through the install (which plods along for about 2 hours). It then ends in what I think is supposed to be displayed text, only the screen starts displaying out of sync, drawing lines across the screen which look like very large (2 inch, or 5 centimetre) and unreadable letters. Thus the install ends (obviously incomplete).

Other problems with the computer which may have had an influence:

a) Installing the Seagate was extremely hard, requiring about an hour's worth of fiddling with IDE cables and jumper settings. Currently the Quantum is master on the primary IDE with nothing else, the CD-ROM is secondary master and the Seagate is secondary slave. This is about the ONLY way all devices are detected properly AND the CD is able to boot.

b) Before the Seagate was installed, I had (along with a queer 'randomly ordered' file display in Windows Explorer for only some directories) not been able to play audio CDs: they are either not detected by the Windows 'cdplayer' or jump from track to track when play is pressed, without playing anything. Data and video CDs continue to run fine.

Thank you for your time (and possibly help).

Sat, 4 Mar 2000 01:07:37 +0100

From: Kjell Ø. Skjebnestad <>

Subject: Help Wanted: XF86 3.3.6 vs ATi Rage 128

So. I need some help over here. X is not working properly with my spanking new ATi Rage 128 card ("bar code text"). Here's what I have done so far:

- Downloaded XF86 3.3.6 (binary), which supports the ATi Rage 128, to my Win98 disk.
- Installed a normal installation of Red Hat 6.1 (in text mode) to my Linux disk.
- Installed XF86 3.3.6 properly to my Linux disk, taking great care not to overwrite the original `xinitrc' provided by Red Hat.
- Ran XF86Setup and configured everything there; the XF86Setup test worked nicely.
- Ran `startx', which started Gnome etc.

The Gnome Help Browser popped up, but the text... it was all like bar codes (that is, only horizontal lines). Occasionally, perfectly legible text popped up when scrolling. The words `Gnome Help Browser' in the title line were perfectly legible all the time. And yes, I have entered the correct sync rates for my monitor. I presume this is a font problem, but the means to solve it I cannot begin to comprehend. Yea, I have clutched my brain rather avidly trying to get my system working properly. I have even tried deleting the font folder before installing XF86 3.3.6, and making sure that the font folder was regenerated when installing XF86 3.3.6.

So. In summary, the main problem seems to be the weird behaviour of text when using X ("bar code text"). Any help to solve this problem would be greatly appreciated.

Sun, 05 Mar 2000 02:15:18 -0800

From: Dianne Witwer <>

Subject: PLEASE HELP ME!

All right, I have the boot disk failure on my computer. I was reading the article, but I don't have a boot disk or a backup disk. Can I just reinstall Windows somehow without using a boot disk? Thanx, Josh

Mon, 6 Mar 2000 14:51:05 -0000

From: Anthony W. Youngman <>

Subject: RE: Clenning(sic) lost+found

I notice Ed said "it's often the sign of serious disk corruption". May I beg to disagree? Note that ALL of the files in lost+found are of type b or c. I don't know why or how this corruption occurs, but I found that (1) I couldn't delete them, and (2) the machine gaily carried on working with no sign of any problems.

The problem only went away when the computer was scrapped - it was a 386 and was replaced with a 686MX.

[It sounds like we agree. Perhaps I wasn't clear. Really weird permissions like "c-wx--S-w-" that no sane person would ever set even in their stupidest moments can be a sign that some hardware fault has zapped the system. Data corruption is the result, not the cause. The goal is then to find the cause or scrap the computer. Perhaps the cause was a one-time thing, as apparently happened in your case.

The files that wouldn't delete may have had their immutable or undeletable attribute set. The command lsattr (see manpage) shows which attributes a file has, and chattr -i -u will remove those attributes. Attributes are like permissions but refer to additional characteristics of files in the ext2 filesystem. However, lsattr /dev spews out a whole lot of error messages, so it may be that the command won't help with device files ("b" or "c" as the first character of an ls -l entry). -Ed.]
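To see which entries in a directory such as lost+found are device nodes (the "b" or "c" type mentioned above), a short script can be sketched. This is only an illustration, assuming Python is available; the helper name find_device_nodes is made up for this example, and it only lists entries without changing anything.

```python
import os
import stat


def find_device_nodes(directory):
    """Return (name, kind) pairs for block ("b") and character ("c") device nodes."""
    found = []
    for name in sorted(os.listdir(directory)):
        path = os.path.join(directory, name)
        mode = os.lstat(path).st_mode  # lstat: look at the entry itself, not a link target
        if stat.S_ISBLK(mode):
            found.append((name, "b"))
        elif stat.S_ISCHR(mode):
            found.append((name, "c"))
    return found


if __name__ == "__main__":
    # /lost+found is usually readable by root only; adjust the path as needed.
    try:
        for name, kind in find_device_nodes("/lost+found"):
            print(kind, name)
    except OSError as err:
        print("cannot read directory:", err)
```

Run as root, this prints one line per device node, which makes it easier to tell stray device files apart from ordinary recovered files before deciding what to do with them.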

Wed, 08 Mar 2000 11:43:04 -0700

From: T.J. Rowe <>

Subject: man-db package compilation problems

First of all, I'd just like to thank all those who replied to my previous postings here at LG and got me unstuck. =) I only like to resort to asking when I've exhausted all my own ideas. That being said, I've come up against another interesting challenge to which I've as yet found no solution. Here's the deal:

In compiling the man-db package (as at least one reader correctly guessed, yes, I'm following the LFS Linux From Scratch howto from here at LG), I get the following compilation error using both pgcc 2.95.2 and egcs 2.91.66, on both my new Linux partition and the "main" partition:

cp include/Defines include/Defines.old

sed -e 's/^nls = .*/nls = all/' include/Defines.old > include/Defines

make -C lib

make[1]: Entering directory `/root/project/man-db-2.3.10.orig/lib'

make[1]: Nothing to be done for `all'.

make[1]: Leaving directory `/root/project/man-db-2.3.10.orig/lib'

make -C libdb

make[1]: Entering directory `/root/project/man-db-2.3.10.orig/libdb'

gcc -O -DHAVE_CONFIG_H -DNLS -I../include -I.. -I. -I- -c db_store.c -o db_store.o

In file included from db_store.c:44:

../include/manconfig.h:298: parse error before `__extension__'

../include/manconfig.h:298: parse error before `('

make[1]: *** [db_store.o] Error 1

make[1]: Leaving directory `/root/project/man-db-2.3.10.orig/libdb'

make: *** [libdb] Error 2

Has anyone gotten this problem before? Any ideas? :)

Thu, 9 Mar 2000 01:13:16 -0500

From: Kent Franken <>

Subject:

I would really like to see case studies on switching to Linux from other platforms.

Here's our platform and some requirements and questions:

We currently use Windows NT Terminal Server Edition. How hard would it be to go to Linux?

- We have two TSE servers with approximately 30 users each logged in on average. In total, we have about 130 users but it is a manufacturing plant and many people share terminals.

- We use Citrix Metaframe, for Load Balancing and failover. Is there a product for Linux that offers this option?

- We use thin clients (Boundless (www.boundless.com) Windows CE terminals with RDP and ICA protocols) and no X. I have not been happy with the CE terminals. I was wondering whether an X terminal would perform better. You can really see how slow CE is if you just click on the Start button and move the mouse up and down the program list. I had an old PC with not enough RAM, and its ICA client worked much better than the CE ICA client. I just found www.ltsp.org and the X terminals look fantastic (you could do a whole article just on that project).

- Dependability. I have to reboot my TSE servers once a week. Last week a new HP printer driver caused about 40 blue screens of death before we figured out what was going on. Will Linux be better?

- Office productivity software. If we are used to MS Office, what will it be like going to something like star-office?

- Anti-virus programs? Is there an antivirus program to scan mail stores (sendmail POP server)?

- Security. How good is Linux at keeping users honest? With TSE you can delete or overwrite files in the system directories as a user. Can't delete a system file? Just open it in Word and save it and watch us IS guys jump around.

Thu, 09 Mar 2000 19:27:53 +0100

From: Eva Gloria del Riego Eguiluz <>

Subject: HELP, PLEASE!

I have a problem with one partition of my hard disk. Yesterday I installed Red Hat 6.0 and everything was O.K. until I saw that I could not access one of my FAT32 partitions.

In Windows '98, when I click on E: (the bad partition) the message is "One of the devices attached to the system doesn't work" (or something like that; I read the message in Spanish).

I tried to look at the hard disk with Partition Magic 3.0 and the error message is:

"Partition table error #116 found: Partition table Begin and Start inconsistent: the hard disk partition table contains two inconsistent descriptions of the partition's starting sector...."

I want to know whether I have lost all the information in this partition or whether there is a way to get the data back.

The other partitions of the hard drive are O.K., and I can start both Windows and Linux, because the bad partition isn't primary.
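The "two descriptions" in that error message are the two ways a partition table entry records where a partition starts: as a cylinder/head/sector (CHS) triple and as a linear sector number (LBA). The sketch below, in Python, decodes one 16-byte entry of a classic PC partition table and compares the two. It is an illustration only: the geometry constants and the function names are assumptions made for this example, it covers entries in the primary table (entries for logical partitions store their start relative to the extended partition), and it reads nothing from disk.

```python
import struct

HEADS = 255             # assumed BIOS geometry (hypothetical value)
SECTORS_PER_TRACK = 63  # assumed BIOS geometry (hypothetical value)


def decode_entry(entry):
    """Decode the start fields of one 16-byte partition table entry."""
    _status, head, sec_cyl, cyl_low, ptype = struct.unpack_from("<BBBBB", entry, 0)
    lba_start, num_sectors = struct.unpack_from("<II", entry, 8)
    sector = sec_cyl & 0x3F                       # low 6 bits hold the sector
    cylinder = ((sec_cyl & 0xC0) << 2) | cyl_low  # top 2 bits extend the cylinder
    return {
        "type": ptype,
        "chs_start": (cylinder, head, sector),
        "lba_start": lba_start,
        "sectors": num_sectors,
    }


def chs_to_lba(cylinder, head, sector):
    """Convert a CHS triple to a linear sector number for the assumed geometry."""
    return (cylinder * HEADS + head) * SECTORS_PER_TRACK + (sector - 1)


def start_is_consistent(entry):
    """True if the CHS and LBA start fields describe the same sector."""
    info = decode_entry(entry)
    cylinder, head, sector = info["chs_start"]
    if cylinder >= 1023:
        return True  # CHS field is saturated on large disks; nothing to compare
    return chs_to_lba(cylinder, head, sector) == info["lba_start"]
```

A mismatch of this kind does not by itself mean the data is gone; it says the table entry is self-contradictory, which is why copying the table down before changing anything is generally the cautious first step.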

Thank you very much,

Sat, 11 Mar 2000 08:40:07 -0500 (EST)

From: Ahmadullah Asad <>

Subject: a question...

Hi,

I would like to download the PostScript viewer from http://www.medasys-digital-systems.fr/mirror/linux/LG/issue16/gv.html but I cannot connect to this German FTP site. Any help will be appreciated. Also, I am totally ignorant of how to compile and run the source if I do succeed in downloading this file. I would really appreciate it if you could give me some info on that as well.

[Which distribution do you use? It's included in Debian, and it should be included in all the other distributions as well. You can also get the .deb file and convert it to rpm or tgz using the alien program if you have it (in the package "alien"). -Ed.]

Mon, 13 Mar 2000 18:39:52 +0500

From: Choudhry Muhammad Ali <>

Subject: VGA card Problem with Redhat 6.1

hi

I've been running Linux for a few years now, using the Red Hat 6.0 distribution. I'm currently upgrading my computer to Red Hat 6.1. Red Hat 6.1 is running fine, but there is one small problem with my VGA card: 6.1 does not configure it properly. It automatically sets my VGA card to the previous version (SiS 5 series), but my card is from the SiS 6 series.

Does anyone have any ideas as to why this would be happening and how to correct it?

CMA

Tue, 14 Mar 2000 19:07:34 +0100

From: Laurent STEFAN <>

Subject: MIME and mail ?

How do I attach a file (.html, .gif or whatever) to an e-mail with the mail program?

I'm using something like : # ./test.pl | mail someone@somewhere -s"something"

but the result is only a full-text report in the body.

Regards.

Now some good stuff:

I made a bash script that looks like this for the mail process:

exec $PROG | mail [email protected] -s "My subject Mime-Version: 1.0 Content-Type: Text/html "

And it works !

So, why not add a 'boundary=...' with a Multipart/related Content-Type and, while we are at it, a 'Content-Transfer-Encoding: BASE64'?

If you can tell me where there's a BASE64 encoder ... That would be great !
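One way to get the MIME headers, the boundary and the BASE64 encoding without assembling them by hand is to let a scripting language build the message. The following is a minimal sketch in Python using its standard email package; the function name, addresses and file name are placeholders invented for this example, not part of the original script.

```python
from email.message import EmailMessage


def build_message(sender, recipient, subject, html_body, attachment, filename):
    """Build an HTML mail with one GIF attachment (attachment is bytes)."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    # The HTML report becomes the body of the message.
    msg.set_content(html_body, subtype="html")
    # Adding binary data turns the message into a multipart one; the library
    # chooses the boundary and encodes the bytes as base64.
    msg.add_attachment(attachment, maintype="image", subtype="gif", filename=filename)
    return msg


if __name__ == "__main__":
    gif_bytes = b"GIF89a"  # placeholder data; read a real file in practice
    message = build_message(
        "me@example.com", "someone@example.com", "My subject",
        "<p>report</p>", gif_bytes, "plot.gif",
    )
    print(message.as_string())
```

The printed text is a complete message with headers, so it could be handed to a mail transfer program that reads recipients from the headers (for example sendmail -t) rather than to mail -s. For a stand-alone encoder, Python's base64 module offers the same encoding on its own.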

Thu, 22 Jul 1999 15:28:09 +0200

From: Sandeep < >

Subject: Video Card: A Big Problem

Hello there, I am Sandeep from India. I recently bought an Azza mainboard with a built-in sound card (Avance Logic 120) and video card (SiS-530, but the chip is SiS-5595; AGP with shared RAM). There is a very big problem: Linux could not recognise the VRAM on the shared SDRAM module originally allocated by the Award BIOS. The sound card also was not recognised by the new Linux 6.0 version, but version 5.2 of Red Hat recognised it as a Sound Blaster, not as my actual card. As you guys have been doing great work, I thought that you would be able to solve my problem or direct me to those who can. Thanking you; you guys are really doing great work. Bye. My email address is [email protected] (I am writing from a cafe, so please do not reply to any e-mail address other than the one given above ([email protected])).

Tue, 22 Feb 2000 21:53:22 +0100

From: Wim van Oosterhout <>

Subject: ISDN PCI128 Trust

Can anyone tell me how to install my ISDN adaptor? Kind regards, Wim van Oosterhout

Fri, 17 Mar 2000 12:00:59 -0500

From: thesun <>

Subject: Mailbag submission: Help Wanted on Japanese text input

Hi,

I'm new to the Linux Gazette but not new to Linux, and I've had a hell of a time trying to find clear, step-by-step info about how to WRITE Japanese under RedHat Linux 6.1. Part of the problem is that I don't READ Japanese well enough to sift through the Japanese sites, but I've found a program called "dp/NOTE" from OMRONSOFT which almost works--apparently, the program is supported by the Japanese version of RedHat 6.1, but I don't know what RPMs to download to get the thing to run under the English version...or even if it will run at all. Are there fundamental differences between the Japanese and the English versions? Should I set up a dual boot system? Ideally, I'd like to just run English RedHat but have a program I can pull up that will allow easy romaji text input and then convert to hiragana, katakana, or Kanji. There's a nice program by NJStar that does that, but it's (barf!) Windoze95 only.

Any suggestions or help would be greatly appreciated.

Thanks,

Fri, 17 Mar 2000 19:20:34 -0500

From: Clark Ashton Smith <>

Subject: Help with group rights and Netscape Composer

I've searched Deja News, the Linux HOWTOs, and books, and I have not seen this error mentioned. That makes me think it's something on my end, but I can't figure out what it is. I'm hoping someone can help.

I am running RedHat Linux 5.2 and Netscape 4.08.

I created a group called web and made a directory

/usr/local/webauth

I set the group and the SGID bit on that directory

chgrp -v web /usr/local/webauth

chmod -v 2775 /usr/local/webauth

Now for the problem:

Anyone in the web group should be able to save into that directory. With Netscape composer they can only edit files that already are in that directory!?!

If I use the "Save As" option in Netscape Composer to save into that directory I get the following error:

"The file is marked read-only."

The same error occurs using the "Save" option to save a new file to that directory. BUT, if I open a file from that directory in netscape, edit it, and use the "Save" option, it will write over the old file in that directory. I can edit any existing html files created by anyone in the "web" group.

What on earth is going on? The users belong to the web group and they can all create files in /usr/local/webauth via the touch command or emacs. The users all have a umask of 002. The files they create with touch or emacs are all created

-rw-rw-r-- username.web filename

They can use emacs to open and edit each other's files in /usr/local/webauth, but they can't create new files with Netscape Composer! They can only edit existing files and save them to the same filename.

The only way I can get "Save As" and "Save" to create new files in /usr/local/webauth is to set the permissions to

chmod -v 2776 /usr/local/webauth

or

chmod -v 2777 /usr/local/webauth

which defeats the whole point of creating special work groups and protecting the files from being written by anyone not in the group.

If you can, please shed some light on this. Thank you.
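For reference, the three modes mentioned in this letter differ only in the permission bits for "others". The following Python sketch decodes them; the function name is invented for this example, and it describes the permission bits only, not whatever check Netscape Composer itself performs before saving.

```python
import stat


def describe_dir_mode(octal_mode):
    """Summarise a directory mode such as 0o2775."""
    mode = stat.S_IFDIR | octal_mode
    return {
        "listing": stat.filemode(mode),        # the string ls -l would show
        "setgid": bool(mode & stat.S_ISGID),   # new files inherit the group
        # Creating a file needs both write and search (x) permission.
        "group_can_create": bool(mode & stat.S_IWGRP) and bool(mode & stat.S_IXGRP),
        "others_can_create": bool(mode & stat.S_IWOTH) and bool(mode & stat.S_IXOTH),
    }


if __name__ == "__main__":
    for m in (0o2775, 0o2776, 0o2777):
        print(oct(m), describe_dir_mode(m))
```

Going by the bits alone, group members can already create files under 2775, which matches what touch and emacs do in the letter. That suggests the "read-only" complaint comes from how the program tests for writability rather than from the directory permissions themselves, though that remains a guess.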

Mon, 20 Mar 2000 20:05:16 -0000

From: Tony <>

Subject: connecting win98 and Linux

I am relatively new to Linux, so please be patient. Can anyone tell me how to connect my Win98 machine with my Linux server? I used to run Win95 and had no problems, but since moving to Win98, every time I try to browse my network from Network Neighbourhood I am unable to browse the network. I cannot ping the Linux server either. I am sure that I have TCP/IP installed correctly on both machines. Any help anyone can give would be most welcome. Regards, a newbie.

Fri, 24 Mar 2000 16:23:30 +0300

From: Alexandr Redko <>

Subject: DNS for home mail not working

For some time I was following the guidelines of article by JC Pollman and Bill Mote "Mail for the Home Network", Linux Gazette #45 with the aim to build my verySOHO net.

Here is my setup:

Linux Red Hat Cat 6.0

-------------------------------------------------------------

"host.conf"

-------------------------------------------------------------

order hosts,bind

multi on

-------------------------------------------------------------

"nsswitch.conf"

-------------------------------------------------------------

hosts: files dns

-------------------------------------------------------------

"resolv.conf"

-------------------------------------------------------------

search asup

nameserver 10.0.0.5

-------------------------------------------------------------

"named.conf"

-------------------------------------------------------------

options {

directory "/var/named";

forward first;

forwarders {

196.34.38.1;

196.34.38.2;

};

/*

* If there is a firewall between you and nameservers you want

* to talk to, you might need to uncomment the query-source

* directive below. Previous versions of BIND always asked

* questions using port 53, but BIND 8.1 uses an unprivileged

* port by default.

*/

// query-source address * port 53;

};

zone "." {

type hint;

file "db.cache";

};

zone "asup" {

notify no;

type master;

file "db.asup";

};

zone "0.0.10.in-addr.arpa" {

notify no;

type master;

file "db.0.0.10";

};

zone "0.0.127.in-addr.arpa" {

type master;

file "db.127.0.0";

};

-------------------------------------------------------------

"db.asup"

-------------------------------------------------------------

@ IN SOA sasha.asup. redial.asup. (

1 ; Serial

10800 ; Refresh

3600 ; Retry

604800 ; Expire

86400 ) ; Minimum

IN NS sasha

IN MX 10 sasha

sasha IN A 10.0.0.5

sasha IN MX 10 sasha

mail IN A 10.0.0.5

www IN A 10.0.0.5

news IN A 10.0.0.5

localhost IN A 127.0.0.1

asup1 IN A 10.0.0.101

asup1 IN MX 10 sasha

-------------------------------------------------

"db.0.0.10"

-------------------------------------------------

@ IN SOA sasha.asup. redial.asup. (

1 ; Serial

10800 ; Refresh

3600 ; Retry

604800 ; Expire

86400 ) ; Minimum

IN NS sasha.asup.

5 IN PTR sasha.asup.

5 IN PTR www.asup.

5 IN PTR mail.asup.

5 IN PTR news.asup.

101 IN PTR asup1.asup.

----------------------------------------------------------

"db.127.0.0"

----------------------------------------------------------

@ IN SOA sasha.asup. redial.asup. (

1 ; Serial

10800 ; Refresh

3600 ; Retry

604800 ; Expire

86400 ) ; Minimum

IN NS localhost.

1 IN PTR localhost.

----------------------------------------------------------

----------------------

"firewall"

----------------------

:input ACCEPT

:forward REJECT

:output ACCEPT

-A forward -s 10.0.0.0/255.255.255.0 -d 10.0.0.0/255.255.255.0 -j ACCEPT

-A forward -s 10.0.0.0/255.255.255.0 -d 0.0.0.0/0.0.0.0 -j MASQ

----------------------

# echo 1 > /proc/sys/net/ipv4/ip_forward

-----------------------------------

/etc/sysconfig/network

-----------------------------------

NETWORKING=yes

FORWARD_IPV4="yes"

HOSTNAME=sasha.asup

DOMAINNAME=

GATEWAY=""

GATEWAYDEV=""

-----------------------------------

With ppp0 up, route shows:

----------------------------------------------------------------------------

10.0.0.5 * 255.255.255.255 UH 0 0 0 eth0

196.34.38.254 * 255.255.255.255 UH 0 0 0 ppp0

10.0.0.0 * 255.255.255.0 U 0 0 0 eth0

127.0.0.0 * 255.0.0.0 U 0 0 0 lo

default 196.34.38.254 0.0.0.0 UG 0 0 0 ppp0

----------------------------------------------------------------------------

DNS debug when making nslookup for my ISP server:

----------------------------

datagram from [10.0.0.5].1026, fd 22, len 31

req: nlookup(www.tsinet.ru) id 1755 type=1 class=1

req: missed 'www.tsinet.ru' as '' (cname=0)

forw: forw -> [196.34.38.1].53 ds=4 nsid=60421 id=1755 3ms retry 8sec

retry(0x4011e008): expired @ 953043527 (11 secs before now (953043538))

reforw(addr=0 n=0) -> [196.34.38.1].53 ds=4 nsid=2250 id=0 3ms

datagram from [10.0.0.5].1026, fd 22, len 31

req: nlookup(www.tsinet.ru) id 1755 type=1 class=1

req: missed 'www.tsinet.ru' as '' (cname=0)

reforw(addr=0 n=0) -> [196.34.38.2].53 ds=4 nsid=60421 id=1755 3ms

reforw(addr=0 n=0) -> [196.34.38.2].53 ds=4 nsid=2250 id=0 3ms

datagram from [10.0.0.5].1026, fd 22, len 31

req: nlookup(www.tsinet.ru) id 1755 type=1 class=1

req: missed 'www.tsinet.ru' as '' (cname=0)

----------------------------

I'll be very grateful if someone can tell me what I did wrong.

Regards to All

I appreciated the depth to which you went in talking about your environment; however, I'm confused about what your problem is. Can you tell me exactly what isn't working and what you're trying to do, please? I'd love to help!

Thank you for your attention to my petty problem. Sorry to say that I'm in no way closer to its solution than in the beginning. I got two letters (thanks very much) from JC Pollman:

A number of things could be causing the problem:

"db.asup"

-------------------------------------------------------------

@ IN SOA sasha.asup. redial.asup. (

1 ; Serial

10800 ; Refresh

3600 ; Retry

604800 ; Expire

86400 ) ; Minimum

IN NS sasha

IN MX 10 sasha

sasha IN A 10.0.0.5

sasha IN MX 10 sasha

mail IN A 10.0.0.5

www IN A 10.0.0.5

news IN A 10.0.0.5

I know they say otherwise, but try using CNAME, eg:

mail IN CNAME 10.0.0.5

localhost IN A 127.0.0.1

asup1 IN A 10.0.0.101

asup1 IN MX 10 sasha

-------------------------------------------------

"db.0.0.10"

-------------------------------------------------

@ IN SOA sasha.asup. redial.asup. (

1 ; Serial

10800 ; Refresh

3600 ; Retry

604800 ; Expire

86400 ) ; Minimum

IN NS sasha.asup.

5 IN PTR sasha.asup.

you can not use CNAMED names for reverse lookup

5 IN PTR www.asup.

5 IN PTR mail.asup.

5 IN PTR news.asup.

101 IN PTR asup1.asup.

Make those changes and do a named restart and see what happens.

copy this to your rc.local file and reboot:

echo "setting up ipchains"
echo "1" > /proc/sys/net/ipv4/ip_forward
# allow loopback, always
/sbin/ipchains -A input -i lo -j ACCEPT
# this allows all traffic on your internal nets (you trust it, right?)
/sbin/ipchains -A input -s 10.0.0.0/24 -j ACCEPT
# this sets up masquerading
/sbin/ipchains -A forward -s 10.0.0.0/24 -j MASQ
# you need this for ppp and dynamic ip address
echo 1 > /proc/sys/net/ipv4/ip_dynaddr

I did my homework:

---------------

named.conf

---------------

options {

directory "/var/named";

forwarders {

forward.first;

195.34.38.1;

195.34.38.2;

195.34.38.1;

};

/*

* If there is a firewall between you and nameservers you want

* to talk to, you might need to uncomment the query-source

* directive below. Previous versions of BIND always asked

* questions using port 53, but BIND 8.1 uses an unprivileged

* port by default.

*/

query-source address * port 53;

};

//

//

zone "." {

type hint;

file "db.cache";

};

zone "asup" {

notify no;

type master;

file "db.asup";

};

zone "0.0.10.in-addr.arpa" {

notify no;

type master;

file "db.0.0.10";

};

zone "0.0.127.in-addr.arpa" {

type master;

file "db.127.0.0";

};

-------------

db.asup

-------------

@ IN SOA sasha.asup. redial.asup. (

1 ; Serial

10800 ; Refresh

3600 ; Retry

604800 ; Expire

86400 ) ; Minimum

IN NS sasha

IN MX 10 sasha

sasha IN A 10.0.0.5

sasha IN MX 10 sasha

mail IN CNAME 10.0.0.5

www IN CNAME 10.0.0.5

news IN CNAME 10.0.0.5

localhost IN A 127.0.0.1

asup1 IN A 10.0.0.101

asup1 IN MX 10 sasha

-------------------------

db.0.0.10

-------------------------

@ IN SOA sasha.asup. redial.asup. (

1 ; Serial

10800 ; Refresh

3600 ; Retry

604800 ; Expire

86400 ) ; Minimum

IN NS sasha.asup.

5 IN PTR sasha.asup.

101 IN PTR asup1.asup.

-------------------------

firewall

------------------------

:input ACCEPT

:forward ACCEPT

:output ACCEPT

-A input -s 0.0.0.0/0.0.0.0 -d 0.0.0.0/0.0.0.0 -i lo -j ACCEPT

-A input -s 10.0.0.0/255.255.255.0 -d 0.0.0.0/0.0.0.0 -j ACCEPT

-A forward -s 10.0.0.0/255.255.255.0 -d 0.0.0.0/0.0.0.0 -j MASQ

------------------------

network

------------------------

NETWORKING=yes

FORWARD_IPV4="yes"

HOSTNAME=sasha.asup

DOMAINNAME=

GATEWAY=

GATEWAYDEV=

------------------------

I connect to my ISP by issuing " ipup ppp0 " command, and then my luck ends:

Here is excerpt from tcpdump output:

12:39:23.442252 ppp0 > sasha.asup > 195.34.38.1: icmp: echo request
12:39:23.644703 ppp0 < 195.34.38.1 > sasha.asup: icmp: echo reply
..........
12:39:27.434735 ppp0 > sasha.asup > 195.34.38.1: icmp: echo request
12:39:27.574701 ppp0 < 195.34.38.1 > sasha.asup: icmp: echo reply
12:39:28.014857 lo > sasha.asup.1052 > sasha.asup.domain: 30612+ PTR? 10.0.41.198.in-addr.arpa. (42)
12:39:28.014857 lo < sasha.asup.1052 > sasha.asup.domain: 30612+ PTR? 10.0.41.198.in-addr.arpa. (42)
12:39:28.015356 ppp0 > sasha.asup.domain > 195.34.38.1.domain: 37592+ PTR? 10.0.41.198.in-addr.arpa. (42)
............
12:39:29.434740 ppp0 > sasha.asup > 195.34.38.1: icmp: echo request
12:39:29.564708 ppp0 < 195.34.38.1 > sasha.asup: icmp: echo reply
13:26:31.045625 lo > sasha.asup.1075 > sasha.asup.domain: 31874+ PTR? 90.10.8.128.in-addr.arpa. (42)
13:26:31.045625 lo < sasha.asup.1075 > sasha.asup.domain: 31874+ PTR? 90.10.8.128.in-addr.arpa. (42)
13:26:31.046140 if21 > sasha.asup.1071 > 198.41.0.4.domain: 16489+ PTR? 90.10.8.128.in-addr.arpa. (42)
13:26:31.464747 if21 > sasha.asup > 195.34.38.1: icmp: echo request
13:26:31.604706 if21 < 195.34.38.1 > sasha.asup: icmp: echo reply

As I understand it, there is some problem with masquerading, and my packets just don't go any further than my ISP's server.
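One detail in the db.asup file above may be worth a second look. The right-hand side of a CNAME record is a host name, not an address, so a line such as "mail IN CNAME 10.0.0.5" is read by the name server as an alias for a host literally named 10.0.0.5 inside the zone. Assuming that is not what is wanted, either "mail IN CNAME sasha" or the original A records would be the usual forms. The Python sketch below flags such lines; the function name is invented for this example and the parsing is deliberately simplistic.

```python
import ipaddress


def cname_targets_that_are_addresses(zone_text):
    """Return (owner, target) pairs for CNAME records whose target looks like an IP."""
    suspicious = []
    for line in zone_text.splitlines():
        fields = line.split(";", 1)[0].split()  # drop comments, split on whitespace
        if "CNAME" not in fields:
            continue
        idx = fields.index("CNAME")
        if idx == 0 or idx + 1 >= len(fields):
            continue  # no owner name or no target on this line
        target = fields[idx + 1]
        try:
            ipaddress.ip_address(target)
        except ValueError:
            continue  # target is a name, which is what a CNAME expects
        suspicious.append((fields[0], target))
    return suspicious


if __name__ == "__main__":
    sample = "sasha IN A 10.0.0.5\nmail IN CNAME 10.0.0.5\nwww IN CNAME sasha\n"
    for owner, target in cname_targets_that_are_addresses(sample):
        print(f"{owner}: CNAME points at address {target}")
```

This does not explain the forwarding trouble seen in the tcpdump output; it only removes one possible source of confusion from the local zone.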

Regards to All

Fri, 24 Mar 2000 19:13:08 +0200

From: Ivanus Radu <>

Subject: I need an answer, pls help me

Hello!

I am proud to announce this: "I'm a 4 day Linux admin, and i'm doing "fine" :) "

OK. Now to the important problems:

1. In the Net3-4 HOWTO I found this:

"11. Linux for an ISP ?

If you are interested in using Linux for ISP purposes then I recommend you take a look at the Linux ISP homepage for a good list of pointers to information you might need and use."

but the link is broken... Q: What now? (I am interested in using Linux for ISP purposes.)

2. A Winmodem solution for Linux users. If you are lucky enough to have a Win9x system connected to the Linux box through a network card, then do this:

- Install a WinGate-like program such as SyGate 2.0 for Windows on the Win9x box.
- In Linux, set the default gateway to the Win9x box's IP address.

That's all for the moment :)

TNX bye.

Fri, 24 Mar 2000 17:29:16 -0800 (PST)

From: Michael Dupree <>

Subject: HELP!!!!!!!!!!

I need some help; if you can give it, please do. When I am in a chatroom and such on aim, people use booters which create errors. I need a patch for aim that will stop these errors. If you can help, please do.

[What's "aim"? Is it a Linux program? We publish only questions dealing with Linux-related issues. Also, are these "errors" things which crash the browser or are you simply trying to prevent the roommaster from booting you out when maybe s/he has a legitimate reason for doing so?

If you really have a program with a bug in it, we need to know what the program is, who makes it, whether it's standalone or runs with a web browser, under what circumstances the error occurs, what error messages you get, and what kind of computer and version of Linux you have. -Ed.]

Fri, 24 Mar 2000 22:37:50 -0500

From: Walter Gomez <>

Subject: HP682 C jet ink color printer

My HP 682C printer is not working properly. When a print signal is sent, the printer will move a page in and start flashing the yellow light, but not print the file. I have checked everything I could think of with no result. Could you help me? Regards,

Fri, 24 Mar 2000 22:54:58 -0800

From: Taro Fukunaga <>

Subject: Sendmail faster start up!

Hi,

I am having trouble with sendmail. I read an article in the Gazette dated a few? months? ago about setting up sendmail, but I'm still puzzled.

I am running MkLinux R1 (a Red Hat 6.0 implementation) and sendmail takes forever to start up. Taking a cue from the article, I stopped the sendmail daemon, started up pppd, and then restarted sendmail. It started up much faster. I've also noticed that if I don't have pppd up, sendmail tries to ping my other computer (the two are connected by Ethernet and a router). Both machines are at home. The other machine does not have ppp set up yet. So if I don't want to start pppd immediately on boot, what can I do to make sendmail start up faster?
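Slow sendmail start-up is often reported when the machine cannot quickly resolve its own host name, for example when the only configured name server sits behind a ppp link that is down. Whether that is the cause here is an assumption, but it is easy to measure. The Python sketch below times a name lookup; the function name is made up for this example.

```python
import socket
import time


def time_lookup(name):
    """Resolve name and return (address or None, seconds taken)."""
    start = time.monotonic()
    try:
        address = socket.gethostbyname(name)
    except OSError:  # covers resolver failures such as socket.gaierror
        address = None
    return address, time.monotonic() - start


if __name__ == "__main__":
    for name in (socket.gethostname(), "localhost"):
        address, seconds = time_lookup(name)
        print(f"{name}: {address} ({seconds:.2f}s)")
```

If the machine's own name takes many seconds to resolve while ppp is down, listing the full host name in /etc/hosts is a common remedy to try.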

Sat, 25 Mar 2000 12:39:14 +0200

From: Serafim Karfis <>

Subject: Linux as a mail server

I am trying to find instructions on how to use my Linux server as a mail server for my company. I have a registered domain name, a permanent connection to the Internet and an unlimited number of e-mail accounts under my domain name. Please keep in mind that I am a new Linux user, so any instructions have to be detailed for me to understand. Thank you in advance.

Sun, 26 Mar 2000 23:16:30 +0530 (IST)

From: nayantara <>

Subject: insmod device or resource busy

Hi, I'm running 2.2.12.... I wrote a module that goes:

#define MODULE

#include <linux/module.h>

int init_module(void)

{

printk("<1>It worked!\n");

return 0;

}

void cleanup_module(void)

{

printk("<1>All done.\n");

}

Now, I compile this, and when I insmod it, it printk's "It worked!" but then gives me: "could not load module: device or resource busy". What am I missing? What resource is busy? (I looked through the FAQs but didn't find anything, so if I missed it please bear with me.)

Thanks, Deepa

Tue, 28 Mar 2000 15:43:10 +0100


From: Luis Neves <>

Subject: Xircom CE3 10/100 and Red Hat 6.1

Hello,

I have a Toshiba laptop with Red Hat Linux 6.1 installed. I also have a Xircom 10/100 Ethernet adapter. I know that Xircom doesn't provide any drivers for Linux, and the RH 6.1 compatibility list for Ethernet adapters doesn't include Xircom cards. Is there any workaround? How can I get it to work?

Thanks in advance,

Luis Neves.

Tue, 28 Mar 2000 16:16:49 GMT


From: hasan jamal <>

Subject: Re: source code of fsck

I need the source code of "fsck", the file system checker under the /sbin directory. I have searched most of the ftp archives related to Linux and did not find it anywhere. I have the RedHat and SuSE distributions, and I did not find it in either of them. I would be grateful if anybody could give me the source code or point me to the ftp site.

Md. Hasan Jamal Bangladesh

It should be included in your distribution. I know only Debian, so when I type "dpkg -S fsck" it shows me fsck and fsck.ext2 are in the "e2fsprogs" package. (There are other fsck modules for different filesystem types in other packages, including "util-linux".) Rpm (and yast?) do a similar thing but with different command-line options. Find the appropriate command on your system and it will show which package the program comes from. Probably e2fsprogs*.srpm or util-linux*.srpm or a similar file will have the source you want.

I got it in SuSE. Thanks a lot for such a quick reply.
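The package lookup suggested in the reply above can be scripted. The following is a minimal sketch in Python, assuming either `dpkg` or `rpm` is installed. The helper name `owner_query` and its `which` parameter are made up for illustration; only the `dpkg -S` and `rpm -qf` invocations are real commands.

```python
import shutil


def owner_query(path, which=shutil.which):
    """Build the command that asks which package owns `path`.

    `which` is injectable only so the choice can be exercised without
    the real tools installed; by default it searches PATH.
    """
    if which("dpkg"):
        return ["dpkg", "-S", path]   # Debian and derivatives
    if which("rpm"):
        return ["rpm", "-qf", path]   # Red Hat, SuSE and other RPM systems
    return None                       # no known package manager found
```

Passing the result of `owner_query("/sbin/fsck")` to `subprocess.run` prints the owning package; the matching source package is then the one to fetch.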

Wed, 1 Mar 2000 11:45:34 -0600


From: Scott Morizot <>

Subject: Pine and Pico not "open source"?

I just got around to reading the February edition of Linux Gazette. I was more than a little perplexed by the claim in the article about nano that pico and pine weren't "open source".

While it's true that pine and pico aren't under the GPL, neither are many other open source stalwarts like sendmail and apache. Even a quick read of the license http://www.washington.edu/pine/overview/legal.html

makes it clear that the source can be used for any use, even commercial, that it can be modified, and that it can be distributed. I certainly don't see anything that would prevent it from being considered open source.

Oh, and the source is available, of course. Always has been.

And, although you can't get the pico source separately from the entire source tree, you can build just pico or, if your OS is one of the supported ones, download a pico binary for your systems from the unix-bin directory. ftp://ftp.cac.washington.edu/pine/unix-bin/

Not that nano isn't a perfectly good editor and effort. There can't be too many. But keep the facts about pine and pico straight.

Ummm. GPL'ed software is protected under copyright also. So is sendmail. So is apache. All of those licenses are licenses to use copyrighted software. UW's is just another license to use copyrighted software. But all free software protected by a license is copyrighted software and includes some sort of restrictions on its use. GPL software is no less under copyright than software under any other license. In fact, copyright is what allows the GPL to make the restrictions on use that it does. Software that is not under copyright is in the public domain and absolutely no restrictions may be made on its use at all.

I didn't see anything in the legal notice that would constrain the redistribution of binary versions of pico. And, in fact, binary versions of pico are redistributed with most distributions of Linux.

While it's not GPL, I still fail to see any terms in the UW pine license that cause it not to meet the open source definition.

[The license does not allow you to distribute modified binaries of pine. (Hint: all the packages in your Linux distribution are "modified binaries", because they undergo customization to adhere to the distribution's overall standards.) This is why it's not "open source". Pine is not included in Debian. Instead, you install special packages which include the source and diffs and compile it yourself. -Ed.]

Tue, 29 Feb 2000 11:40:06 +0100


From: Linux Gazette <>

Subject: FAQs

The following questions received this month are answered in the Linux Gazette FAQ:

Thu, 2 Mar 2000 17:17:31 -0800


From: Systems Administrator <>

Subject: Duplicate announcement on lg-announce

Some time ago you had trouble with your mailing list (lg-announce). Your latest announcement was received twice, so I am attaching a text file with the headers from the two messages. I hope this will help you to fix the problem.

[The latest round of duplicates was caused by the same problem as the ones last fall--a certain subscriber or their ISP had a misconfigured mail program which sent the message back to the list. This address was in the middle of the Received: lines of all the duplicate message samples we saw.

There are some mailers (Windoze?) which do not honor the envelope-to field of forwarded mail as they should, but instead think they should send it to everybody in the To: header--even though this has already been done. And majordomo's code to detect this sort of loop appears to be broken. While we work on a solution at the software level, we have unsubscribed that address and complained to the user and his/her postmaster.

The two cases involved different users on different continents. So we cannot guarantee it won't happen again, but will continue to unsubscribe addresses as they are detected. -Ed.]
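The kind of loop check mentioned above can be sketched by counting how often the list server appears in a message's Received: lines. This is a minimal illustration in Python, not majordomo's actual code. The host name `lists.example.org` and both function names are hypothetical, and the check assumes it runs after the local mailer has already added its own Received: line.

```python
from email import message_from_string

LIST_HOST = "lists.example.org"  # hypothetical list server name


def passes_through(raw_message, host=LIST_HOST):
    """Count the Received: headers that were stamped by `host`."""
    msg = message_from_string(raw_message)
    stamp = "by " + host.lower()
    return sum(1 for hop in msg.get_all("Received", []) if stamp in hop.lower())


def looks_like_loop(raw_message, host=LIST_HOST):
    """True if the message has already been through the list once before.

    One stamp is normal (the current delivery); two or more mean the
    message left the list and was sent back to it.
    """
    return passes_through(raw_message, host) > 1
```

A message flagged this way could be held for the list owner instead of being redistributed, which also points to the address that bounced it back.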

Fri, 3 Mar 2000 01:38:37 -0800 (PST)


From: Matthew Thompson <>

Subject: Microsoft OS's, their pricing and Linux

Greetings,

Perhaps someone has brought this up before, but I have YAMSCT (Yet another Microsoft conspiracy theory :). Maybe this whole thing about them not being able to combine the NT kernel with the Win9x series of OS's is a ruse.

If they did that, based on the current anti-trust scrutiny, they'd have to lower the price of WinNT/2000/whatever to the price of Win98/ME. They'd never be allowed to force home users to pay the premium price that businesses are now paying for Win2KPro on the desktop.

So as long as they have 2 separate product lines, they can charge basically double for Win2000 that gets sold to businesses. They would completely lose those higher profit margins if they merged the products.

I know I'm preaching to the proverbial choir, here, but it will take Linux to end this. But only when you can have it *all* (I'm typing this from a telnet session to my Debian box from Win98SE, since I want to use all the features of RealPlayer 7, Java, Diablo and Descent 3). I'm hopeful that Mozilla will cure the Java problems under Netscape (does anyone know if this will be the case?), and more games are coming out for Linux all the time, but what about multimedia apps? Is there a Free project out there to fill this gap? I haven't heard anything about Real releasing a fully functional RealPlayer for Linux, especially as a plug-in.

As much as it pains me to say it, right now I'm afraid Linux *is* lacking on the desktop. Here I am, a Linux evangelist (practically a zealot, ask my friends) and I spend more time in Win98 than I do Linux because of the games and internet apps available.

What can we do about this? Is it enough to send emails to companies like Real to get them to release the same software for Linux that they do for Windows? We certainly can't expect MS to release Media Player 6.4 (which *is* an excellent app, btw) for Linux.

Fri, 3 Mar 2000 16:05:32 -0700


From: Jim Hill <>

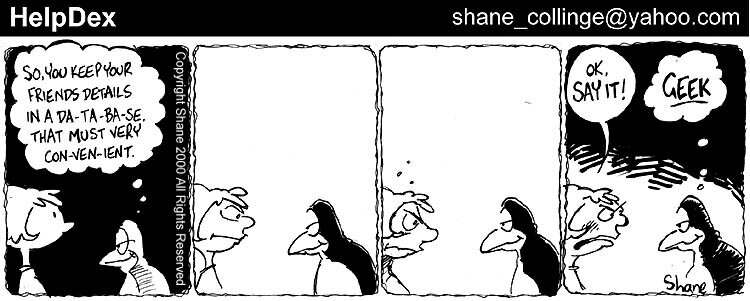

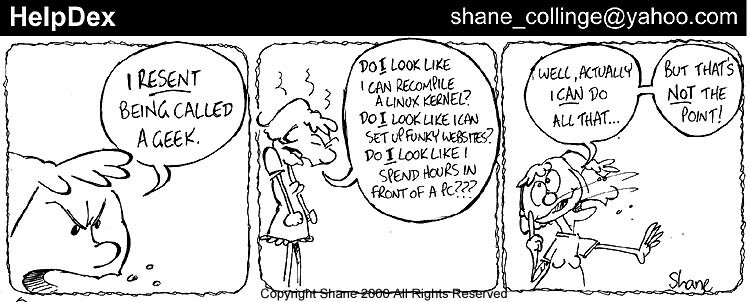

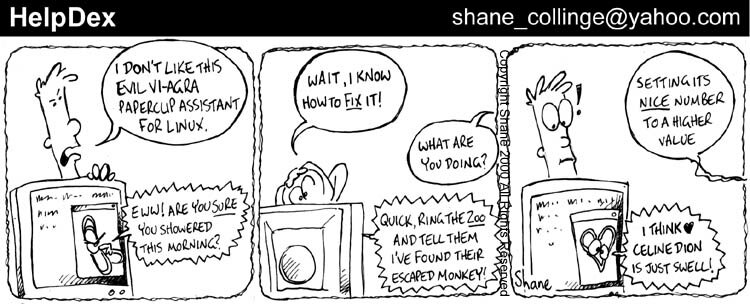

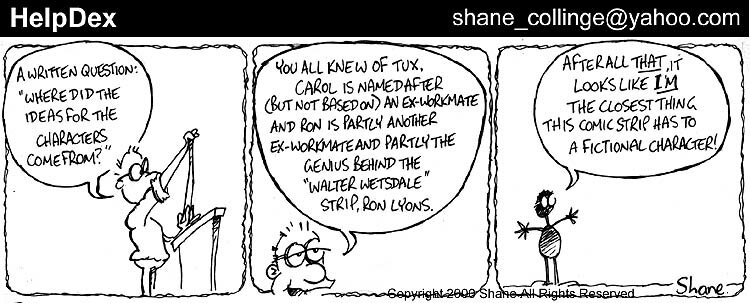

Subject: Drop that comic strip pronto

The guy doing your strip can't draw and isn't funny. One can conceivably get away with a lack of either skill or humor but certainly not both.

Thanks for your 15 seconds.

[Since the Gazette is a do-it-yourself enterprise, if you don't like something, it's up to you to send in something better. :) -Ed.]

Mon, 6 Mar 2000 20:33:03 -0800


From: <>

Subject: RE: Who is Jim Dennis?

From SeanieDude on Tue, 21 Sep 1999:

Why the f*ck is your name listed so damn much in hotbot?

Dear SeanieDude:

The reason the Godfath... err, Mr. Jim Dennis appears so often in a HotBot search is that, as the current head of the Maf... err, a large syndicate, he is being investigated due to a totally unfounded accusation: namely, that everyone who has ever been rude to him, *particularly* via e-mail, seems to have suffered unfortunate accidents.

Should your precarious health NOT fail shortly, for some inexplicable reason, take this as a guide for your future behavior:

NEVER be rude to people you don't know anything about, in e-mail or otherwise.

Hoping that you're still around to take good advice, Consigliori Ben Okopnik

Thu, 9 Mar 2000 13:49:49 -0700


From: Vrenios Alex-P29131 <>

Subject: RE: Article Submission

Mike Orr,

I have three articles on your web site so far and you might be happy to know that LG is an inspiration to me. This won't happen overnight, but I am starting my own web magazine about early computers and their use, at http://www.earlycomputing.com/ . You probably have a good deal of experience with the issues surrounding such a venture, so any words of wisdom would be greatly appreciated. (Mine will be more a labor of love than a money-making enterprise.)

I look forward to contributing to LG again in the future in any event, as the focus of your site and mine are quite different. Thanks in advance.

[I'll help out if I can. I only began editing the Gazette eight months ago, and it's been around for five years. So I can't say much about starting an ezine; just how to keep it going.

I'm wondering whether you can get enough articles about early computing to have a regular "zine", or if just a "site" where you can post articles as you receive or write them would be just as well? I am surprised at how many people are willing to contribute to the Gazette. Every month I used to wonder whether I'd get only a few articles that month, but so far I've always gotten plenty to make a full zine. But that will be more difficult with a more specialized topic, and especially at the beginning when you're not as well known.

Feel free to send me any other questions you have. Maybe we can make an article or section in the Mailbag eventually about starting a zine. Maybe you'll feel like writing an article about your experience setting up an early computer zine, how you're doing it similarly to or differently from the Gazette, etc. Not exactly Linux related, but I'm the editor so I can put in anything I want. Plus I know that there is an interest in early computers among Linux folk: we got an article about emulators recently and another person is writing another article about emulators now. -Ed.]

Sun, 12 Mar 2000 20:52:18 -0500


From: Doc Simont <>

Subject: Gandhi quote

To Whom it may Concern --

I would be interested to know the source of the (great) quote that you use at the top of your web page:

"One cannot unite a community without a newspaper or journal of some kind."

I am one of a number of volunteers who manage to produce a surprisingly high-quality monthly "newspaper" for our small town in NW Connecticut. It might be something we could use, but our standards prevent us from taking attributions without verification. Too often something attributed to Abraham Lincoln turns out to really have come from Dante (or vice-versa) ;-).

Any pointers toward the source would be very much appreciated.

[It's from the movie Gandhi. We don't know whether Gandhi himself said it. -Ed.]

Mon, 13 Mar 2000 09:49:26 -0700 (MST)


From: Michael J. Hammel <>

Subject: correction to your Corel Photo-Paint story

Stephen:

In your C|Net article on Corel's release of Photo-Paint for Linux (http://news.cnet.com/news/0-1003-200-1569948.html), you mentioned Gimp and Adobe as Corel's most likely competitors. This isn't exactly true. First, Gimp has no marketing or business structure. Not even a non-profit. So, although it's a terrific program, it lacks the exposure that a commercial application can get. In the long run, this may hurt it.

(I actually toyed with the idea of trying to form a non-profit or even a for-profit to keep Gimp a strong product, but coming up with a business model for this type of application is difficult. It's not like selling the OS, where service and support can bring in significant income.)

Adobe's move into Linux is limited, so far, to its PDF and word processing tools. It's not, as far as I know, doing anything about porting its graphics or layout applications (though Frame is probably considered a layout tool by many). Corel's not really competing with Adobe in graphics on Linux yet.

Mediascape is about ready to launch its vector-based ArtStream for Linux next month. This will be the first entry into the Linux layout tools market. Not long after that, Deneba (www.denebe.com) is expected to launch their Linux version of Canvas 7, a popular Mac image editing tool with vector, layout, and Web development features. These would be Corel's main competitors in the vector graphics arena. Gimp remains a competitor on the raster-based image editing front, but the lack of prepress support and an organizational structure could eventually become a problem.

Fri, 17 Mar 2000 18:36:30 EST


From: <>

Subject: subscription

Hello, I'm looking for a postal address for you. I do volunteer work with an inmate pen pal site: http://members.xoom.com/crosllinked/index.htm Today I received a request from an inmate wanting any type of subscription to any site that offers news on Linux operating systems. Thank you for your time. Sandy

[Our address is:

Attn: Linux Gazette
Specialized Systems Consultants, Inc.
PO Box 55549
Seattle, WA 98155-0549 USA

...but I'm not sure what that will gain him. The Gazette is not available by mail, unless a reader (you?) would be willing to print it out and send it to him. Otherwise, he would have to read it online or via the FTP files or a CD.

Of course, Linux Journal is available by mail if he wants that. A text order form is at http://www.linuxjournal.com/subscribe/subtext.txt which you can print and have him mail in. -Ed.]

Wed, 22 Mar 2000 08:12:40 +0100


From: Morgan Karlsson <>

Subject: translation of linux gazette articles

Hello, my name is Morgan Karlsson and I'm a new member of the se.linux.org family. I wonder if it's OK to translate your articles into Swedish and publish them on our website, www.se.linux.org? Or even, if we get enough people working on it, to translate every issue of your fantastic magazine into Swedish? What do you think about this?

[Certainly. We welcome translations. When your site is ready, please fill out the form at http://www.linuxgazette.com/mirrors.html so that we can add the site to our mirrors list and people will be able to find you. -Ed.]


April 2000 Linux Journal

The April issue of Linux Journal is on newsstands now. This issue focuses on the Internet.

Linux Journal has articles that appear "Strictly On-Line". Check out the Table of Contents at http://www.linuxjournal.com/issue72/index.html for articles in this issue as well as links to the on-line articles. To subscribe to Linux Journal, go to http://www.linuxjournal.com/subscribe/index.html.

For Subscribers Only: Linux Journal archives are available on-line at http://interactive.linuxjournal.com/

SuSE and Mandrake

NUREMBERG, Germany, PASADENA, USA and METZ, France - 2/14/2000 - SuSE Linux AG, MandrakeSoft and Linbox Inc. are joining forces to develop Linux Network Computing and bring it to the broadest audience. SuSE Linux AG, MandrakeSoft and Linbox Inc. are partnering to develop and include in future versions of Linux distributions the key technologies of the Linbox Network Architecture. All SuSE Linux and MandrakeSoft users will soon be able to set up efficient diskless Network Computing solutions based on Linux.

The Linbox Network Architecture is an open approach to Linux Network Computing based on diskless standard computers on the desktop side, such as the Linbox Net Station, and full featured servers, such as the Linbox Net Server. According to Jean Pierre Laisne', CEO of Linbox Inc., "The Linbox Network Architecture allows users to bring the power of Linux to the desktop at minimal costs while still preserving the user's investments in Windows or MacOS software. Diskless Linbox Net Stations can be set up in a matter of minutes by users with no previous skills in the Linux environment and require no maintenance."

Under the joint partnership, SuSE Linux AG, MandrakeSoft and Linbox will form an open project which will publish the specifications of the Linbox Network Architecture and the required software under open source licensing. Development will be held by the Linbox R&D center in the Lorraine region, France's pioneering region for Linux and Free Software. All Linux users and businesses are welcome to join the LNA open project.

Best

Technology Center Hermia, Tampere, Finland - February 21, 2000

SOT will be releasing their Best Linux operating system to English-speaking users worldwide for the first time. The press conference and publication will take place at CeBIT 2000, Hannover, Germany at 10am, February 24.

"The T-1 beta program was a success. We now have a good reason to expect the same success with the final English release as that we've had in Finland. I am sure that the release will finally dispel the myth of Linux as a server-only operating system, and will show Linux as a real contender for the dominating OS. I hope to see you at the press-release conference at CeBIT 2000" said Santeri Kannisto, CEO, SOT.

The first English version is called Best Linux 2000. SOT will begin shipping boxes after the release. Boxes will be available from well-known Linux-resellers and book stores. Additional information is available at the Best Linux web site, http://www.bestlinux.net

The Best Linux 2000 boxed set includes some new features never seen before in Linux. It includes lifetime technical support and a free update service. Customers are shipped the latest installation CD to guarantee their Best Linux is always up-to-date. A boxed set also includes a 400-page manual, an installation CD, a source code CD, a Linux games CD and a software library CD, providing an easy way even for home users to start using a complete Linux system.

Caldera

Caldera IPO Marks First Linux Disappointment

Mandrake

INDIANAPOLIS - March 27, 2000 - Macmillan USA (http://www.placeforlinux.com) announced Secure Server 7.0 for professional server administrators. Macmillan's new product is a secure Linux web server built within the new Linux-Mandrake(tm) 7.0 operating system.

MaxOS (Alta Terra)

FREDERICTON, NB, March 17 /CNW/ - Mosaic Technologies Corporation and Alta Terra Ventures Corp. have announced an alliance that will see Mosaic's Linux training programs bundled with Alta Terra's MaxOS(TM) Linux operating system.

Bringing Linux to the everyday PC desktop user is a major priority of both Alta Terra and Mosaic. To help Windows users make the transition to Linux, Mosaic, working with India's Sona Valliappa Group, will offer a Linux simulator, which runs in a Microsoft Windows(TM) environment. This allows users to go through the steps of installing and setting up Linux, without leaving Windows. Mosaic will follow up with training programs to help users with tasks within Linux itself.

Mosaic Technologies Corporation

Alta Terra Ventures Corp.

SuSE

CeBIT, Hannover, Germany (February 22, 2000) - SCO and SuSE Linux AG today announced an agreement to offer SCO Professional Services to SuSE customers worldwide. The new offering, along with SCO's global reach, will help extend SuSE's growth into new markets. The agreement marks the first time SuSE Linux AG has partnered for professional services on a global level.

The SCO Professional Services offerings are designed to help SuSE's customers and resellers to get started with planning, installation, configuration, and deployment of their new SuSE Linux systems.

TurboLinux

Santa Cruz, California, March 13, 2000 - Lutris Technologies Inc. and TurboLinux Inc. today announced a joint effort to certify and distribute the Enhydra Java/XML application server for the TurboLinux operating system. The partnership creates a scalable Open Source foundation for enterprise e-business application development and deployment.

Lutris and TurboLinux will work together to promote the Open Source e-business platform. Enhydra will be distributed on the TurboLinux companion CD and listed in the TurboLinux Application Directory as a premier development environment. Lutris will provide support, training and professional services for Enhydra applications running on TurboLinux.

Upcoming conferences & events

| Event | Dates | Location | Website |
|---|---|---|---|
| Colorado Linux Info Quest | April 1, 2000 | Denver, CO | thecliq.org |
| Corel Linux Roadshow 2000 | April 3-7, 2000 | Various Locations | www.corel.com/roadshow/index.htm |
| Montreal Linux Expo | April 10-12, 2000 | Montreal, Canada | www.skyevents.com/EN/ |
| Spring COMDEX | April 17-20, 2000 | Chicago, IL | www.zdevents.com/comdex |
| HPC Linux 2000: Workshop on High-Performance Computing with Linux Platforms (in conjunction with HPC-ASIA 2000: The Fourth International Conference/Exhibition on High Performance Computing in Asia-Pacific Region) | May 14-17, 2000 | Beijing, China | www.csis.hku.hk/~clwang/HPCLinux2000.html |
| Linux Canada | May 15-18, 2000 | Toronto, Canada | www.linuxcanadaexpo.com |
| Converge 2000 | May 17-18, 2000 | Alberta, Canada | www.converge2000.com |
| SANE 2000: 2nd International SANE (System Administration and Networking) Conference | May 22-25, 2000 | MECC, Maastricht, The Netherlands | www.nluug.nl/events/sane2000/index.html |
| ISPCON | May 23-25, 2000 | Orlando, FL | www.ispcon.internet.com |
| Strictly Business Expo | June 7-9, 2000 | Minneapolis, MN | www.strictly-business.com |
| USENIX | June 19-23, 2000 | San Diego, CA | www.usenix.org |
| LinuxFest | June 20-24, 2000 | Kansas City, KS | www.linuxfest.com |
| PC Expo | June 27-29, 2000 | New York, NY | www.pcexpo.com |
| LinuxConference | June 27-28, 2000 | Zürich, Switzerland | www.linux-conference.ch |

Winners of Design Competition to Speak at Open Source Convention

February 14th -- Software Carpentry is pleased to announce that O'Reilly & Associates has invited the winners in each category of its design competition to present their work at the 2nd Annual Open Source Software Convention in Monterey, CA, July 17-20, 2000.

So far, 27 individuals and groups have indicated that they will be submitting a total of 39 designs. The deadline for first-round entries is March 31, 2000; for more information, see the Software Carpentry web site at: http://www.software-carpentry.com

RE: LinkUall.com Intro

Linux Support for Trisignal's Phantom Embedded Modem

HANNOVER, GERMANY, Feb. 28, 2000 -- TRISIGNAL Communications, a Division of Eicon Technology Corporation, today announced the availability of its Phantom (TM) Embedded Modem reference design for the Linux operating system.

This new, off-the-shelf, pre-ported design will be immediately available for license to OEMs, allowing them to quickly bring to market any product running Linux and requiring V.90 modem connectivity, such as Internet appliances. The embedded Phantom design comes with all the necessary modem code and engineering support required for final integration by the OEM. Manufacturers that license this embedded modem design can benefit from TRISIGNAL's core software code, which has an installed base approaching 20 million units.

http://www.trisignal.com.

Computer I/O announces the Easy I/O Server and partnership with MonteVista Software

Chicago, IL - February 29, 2000 -- Computer I/O Corporation, a provider of software and services that simplify network access to live and real-time data streams, today announced the release of the Easy I/O Server, a Linux-based network I/O server. The Easy I/O Server is a flexible network I/O appliance that leverages the versatility of Linux and Computer I/O's new middleware technology to bring cost-effective, real-time networked data streaming capabilities to embedded and enterprise application developers.

The Easy I/O Server delivers an entirely new approach to the creation and remote access of I/O servers, peripherals, and appliances for telecommunications, multi-media, or any other application utilizing real-time data streams. Its I/O middleware technology provides unified interfaces for applications, network access, and real-time data collection and transfer. Computer I/O designed Easy I/O to allow application developers to quickly deploy and access a streaming data server without the need to understand low-level real-time and network programming issues. With its browser-based hardware configuration interface and universal application programming interfaces, the Easy I/O Server helps reduce software development and maintenance costs, and cuts the time to market for new embedded and enterprise network data applications.

www.computerio.com

A new e-commerce server, and how the company uses Linux

Internet Technologies, Inc. (Inttek (TM)) has developed a highly effective E-Commerce System with the ability to work directly with Hell's Kitchen Systems CCVS.

For more information, visit our site: www.penguincommerce.com. Visit www.megcor.com for a working commercial example. The megcor.com site sold almost $1000.00 in golf equipment the first weekend it went live. More examples will be coming soon.

The System is hosted on a remote Application Server -- The only required on-site hardware/software is a PC and Web Browser. No knowledge of HTML is needed.

Technical Overview:

Penguin Commerce uses proprietary Software Developed by Internet Technologies, Inc.

Penguin Commerce is based on the following:

Red Hat Linux (currently running on 5.2)

Red Hat Secure Server -- (based on Red Hat Secure Server with modification)

Our secure web servers run a customized version of the Red Hat Secure Server release. We continually upgrade and improve these servers for maximum security.

MySQL Database Engine

PHP Version 4 (including custom Inttek extensions)

Various types of Open Source Image processing software

PGP Encryption Technology

PGP is used throughout our E-Commerce solutions to provide security for sensitive data. For example, before customer credit card numbers are stored in the MySQL database, they are encrypted to ensure privacy in the event that the data transmission to the MySQL server is compromised.

Hell's Kitchen Systems CCVS (including custom network connection program)

HKS CCVS software runs on a separate server, connected to the secure web server via a private Ethernet connection utilizing non-routed IP addresses. This, plus aggressive packet filtering, guarantees that the CCVS server is available for connections from the secure server only. All access to the CCVS server is controlled to prevent unauthorized access to any credit card numbers stored in plain text form.

Connections to the CCVS software are made through the standard Linux inetd service, which calls an intermediary program to translate commands and output between the secure web server and the CCVS software. This intermediate software, written in C with the CCVS C language API, is designed as an extra layer of abstraction, insulating the web programmer from the details of credit card processing, and generalizing the credit card processing interface. This will allow us to present a consistent interface to web designers and programmers, regardless of the details of our credit card processing implementation. Our intent was to keep the credit card intelligence on the CCVS server, not the web server.
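The intermediary layer described above can be pictured with a short sketch. The original was written in C against the CCVS API; the version below is Python purely for illustration. The `AUTH` command, the reply strings and the `backend` callable are all hypothetical stand-ins, not part of CCVS or of Inttek's software. Under inetd, the service's standard input and output are the network connection, so the loop only has to read lines and write replies.

```python
import sys


def handle_line(line, backend):
    """Translate one generic request line into a backend call.

    Expected form (hypothetical): AUTH <invoice> <amount-in-cents>
    """
    parts = line.split()
    if len(parts) != 3 or parts[0] != "AUTH":
        return "ERR bad request"
    invoice, amount = parts[1], parts[2]
    if not amount.isdigit():
        return "ERR bad amount"
    # `backend` stands in for the real card-processing call.
    approved = backend(invoice, int(amount))
    return ("OK " if approved else "DECLINED ") + invoice


def serve(backend, infile=sys.stdin, outfile=sys.stdout):
    """Answer requests line by line, as a process started by inetd would."""
    for line in infile:
        outfile.write(handle_line(line, backend) + "\n")
        outfile.flush()
```

Keeping the request format this small is what lets the web side stay unaware of how cards are actually processed: only `backend` would change if the processing software were replaced.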

An overview of the physical layout is as follows:

(Internet)

|

General Firewall (running RedHat Linux)

|

Apache Web Server running PHP4 and MySql (running RedHat Linux)

|

Secure Server Running the RedHat Secure Server Distribution

|

(Private Ethernet Segment)

|

HKS CCVS Server Running CCVS and custom CCVS connection software (running RedHat Linux)

|

Modem with phone line to connect to Vendor's Merchant Account (For security purposes, the HKS CCVS Server will not accept incoming phone calls via the modem)

Milestone achievements of Inttek or Inttek engineers that involved Linux:

Qarbon.com and SuSE Inc. Debuts Linux Viewlet Project

Qarbon.com, the originator of Viewlets, and SuSE Inc, a leading international Linux distribution, announced the launch of Qarbon.com's "The Linux Viewlet Project." Viewlets are a Web innovation that changes help files and FAQs into vivid and dramatic "How To" demonstrations that "show" rather than "tell" a user how to perform a specific computing task. The introduction of Viewlets to the Linux community allows Linux developers and users from around the world to create, use and exchange Viewlets, which answer thousands of questions. Keeping with the Linux spirit, Viewlets are free to everyone on the net. Qarbon.com's business model includes a free Viewlet development tool, advertising-based revenue sharing for Viewlet authors and participating web sites, and a Viewlet syndication process designed to promote the use of Viewlets across the web.

To see some of the first Viewlets built around SuSE's Linux 6.3, go to www.teach2earn.com/linux/. Viewlets are expected to be a boon to increasing the use of Linux as users and developers see how Viewlets solve problems, reduce help desk calls and facilitate installation and use.

MyFreeDesk: coming soon to a Jordanian cybercafe near you

MINNEAPOLIS - March 15, 2000 - MyFreeDesk.com announced today that Quality Internet of Jordan, Inc., a chain of Internet cafes in the Middle East, will offer ad-free versions of the MyFreeDesk.com web-based office suite on its computers beginning April 1, 2000. Quality Internet customers who traditionally visited the cafes to browse the Internet or play online games will now have the added benefit of a complete office suite of personal computer applications. MyFreeDesk includes a fully featured word processor, spreadsheet, presentation program, database, email manager and Web page editor.

Quality Internet charges its customers an hourly rate to use its computers. MyFreeDesk will receive 50 percent of Quality Internet's proceeds from customers who pay for the ad-free version of MyFreeDesk. Quality Internet expects to open 12 Internet cafes throughout the country of Jordan during the year 2000.

Linux directories from MyHelpDesk

MyHelpdesk.com unveiled directories of technical support and productivity information for 20 distributions of the Linux operating system and some of the most popular Linux applications. The free directories will include help for Linux Web browsers, graphical desktop environments and Linux utilities and add-ons.

Each one of the 20 directories contains the Internet's best resources on everything from searchable knowledge bases and FAQs, to upgrade information and bug reports, to training and tutorials. The 20 directories cover the most popular distributions of the Linux operating system, including Caldera Open Linux, Corel Linux, Debian, Linux Mandrake, MK Linux, Red Hat, Slackware, SuSE and WinLinux 2000.

Sair Linux and GNU certification news

Sair's complete Linux and GNU Certified Administrator (LCA) level of exams are now available worldwide and our self-study guide for Linux & GNU Installation and Configuration has sold close to 40,000 copies. www.linuxcertification.com

LinuxMall: LPI certification

DENVER - LinuxMall.com is urging the adoption of certification standards developed by the Linux Professional Institute. The Linux Professional Institute (LPI) is an international non-profit organization dedicated to establishing professional vendor-neutral certification for the Linux Operating System.

Mark Bolzern, President of LinuxMall.com, is a member of the LPI Advisory Council and a sponsor of the organization. LinuxMall.com joins the company of other industry leaders like IBM, Caldera Systems, and Hewlett-Packard in supporting LPI's mission to certify the talent and hard work of Linux professionals throughout the world.

The first exam for LPI certification was launched in January 2000, offering an incentive program of Linux-related utilities to participants. LinuxMall.com has donated over a hundred incentive items-including t-shirts and Tux the penguin mascots-to participants who have completed the initial phase of testing. In addition, LinuxMall.com provided the fulfillment and distribution of all prizes donated by other LPI sponsors. "Because of the neutrality of LPI certification, businesses will gain a higher level of confidence in the abilities of the professionals they hire," adds Bolzern. "It's far more credible than certification standards established by a single company, such as the MCSE standard."

LinuxMall: Dice.com job searches

DENVER - LinuxMall.com announces an agreement with EarthWeb's Dice.com that will allow customized Linux job searches directly from the site by simply placing Linux in the job search string. LinuxMall.com will continue to enhance and improve job-related information such as training and education within the LinuxMall.com site.

Under terms of the agreement, LinuxMall.com becomes part of Dice.com's Custom Search Network, which is a targeted group of sites that use the Dice.com job search engine to power their job areas. LinuxMall.com becomes the 16th site to display Dice.com listings on their Web site through Dice.com's Custom Search Network, which includes Red Hat.com, Girl Geeks.com and UserFriendly.org.

LinuxMall: Penguin Power Playing Cards

Tux demonstrates once again that he's playing with a full deck and holding all the cards.

For those late nights at the office, relaxing at home with family and friends, or the perfect gift for the Joker who has everything (hmmm...), LinuxMall.com proudly presents Penguin Power and LinuxMall.com playing cards!

Tux appears in formal dress on face cards, befitting a Linux King, Queen or Jack. The Joker, however, may be in need of a decent haircut. But they're all waiting to be dealt in to your favorite game and fit neatly up most regular-sized sleeves.

These playing cards are just the latest addition to LinuxMall.com's vast array of Linux goodies. Be sure to check out the entire site; LinuxMall.com has everything from beach towels and buttons to bumper stickers and "Born to Frag" T-shirts.

http://www.linuxmall.com/shop/01840

Linux Links

SiteReview.org is a place where websurfers review and rate web sites. Go post some Linux reviews, and say something nice about Linux Gazette. :)

http://joydesk.com is groupware that provides web-based email, calendar, address book and task list services for web sites. Prominent customers include FreeI.net and www.webmail.ca.freei.net.

IBM Unveils Linux-Based Supercomputer

An interview with MontaVista founder Jim Ready

Why Linux won't fragment like UNIX did

Coming soon, to a car near you: Linux-based Internet radios

Linuxcare Establishes Asian Operations

www.destinationlinux.com encompasses everything related to Linux, from games, jokes, contests to Linux information (products, news, training, etc.).

On the FirstLinux site:

Neosystem (France) provides turnkey application servers, training and consulting for Linux.

http://www.balista.com/njp/linux.htm is a personal site by Nicholas Jordan, containing tips, links and advocacy.

LinPeople is the Linux Internet Support Cooperative, a system of free technical support on IRC. The channel is #LinPeople on irc.linpeople.org:8001.

KDevelop 1.1final

We are proud to announce the 1.1final version of KDevelop (http://www.kdevelop.org). This version contains several new features and many bugfixes. It is intended to be the last release for KDE 1.1.2. We now want to concentrate our effort on KDevelop 2.x (which will work on KDE 2.x).

Summary of changes (between 1.0final and 1.1final):

Please see http://www.kdevelop.org for further information (requirements and download addresses) and http://fara.cs.uni-potsdam.de/~smeier/www/pressrelease1.1.txt for the official press release.

PROGRESS SonicMQ ADDS SUPPORT FOR LINUX

NEW YORK-iEC 2000-February 29, 2000-Progress Software Corporation today announced the Developer Edition of its award-winning Progress(r) SonicMQ(tm) Internet messaging server will support the Linux operating system.

"We really wanted to try running SonicMQ on Linux," said Michael Quattlebaum, director of R&D at ChanneLinx.com, a provider of complete e-commerce solutions by linking industries into an interoperable digital marketplace. "I installed it on my Linux server, had it up and running in no time and it worked like a dream. Because SonicMQ is 100% Java, it can run with little or no configuration changes on any platform with a supported JVM. SonicMQ has been great to work with -- a clean implementation of the JMS specification with the added tools needed to make it practical."

SonicMQ is the first-and to date the only-standalone messaging server available from a major software vendor based on Sun's specification for Java-based messaging, Java Message Service (JMS). By providing a standards-based reliable and scalable messaging infrastructure for Internet application interoperation, together with key standards beyond the JMS specification such as eXtensible Markup Language (XML), SonicMQ ensures business-to-business transactions are successfully completed.

www.progress.com

Other software

Garlic is a free molecular visualization program, for viewing proteins and DNA.

Mahogany 5.0 e-mail and news client for X11 (GNOME or KDE).

Opera Software has released a tech preview (that means alpha) version of their web browser Opera.

Java products that run on Linux:

Greetings from Jim Dennis

Hmm. I had a pretty darn good blurb written up about cool new stuff in 2.4 (still in the pre phase right now) but when I came back to my chair after getting coffee, my cat had taken over the chair and was sitting there, purring innocently.

As I lack the time to do it over (again!) here you go. See you next month.

More 2¢ Tips!

2 Cent Trick: handy dictionary

A writer should never forgo looking up a word in the dictionary because it's too much effort. Here's a way to have a dictionary at your fingertips if you're connected to the Internet: Make a shell script 'dict' that does

lynx "http://www.m-w.com/cgi-bin/dictionary?book=Dictionary&va=$*"

Now the shell command

dict quotidian

brings up a definition of "quotidian".

I use Lynx instead of wget because the dictionary page has links on it I might want to follow (such as alternate spellings and synonyms). I use Lynx instead of something graphical because it is fast.
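
One wrinkle with the one-liner above: `$*` puts a literal space into the URL when you look up more than one word. Here is a minimal sketch of a slightly more defensive version, written as shell functions you could paste into a startup file. The helper name `dict_url` is made up for this example, and joining words with `+` assumes the dictionary site accepts the usual form encoding for spaces; the URL itself is the one from the tip.

```shell
#!/bin/sh
# Sketch only: dict_url is a hypothetical helper, not part of the original tip.

# Build the lookup URL. Words are joined with '+' (assumed to be accepted
# by the site as an encoded space) so a query like "ad hoc" stays one URL.
dict_url() {
    query=$(printf '%s' "$*" | tr ' ' '+')
    printf 'http://www.m-w.com/cgi-bin/dictionary?book=Dictionary&va=%s\n' "$query"
}

# Open the definition in Lynx; complain if no word was given.
dict() {
    if [ "$#" -eq 0 ]; then
        echo "usage: dict word [word ...]" >&2
        return 1
    fi
    lynx "$(dict_url "$@")"
}
```

With these defined, `dict quotidian` behaves like the original script, and `dict ad hoc` sends both words as a single query.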

faq_builder.pl script

Everybody who is running a software project needs a FAQ to clarify questions about the project and to show newbies how to run the software. Writing FAQs can be a time-consuming process without much fun.

Now here comes a little Perl script which transforms simple ASCII input into HTML output which is perfect for FAQs (Frequently Asked Questions). I'm using this script on a daily basis and it is really nice and saves a lot of time. Check out http://leb.net/blinux/blinux-faq.html for results.

The attachment faq_builder.txt is the ASCII input used to produce faq_builder.html with the faq_builder.pl script.

'faq_builder.pl faq_builder.txt > faq_builder.html'

does the trick. The file faq_builder.html describes how to use faq_builder.pl.

faq_builder.pl faq_builder.html faq_builder.txt

winmodems

Well, I'm a Linux newbie. Been in DP for 25 years and wanted something better. I'm running SuSE 6.3 and having fun. Really close to wiping WINDOZE from my hard disk. I've really enjoyed your site and have found many answers to the many questions that have been staring me in the face. Keep up the good work.

Read another article about winmodems being dead wood as far as Linux is concerned. I got what I thought was a great deal at Best Buy: a 56k modem for 9.95. Of course I should have known it had to be a winmodem. But I stumbled on the site linmodems.org. There are a lot of people working on this issue. In fact LUCENT has provided a binary for their LT MODEMS. Well, I downloaded that guy and I'm running great on my winmodem. There are other drivers available for other modems. Some are workable and some are still in development. I believe it's worth taking a look and spreading the word. You can reach a lot more people than I can.

Once again great site and thank you for all the helpful hints. I'll continue to be a steady visitor and a Linux advocate.

Fantastic book on linux - available for free both on/offline!

Hi!

When I browse through the 2 cent tips, I see a lot of general Sysadmin/bash questions that could be answered by a book called "An Introduction to Linux Systems Administration" - written by David Jones and Bruce Jamieson.

You can check it out at www.infocom.cqu.edu.au/Units/aut99/85321

It's available both on-line and as a downloadable PostScript file. Perhaps it's also available in PDF.

It's a great book, and a great read!

ANSWER: Re: help in printing numbered pages!

I usually print a lot of documentation. One thing I would like is for my print jobs to have numbered pages, so that at the bottom of each page we could see "page 1/xx", etc. I have looked for a while for info on how to set this up, but could not find any. The printtool just doesn't do it. Maybe I should create a filter, but what commands must I use to make this happen?

It depends on the software you used to create the file. If it is a plain text file, you can use "pr" to print it with page numbers in the header:

pr -f -l 55 somefile.txt | lpr
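
Note that pr puts the page number in the header, not in a "page 1/xx" footer. For plain text, one possible workaround is a small filter of your own. The following is only a sketch: the function name `number_pages` is invented for this example, it does its own simple pagination with awk instead of using pr, and it assumes your printer starts a new page on a form feed character.

```shell
#!/bin/sh
# Sketch only: number_pages is a hypothetical helper, not a standard tool.
# usage: number_pages FILE [LINES_PER_PAGE]
# Prints FILE with a "page N/TOTAL" footer after every LINES_PER_PAGE lines.
number_pages() {
    file=$1
    per_page=${2:-55}    # text lines per page; leave room for the footer
    awk -v n="$per_page" '
        { line[NR] = $0 }                       # remember every line
        END {
            total = int((NR + n - 1) / n)       # pages, rounded up
            for (i = 1; i <= NR; i++) {
                print line[i]
                if (i % n == 0 || i == NR) {    # end of a page
                    page = int((i + n - 1) / n)
                    printf "\npage %d/%d\n", page, total
                    if (i < NR) printf "\f"     # form feed between pages
                }
            }
        }' "$file"
}
```

You would then print with something like `number_pages somefile.txt 55 | lpr`. The footer follows the last line of text on each page, so on a short final page it will not sit at the very bottom of the sheet.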

If the file is HTML, there is a utility html2ps that will do what you need. It's available at http://www.tdb.uu.se/~jan/html2ps.html. It converts HTML to PostScript with an option for page numbers and other things.

If you are using a word processor, it may have an option to include page numbers. Let us know what you are trying to print and we may be able to give better help.

See emacs *Tools | Print | Postscript Print Buffer* command... It does exactly what you want!

[ ]s - J. A. Gaeta Mendes Greetings from Brazil!

'man pr'

For fancier output with various bells and whistles

'man nenscript'

(plus maybe a driver script, or two, to get your customizations).

Michal

Try the 'mpage' command.