
TWDT 1 (gzipped text file) and TWDT 2 (HTML file) are files containing the entire issue: one in text format, one in HTML. They are provided strictly as a way to save the contents as one file for later printing in the format of your choice; there is no guarantee of working links in the HTML version.

This page maintained by the Editor of Linux Gazette, http://www.linuxgazette.com/. Copyright © 1996-1999 Specialized Systems Consultants, Inc.

This FAQ is updated at the end of every month. Because it is a new feature, it will be changing significantly over the next few months.

These are the most Frequently Asked Questions in the LG Mailbag. With this FAQ, I hope to save all our fingers from a little bit of typing, or at least allow all that effort to go into something No (Wo)man Has Ever Typed Before.

Other archive formats. We need to keep disk space on the FTP site at a minimum for the sake of the mirrors. Also, the Editor rebels at the thought of the additional hand labor involved in maintaining more formats. Therefore, we have chosen the formats required by the majority of Gazette readers. Anybody is free to maintain the Gazette in another format if they wish, and if it is available publicly, I'll consider listing it on the mirrors page.

Zip, the compression format most common under Windows. If your unzipping program doesn't understand the *.tar.gz format, get Winzip at www.winzip.com.

Macintosh formats. (I haven't had a Mac since I sold my Mac Classic because Linux wouldn't run on it. If anybody has any suggestions for Mac users, I'll put them here.)

Other printable formats.

E-mail. The Gazette is too big to send via e-mail. Issue #44 is 754 KB; the largest issue (#34) was 2.7 MB. Even the text-only version of #44 is 146 KB compressed, 413 KB uncompressed. If anybody wishes to distribute the text version via e-mail, be my guest. There is an announcement mailing list where I announce each issue; to subscribe, send e-mail with "subscribe" in the message body. Or read the announcement on comp.os.linux.announce.

On paper. I know of no companies offering printed copies of the Gazette.

Yes, yes, yes and yes. See the mirrors page. Be sure to check all the countries where your language is spoken; e.g., France and Canada for French, Russia and Ukraine for Russian.

You're probably looking at an unmaintained mirror. Check the home site to see what the current issue is, then go to the mirrors page on the home site to find a more up-to-date mirror.

If a mirror is seriously out of date, please let us know.

Use the Linux Gazette search engine. A link to it is on the Front Page, in the middle of the page. Be aware this engine has some limitations, which are listed on the search page under the search form.

Use the Index of Articles. A link to it is on the Front Page, at the bottom of the issues links, called "Index of All Issues". All the Tables of Contents are concatenated here onto one page. Use your browser's "Find in Page" dialog to find keywords in the title or author's names.

There is a separate Answer Guy Index, listing all the questions that have been answered by the Answer Guy. However, they are not sorted by subject at this time, so you will also want to use the "Find in Page" dialog to search this listing for keywords.

The Linux Gazette is dependent on Readers Like You for its articles. Although we cannot offer financial compensation (this is a volunteer effort, after all), you will earn the gratitude of Linuxers all over the world, and possibly an enhanced reputation for yourself and your company as well.

New authors are always welcome. E-mail a short description of your proposed article to the Editor, who will confirm whether it's compatible with the Gazette and whether we need articles on that topic. Or, if you've already finished the article, just e-mail the article or its URL.

If you wish to write an ongoing series, please e-mail a note describing the topic and scope of the series, and a list of possible topics for the first few articles.

The following types of articles are always welcome:

We have all levels of readers, from newbies to gurus, so articles aiming at any level are fine. If you see an article that is too technical or not detailed enough for your taste, feel free to submit another article that fills the gaps.

Articles not accepted include one-sided product reviews that are basically advertisements. Mentioning your company is fine, but please write your article from the viewpoint of a Linux user rather than as a company spokesperson.

If your piece is essentially a press release or an announcement of a new product or service, submit it as a News Bytes item rather than as an article. Better yet, submit a URL and a 1-2 paragraph summary (free of unnecessary marketoid verbiage, please) rather than a press release, because you can write a better summary about your product than the Editor can.

Articles not specifically about Linux are generally not accepted, although an article about free/open-source software in general may occasionally be published on a case-by-case basis.

Articles may be of whatever length necessary. Generally, our articles are 2-15 screenfuls. Please use standard, simple HTML that can be viewed on a wide variety of browsers. Graphics are accepted, but keep them minimal for the sake of readers who pay by the minute for on-line time. Don't bother with fancy headers and footers; the Editor chops these off and adds the standard Gazette header and footer instead. If your article has long program listings accompanying it, please submit those as separate text files. Please submit a 3-4 line description of yourself for the Author Info section on the Back Page. Once you submit this, it will be reused for all your subsequent articles unless you send in an update.

Once a month, the Editor sends an announcement to all regular and recent authors, giving the deadline for the next issue. Issues are usually published on the last working day of the month; the deadline is seven days before this. If you need a deadline extension into the following week, e-mail the Editor. But don't stress out about deadlines; we're here to have fun. If your article misses the deadline, it will be published in the following issue.
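As a rough illustration of the schedule described above, the following sketch computes the last working day of a month and the deadline seven days before it. It assumes "working day" means Monday through Friday and ignores public holidays; the function names are made up for this example, and actual publication dates may differ.

```python
import calendar
from datetime import date, timedelta


def last_working_day(year: int, month: int) -> date:
    """Return the last Monday-to-Friday day of the month (holidays ignored)."""
    last_day = calendar.monthrange(year, month)[1]
    day = date(year, month, last_day)
    # weekday(): Monday is 0, Saturday is 5, Sunday is 6
    while day.weekday() >= 5:
        day -= timedelta(days=1)
    return day


def article_deadline(year: int, month: int) -> date:
    """Deadline is seven days before the (assumed) publication day."""
    return last_working_day(year, month) - timedelta(days=7)


if __name__ == "__main__":
    print("publication:", last_working_day(1999, 10))
    print("deadline:   ", article_deadline(1999, 10))
```

For October 1999 the month ends on a Sunday, so the sketch steps back to the preceding Friday before subtracting the seven days.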

Authors retain the copyright on their articles, but distribution of the Gazette is essentially unrestricted: it is published on web sites and FTP servers, included in some Linux distributions and commercial CD-ROMs, etc.

Thank you for your interest. We look forward to hearing from you.

Certainly. The Gazette is freely redistributable. You can copy it, give it away, sell it, translate it into another language, whatever you wish. Just keep the copyright notices attached to the articles, since each article is copyright by its author. We request that you provide a link back to www.linuxgazette.com.

If your copy is publicly available, we would like to list it on our mirrors page, especially if it's a foreign language translation. Use the submission form at the bottom of the page to tell us about your site. This is also the most effective way to help Gazette readers find you.

To recognize and give thanks to our sponsors, we display their logo.

If you would like more information about sponsoring the Linux Gazette, please e-mail us.

This section comprises the most frequently-asked questions in The Mailbag and The Answer Guy columns.

Check the FAQ. (Oh, you already are.) Somewhat more seriously, there is a Linux FAQ located at http://www.linuxdoc.org/FAQ/Linux-FAQ.html which you might find helpful.

For people who are very new to Linux, especially if they are also new to computing in general, it may be handy to pick up one of these basic Linux books to get started:

Mailing lists exist for almost every application of any note, as well as for the distributions. If you get curious about a subject, and don't mind a bit of extra mail, sign onto applicable mailing lists as a "lurker" -- that is, just to read, not particularly to post. At some point it will make enough sense that their FAQ will seem very readable, and then you'll be well versed enough to ask more specific questions coherently. Don't forget to keep the slice of mail that advises you how to leave the mailing list when you tire of it or learn what you needed to know.

You may be able to meet with a local Linux User Group, if your area has one. There seem to be more all the time -- if you think you may not have one nearby, check the local university or community college before giving up.

And of course, there are always good general resources, such as the Linux Gazette.

Questions sent to the Gazette will be published in the Mailbag in the next issue. Make sure your From: or Reply-to: address is correct in your e-mail, so that respondents can send you an answer directly. Otherwise you will have to wait till the following issue to see whether somebody replied.

Questions sent to the Answer Guy will be published in The Answer Guy column.

If your system is hosed and your data is lost and your homework is due tomorrow but your computer ate it, and it's the beginning of the month and the next Mailbag won't be published for four weeks, write to the Answer Guy. He gets a few hundred slices of mail a day, but when he answers, it's direct to you. He also copies the Gazette so that it will be published when the month end comes along.

You might want to check the new Answer Guy Index and see if your question got asked before, or if the Answer Guy's curiosity and ramblings from a related question covered what you need to know.

An excellent summary of the current state of WINE, DOSEMU and other Windows/DOS emulators is in issue #44, The Answer Guy, "Running Win '95 Apps under Linux".

There is also a program called VMWare which lets you run several "virtual computers" concurrently as applications, each with its own Operating System. There is a review in Linux Journal about it.

Answers in either the Tips or Answer Guy columns that relate to troubleshooting hardware might be equally valuable to Linux and Windows users. This is, however, the Linux Gazette, so all the examples are likely to describe Linux methods and tools.

The Answer Guy has ranted about this many times before. He will gladly answer questions involving getting Linux and MS Windows systems to interact properly; this usually covers filesystems, use of samba (shares) and other networking, and discussion of how to use drivers.

However, he hasn't used Windows in many years, and in fact avoids the graphical user interfaces available to Linux. So he is not your best bet for asking about something which only involves Windows. Try one of the Windows magazines' letter-to-the-editor columns, an open forum offered at the online sites for such magazines, or (gasp) the tech support that was offered with your commercial product. Also, there are newsgroups for an amazing variety of topics, including MS Windows.

The usual command to ask for a help page on the command line is the word man followed by the name of the command you need help with. You can get started with man man. It might help you to remember this, if you realize it's short for "manual."

A lot of plain text documents about packages can be found in /usr/doc/packages in modern distributions. If you installed them, you can also usually find the FAQs and HOWTOs installed in respective directories there.

Some applications have their own built-in access to help files (even those are usually text stored in another file, which can be reached in other ways). For example, pressing F1 in vim, ? in lynx, or ctrl-H followed by a key in Emacs, will get you into their help system. These may be confusing to novices, though.

Many programs provide minimal help about their command-line interface if given the command-line option --help or -?. Even if these don't work, most give a usage message if they don't understand their command-line arguments. The GNU project has especially championed this idea. It's a good one; every programmer creating a small utility should have it self-documented at least this much.
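As a minimal sketch of the self-documenting habit described above, here is how a small utility might get a --help message almost for free. It uses Python's standard argparse module; the utility name "wordcount" and its options are hypothetical, invented purely for illustration.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build the command-line parser; argparse adds -h/--help automatically."""
    parser = argparse.ArgumentParser(
        prog="wordcount",  # hypothetical utility name
        description="Count the words in a text file.",
    )
    parser.add_argument("file", help="path of the file to read")
    parser.add_argument(
        "-l", "--lines", action="store_true",
        help="count lines instead of words",
    )
    return parser


def count(text: str, lines: bool = False) -> int:
    """Count lines or whitespace-separated words in text."""
    return len(text.splitlines()) if lines else len(text.split())


def main(argv=None) -> int:
    args = build_parser().parse_args(argv)
    with open(args.file, encoding="utf-8") as handle:
        print(count(handle.read(), args.lines))
    return 0


if __name__ == "__main__":
    raise SystemExit(main())
```

Run with --help, such a script prints a usage summary built from the help strings; given arguments it does not understand, argparse prints a short usage message and exits with an error status.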

Graphical interfaces such as tkman and tkinfo will help quite a bit because they know where to find these kinds of help files; you can use their menus to help you find what you need. The better ones may also have more complex search functions.

Some of the bigger distributions link their default web pages to HTML versions of the help files. They may also have a link to help directly from the menus in their default X Windowing setup. Therefore, it's wise to install the default window manager, even if you (or the friend helping you) have a preference for another one, and to explore its menus a bit.

It's probably a winmodem. Winmodems suck for multiple reasons:

So, yeah, there can be good internal modems, but it's more worthwhile to get an external one. It will often contain phone line surge suppression and that may lead to more stable connections as well.

Write the Gazette at


Answers to these questions should be sent directly to the e-mail address of the inquirer, with or without a copy to the Gazette. Answers that are copied to LG will be printed in the next issue in the Tips column.

Before asking a question, please check the new Linux Gazette FAQ to see if it has been answered there.

Sun, 05 Sep 1999 04:09:46 PDT


Subject: Linux in Algeria

I would like to thank all people who replied to me

I am a third world LINUX user, in my country there is only windoze as an OS, none know about the advantages of LINUX, now I am going to set up a web site about LINUX so help me please, any printed magazine, books, free cd-rom will be a great help. actually my video card is an SIS 5597 so please if any one who can send me a free LINUX distribution( especially RH 6 it's easy to install, I have RH5.1)with XFREE86 3.3.1 to support my video card.

sorry for my silly english, and keep the good work

friendly mimoune

my address is:

MR djouallah mimoune

ENTP garidi, vieux kouba algiers cp 05600

algeria.

Sun, 12 Sep 1999 15:18:59 -0400

From: Jim Bruer

Subject: Postfix article

I just installed Postfix on Suse 6.1. It seems a much easier mailer to install than any of the others I've read about in your recent issues (which were great btw). There is an active newsgroup and the author of the program responds VERY fast to stupid newbie questions. I speak from experience : ) From debian newsgroup postings it appears that Postfix is going to become their standard. Check it out, I'd love to see an article on it since I'm trying to move beyond the newbie stage and really understand this whole mail business.

Sun, 12 Sep 1999 22:00:49 +0200 (CEST)

Sun, 12 Sep 1999 22:00:49 +0200 (CEST)

From: <

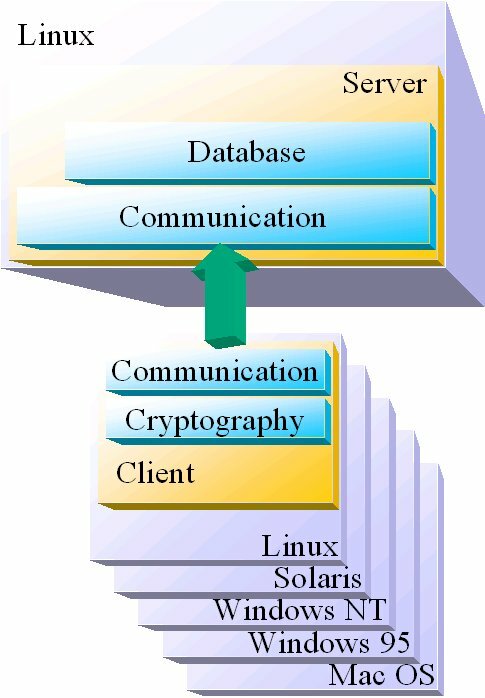

Subject: VPN and Firewall with Linux

Hi,

We are considering investing in a firewall and VPN for our network at work (a 3rd world aid organisation). We haven't deemed it necessary until now when we will upgrade to Novell Netware 5 which is mainly TCP/IP-based. We have funds for investing in Novell BorderManager as well.

However, we have been talking about having our own DNS server as well as firewall. Also we would like to be able to connect our offices around the world to the network by VPN. BorderManager has all these facilities but for a price. Is there some comprehensive Linux source (written, on-line, software) about these issues. Even a list of HOWTOs would be OK.

TIA,

Martin Sjödebrand

[Would somebody like to write an article about connecting several local networks securely over the Internet? There must be some Gazette readers who have such a network running in their office. -Ed.]

Thu, 9 Sep 1999 22:24:11 -0700

From: Lic. Manuel Nivar

Subject: Descompiler

Hi, My name is Manuel Nivar I dont know how to install a script for irc.chat because I don know how to descompiler. Please Help me.

The Linux Gazette Editor wrote:

Hi. This will be published in the October Gazette. I don't use IRC, so I'm afraid I can't personally offer any suggestions. What does "descompiler" mean? Do you mean you can't compile the program?

Manuel replied:

Yes I dont know how to descompiler

[I'm still hoping somebody will write some articles about IRC. It is certainly a popular topic among Gazette readers. -Ed.]

Thu, 02 Sep 1999 00:48:42 -0400

From: Leslie Joyce

Subject: Printing lines of black

Hi,

As a newbie to linux, I am having several problems with getting linux to work properly. Mainly now, I am having a problem with my printer, an HP 693C. I can print, but on every line of copy (words), after the sentence ends, I get a line of solid black. As I am dual booting and my printer works in Win95, I am thinking this is a linux driver problem. I am using the 550 C driver. I am using Caldera 2.2. I used the graphical interface to install the printer. Any thoughts, guesses or ideas as to where to go to find a solution?

Thanks for your time and help

Les.................

Fri, 3 Sep 1999 10:30:24 +1000

From: Binjala

Subject: Winmodems

It wasn't until I had my own version of MS Windoze -albeit someone else has the registration, disc etc- that I realised how much I'd like to use something else... the I was shown linux, so Ive got RedHat, but now I find I've got Winmodem, Eagle 1740 AGP VGA Card, and Creative Labs Sound Blaster PCI64. I realise the modem sucks, where can I find if the others are compatible? Are they compatible? Can you recommend a replacement 56K modem? The guy who built this box for me has never used Linux, so he's not very useful. Help!

Simon.

[Any modem except a winmodem should work fine. If it says it works with DOS and/or Macintosh as well as Windows, it should be OK. See the Hardware Compatibility HOWTO for details.

An index of all the HOWTOs is at www.ssc.com/mirrors/LDP/HOWTO/HOWTO-INDEX-3.html#ss3.1 -Ed.]

Fri, 03 Sep 1999 12:44:59 +0800

From: Jeff Bhavnanie

Subject: compiling network driver.

I've got the source code for my network card (SiS900), when I issue the compile command as described in the docs, i get no errors and the *.o file is created. When I issue 'insmod sis900.o' i get a list of errors. I'm a complete newbie at compiling things.

Can anyone else compile the source into object file for me? I'm running Mandrake 6.0.

Thanks

Jeff

Fri, 03 Sep 1999 11:06:14 +0000

From: Pepijn Schmitz

Subject: Help: printing problem.

Hi,

I'm having trouble getting a Solaris box to print on my Linux print server. The Linux box has a printer set up that prints to a Netware network printer. This works, I can print from Netscape, Star Office, etc. I've set up a network printer on a Solaris 7 machine that prints to this machine. This also works, for text files. But when I try to print a page from Netscape, nothing happens, and the following appears in my messages file (maas is my Linux machine with the Netware printer, amazone.xpuntx.nl is the Solaris machine):

    Sep 3 12:55:18 maas lpd[9788]: amazone.xpuntx.nl requests recvjob lp
    Sep 3 12:55:18 maas lpd[9788]: tfA001amazone.xpuntx.nl: File exists
    Sep 3 12:55:18 maas lpd[9789]: amazone.xpuntx.nl requests recvjob lp
    Sep 3 12:55:18 maas lpd[9789]: tfA001amazone.xpuntx.nl: File exists

This repeats itself every minute. I checked, and the tfA001amazone.xpuntx.nl file really does not exist anywhere on my system. There is a cfA001amazone.xpuntx.nl file in /var/spool/lpd/lp however, and if I remove this file the next time around it says this in the messages file:

    Sep 3 13:00:27 maas lpd[9854]: amazone.xpuntx.nl requests recvjob lp
    Sep 3 13:00:27 maas lpd[9855]: amazone.xpuntx.nl requests recvjob lp
    Sep 3 13:00:27 maas lpd[9855]: readfile: : illegal path name: File exists

This happens once. The next minute the four lines I gave earlier reappear, and the cfA001amazone.xpuntx.nl file has reappeared.

I hope someone can help me out here, this has got me stumped! Thanks in advance for anyone who can shed some light...

Regards,

Pepijn Schmitz

Fri, 3 Sep 1999 11:36:57 -0400 (EDT)

From: Tim

Subject: 2gig file size limit?

Greetings,

I have a box on my network running RedHat 6.0 (x86) that is going to be used primarily for backing up large database files. These files are presently 25 gigs in size. While attempting a backup over Samba, I realized that the file system would not allow me to write a file > 2gig to disk. I tried using an large file system patch for kernel 2.2.9, but that only allowed me to write 16 gigs, and it seemed buggy when it was doing that even. Doing an 'ls -l' would show me that the file size of the backup was about 4 gig, but the total blocks in the directory with no other files there indicated a much higher number like so:

    [root@backup ]# ls -l
    total 16909071
    -rwxr--r-- 1 ntuser ntuser 4294967295 Sep 2 19:45 file.DAT

I am well aware that a 64 bit system would be the best solution at this point, but unfortunately i do not have those resources. I know BSDi can write files this big, as well as NT on 32 bit systems.. i am left wondering, why can't linux?

Thanks in advance.

-Tim
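A side note on the numbers in this letter, offered as an observation rather than a diagnosis: a 2 GB ceiling is what one would expect wherever file offsets are held in a signed 32-bit integer, and the size reported by ls above, 4294967295, is exactly the largest unsigned 32-bit value. The short sketch below only checks that arithmetic. The variable names are illustrative, and it assumes the "total" line from ls is in 1 KB blocks, as GNU ls normally reports it.

```python
# Largest offset representable in a signed 32-bit integer: 2 GiB minus one byte.
MAX_SIGNED_32 = 2**31 - 1

# Largest value representable in an unsigned 32-bit integer: 4 GiB minus one byte.
MAX_UNSIGNED_32 = 2**32 - 1

# Figures quoted in the letter's "ls -l" output.
reported_size = 4294967295   # size column, in bytes
reported_total_kib = 16909071  # "total" line, assumed to be 1 KB blocks

GIB = 2**30


def to_gib(num_bytes: int) -> float:
    """Convert a byte count to GiB."""
    return num_bytes / GIB


if __name__ == "__main__":
    print(f"signed 32-bit limit:   {MAX_SIGNED_32} bytes")
    print(f"unsigned 32-bit limit: {MAX_UNSIGNED_32} bytes")
    print("size column equals unsigned limit:", reported_size == MAX_UNSIGNED_32)
    print(f"blocks in use: about {to_gib(reported_total_kib * 1024):.1f} GiB")
```

Under that assumption the block count corresponds to roughly 16 GiB on disk, which matches the "16 gigs" Tim mentions even though the size column shows only 4 GiB.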

Fri, 3 Sep 1999 18:03:22 +0200

From: Service Data

Subject: Linux.

(Translated from the Italian.) Is it possible to find out what the latest version of Linux on sale is? Is it possible that version 6.2 has come out?

I await your reply. Thank you.

[Hi. Sorry, I don't speak Italian. "Linux" technically refers only to the kernel. The kernel is at version 2.2.12. We track the kernel version on the Linux Journal home page, www.linuxjournal.com. The original site is www.kernel.org.

The distribution you buy in a store contains not just the Linux kernel, but a lot of software from a lot of sources. Each distribution has its own numbering system. RedHat is at 6.0. SuSE just released 6.2. The other distributions have other numbers. We list the versions of the major distributions at www.linuxjournal.com, "How to Get Linux".

There are Italian speakers who read the Gazette; perhaps they can give a better answer than this. -Ed.]

Sat, 4 Sep 1999 08:35:51 +0530

From: A.PADMANARAYANAN

Subject: Reading Linux partitions from NT

Dear sir, can you please tell me how can i access linux partitions from windows NT or 98? is it possible? please help me or point me to any resources man pages or URLs i will work on it!

Thanks in advance!

sincerely

Vijay

Pune, India

[There is a Windows 95 tool to do this, but I have forgotten its name. It wasn't in a very advanced stage the last time I looked at it. It would be easier to go the other way and have Linux mount your Windows partitions and copy the files there so that Windows can see them. Run "man mount" for details. -Ed.]

Sat, 4 Sep 1999 21:40:47 +0530

From: Joseph Bill E.V

Subject: Chat server

Dear sir,

Is there any chat server for linux users to share their views

Regards,

Bill

Sat, 11 Sep 1999 12:55:54 -0600

From: Daniel Silverman

Subject: Linux Internet forums

Do you know of any Linux internet forums? If you do, I will be very grateful for their urls.

Sun, 05 Sep 1999 02:34:41 -0300

From: Erik Fleischer

Subject: How to prevent remote logins as root

For security reasons, I would like to make it impossible for anyone logging in remotely (via telnet etc.) to log in as root, but so far haven't been able to figure out how to do that. Any suggestions?

Sun, 5 Sep 1999 23:27:16 +0200

From: Michał N.

Subject: When RIVA TNT 2 drivers for XWindows ?

When XWindows will work properly with vga's with chipset RIVA TNT 2 ? When I'm trying to use RIVA TNT there are only 16 colors and very,very poor resolution.

Mon, 6 Sep 1999 01:40:38 +0200

From: Per Nyberg

Subject: Mandrake

Hi, Im thinking of changing to Linux and I will buy Mandrake Linux. Is Red Hat better or is it a good idea of buying Mandrake?

Sun, 05 Sep 1999 19:24:28 -0600

From: Dale Snider

Subject: neighbour table overflow

I was running quite a long time with NFS and transmission stopped. I get:

Sep 6 00:03:20 coyote kernel: eth0: trigger_send() called with the transmitter busy.

I rebooted the machine I was connected to and I get the below (part of /var/log/messages file. Not all error statements shown):

    Sep 6 17:57:04 beartooth kernel: neighbour table overflow
    Sep 6 17:57:04 beartooth kernel: neighbour table overflow
    Sep 6 17:57:04 beartooth rpc.statd: Cannot register service: RPC: Unable to send; errno = No buffer space available
    Sep 6 17:57:04 beartooth nfs: rpc.statd startup succeeded
    Sep 6 17:57:04 beartooth rpc.statd[407]: unable to register (SM_PROG, SM_VERS, udp).

df gives:

    Filesystem  1k-blocks     Used  Available  Use%  Mounted on
    /dev/hda2      792800   628216     123619   84%  /
    /dev/hda1      819056   369696     449360   45%  /NT
    /dev/hda4     7925082  4892717    2621503   65%  /home

I can't find a reference to this error.

Using RH 6.0 on Intel Pentium III 500 Mhz.

Cheers

Dale

Mon, 06 Sep 1999 02:17:37 +0000

From: Patrick Dunn

Subject: Parallel Port Scanners and Canon BJC-2000

I have two questions...

1)Does anyone have a driver written to work with Parallel port scanners? I have one of these dastardly things that I wish I didn't buy but the price was too good to pass up. It's a UMAX Astra 1220P.

2)I have recently picked up a Canon BJC-2000 inkjet printer and it will print in B&W under Linux using the BJC-600/4000 driver under Ghostscript 5.10 (Mandrake Distro 6.0). Is there a native driver in the works? Color printing under this printer can be problematic.

Thanks, Pat

Tue, 07 Sep 1999 21:59:49 -0500

From: balou

Subject: shell programming

Could you point me to a good source for shell programming? I would prefer to find something off the internet that's free. I've tried multiple web searches, but usually just come up with book reviews and advertisements.... If there are no free resources on the web, which book would you recommend for a relative novice at Linux with experience in basic, logo, fortran, pascal, and the usual msdos stuff?

Wed, 08 Sep 1999 21:35:04 +0700

From: Ruangvith Tantibhaedhyangkul

Subject: Configure X to work with Intel 810 chipset

Hi again,

I just bought a new computer. It has an "on-board" video card, Intel 810 chipset, or somewhat like that. I couldn't configure X to work with this type of card. First, I let Linux probed, it failed. Then I looked at the list, of course, it wasn't there. Then I tried an unlisted card and configured it as a general svga, it still failed. What to do now?

Wed, 8 Sep 1999 16:57:17 +0200


Subject: Internet connection problem !

Hi all

I hope someone can lend some advise ...

I have a PII 350 Mhz box with 64 MB ram running RH 6.0. I am using KDE as a wm and am trying to set up a RELIABLE connection to my ISP. I am using a ZOLTRIX (Rockwell) 56K modem, and kppp to dial in to my ISP.

My problem is that my I can never connect consistently.. in other words today it works fine but tomorrow it will throw me out ... It seems to dial in fine but when it tries to 'authenticate' my ID and password it bombs out ! It connects fine every time if I boot into Windoze 98.

Does anyone have any ideas as to why this might be happening ?

Thanks in advance

Regards

Rakesh Mistry

Fri, 10 Sep 1999 13:28:07 +1000

From: Les Skrzyniarz

Subject: Loading HTML back issues

I am using Win98 and IE5, and when I try to load the complete issues, e.g. Issue 42, it stops loading at some random point on the page, and as such I cannot save the complete issues (some, not all). Even when I come back to it again at a later time the problem persists. The problem is not at my end, as I do not have this problem with any other page on the internet. Can you offer a reason for this or a solution?

Thanks

Les.S

[Hi. This will be published in the October Gazette, and we'll see if any other readers are having the same problem. I have not heard any other complaints about this so far. I have not used Win98 or IE5, so I can't suggest anything directly.

Which site are you reading the Gazette at? Can you try another mirror?

You can try downloading the Gazette via FTP and reading it locally. See ftp://ftp.ssc.com/pub/lg/README for details.

It may be related to the size of the file and a misconfigured router on the network between us and you. issue45.html is 428K. Are any of the other pages you visit that big? -Ed.]

Fri, 10 Sep 1999 13:32:21 -0500

From: root

Subject: (no subject)

Hi! I have a question for you... Is there an utility like fsck but for Macintosh HFS File systems? I want to recover a damaged one due to power supply problems.


Mon, 13 Sep 1999 10:22:31 -0700

From: MaxAttack

Subject: Re: hello

I was looking into CS software for linux, And one of the tools i was looking into was software to graph the internet its etc map out the registerd users on the arpnet. I was woundering if u happend to have any infomation in any of your magazines on this topic

The Linux Gazette Editor wrote:

No, I don't know of any such software.

What is it you wish to do? Find out who is on each computer? The Internet doesn't really have a concept of "registered user", because the concept of "What is a user?" was never defined Internet-wide.

In any case, you'd have to poll every box to find out what it thinks its current user is. But this identity has no real meaning outside the local network. For Windoze boxes it may be totally meaningless, because users can set it to anything they want. And how would you even find the boxes in the first place? Do a random poll of an IP range? That sounds like Evil marketoid or cracking activity. In any case, if the machines are behind dynamic IPs, as is common with ISPs nowadays, there's no guarantee you'll ever be able to find a certain machine again even if you did find it once.

MaxAttack replied:

i was thinking of just pinging all the registerd users at some DNS databases over a period of time. And using some software to create a graphical user interface for it, or such.

The Linux Gazette Editor asked:

Are you talking about a network analyzing program like those products that show an icon in red or page the system administrator if a computer goes down?

I assume by "user" you mean a particular machine rather than a user-person, since the DNS doesn't track the latter.

MaxAttack replied:

hehe sorry for the confusion what i was trying to pass on what the notion of a software that allows u to track out all the registed Boxes on the internet and graph them into a nice graphical picture. so it looks something like this hopefully this diagram helps:

                |---------------|
                |   InterNIC    |
                |               |
                |---------------|
                   /         \
                  /           \
                 /             \
    |---------------|     |---------|
    | linuxstart.com|     |  blah.  |
    |               |     |   com   |
    |---------------|     |---------|
            |
            |
    |---------------|
    |   */Any Sub   |
    |    Domain     |
    |---------------|

Sun, 12 Sep 1999 17:19:10 -0400

From: William M. Collins

Subject: HP Colorado 5GB

Using Red Hat 5.2

I purchased an HP Colorado 5GB tape drive on the recommendation of a friend. He helped install RH 5.2 and, using a program on the system named Arkeia, configured the Colorado from that program. This friend has moved from the area.

My questions are:

Thanks

Bill

Tue, 14 Sep 1999 09:56:39 +0800

From: a

Subject: program that play Video Compact Disk (VCD)

I have RH 5.1. Is there any program that plays Video Compact Disks (VCD)?

Thu, 16 Sep 1999 20:34:14 -0400

From: madhater

Subject: ahhhhh i heard that

linux will run out of space in 2026 cause of some bs about that i counts in units and the hard drive will be filled this is not true .... right!

[No. Linux, like most Unixes, has a "Year 2038 problem" because the system clock counts the seconds since January 1, 1970, and that number will overflow a signed 32-bit integer on January 19, 2038.

People generally assume we will all have moved to 64-bit machines by then, which have a 64-bit integer and thus won't overflow until some astronomical time hundreds of billions of years from now. If 32-bit machines are still common then, expect some patches to the kernel and libraries to cover the situation. (People will have to check their database storage formats too, of course.)

I have never heard of any time-specific problems regarding i-nodes and disk space. A Unix filesystem has both a limit of the amount of data it can hold and the number of files it can contain. The number of files is the same as the number of i-nodes, which is fixed at format (mkfs) time. Run "df -i" to see what percentage of i-nodes are used. Every file and directory (including every symbolic link) uses one i-node. (Hard links to the same file share the i-node.) For normal use it's never a problem. However, if you have a huge number of tiny files (as on a high-volume mail or news server), it may be worth formatting the partition with a larger-than-usual number of i-nodes. None of this has anything to do with the year, though. -Ed.]
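Both points in the note above can be checked with a few lines of Python. This is a minimal sketch, assuming a Unix-like system where `os.statvfs` is available; the helper names `last_second` and `inode_usage` are made up for illustration. The first function works out when a signed counter of seconds since 1970 runs out, and the second reports roughly what "df -i" shows for one filesystem.

```python
import os
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def last_second(bits):
    """Return the last UTC moment a signed counter of `bits` bits can hold."""
    return EPOCH + timedelta(seconds=2 ** (bits - 1) - 1)


def inode_usage(path="/"):
    """Return (total, used, percent_used) i-nodes for the filesystem at path."""
    st = os.statvfs(path)
    total = st.f_files
    used = total - st.f_ffree
    # Some filesystems report 0 total i-nodes because they allocate them
    # dynamically; report 0% rather than dividing by zero.
    percent = 100.0 * used / total if total else 0.0
    return total, used, percent


if __name__ == "__main__":
    print("32-bit clock ends at:", last_second(32))
    # A 64-bit counter lasts far beyond datetime's year-9999 limit,
    # so only its span in years is estimated here.
    years = 2 ** 63 / (365.25 * 24 * 3600)
    print("64-bit clock lasts roughly %.1e years" % years)
    print("i-nodes (total, used, %% used): %s" % (inode_usage("/"),))
```

The i-node figures depend entirely on how the partition was formatted and how many files it holds, and never on the date.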

Fri, 17 Sep 1999 21:54:30 -0700

From: Ramanathan Prabakaran

Subject: run-time error on cplusplus programme

I have edited the source code in Windows Notepad, compiled it with cygwin32 and run the programme. The source code uses the fstream class for file input/output. I created the input file in the same Windows Notepad. But the programme does not open or read the contents of the input file.

Help please

[I haven't quite gotten to the point of banning Windoze questions in the Mailbag because it's hovering at only one or two per issue. But I'm starting to think about it.

However, I do want to support the use of free/open source compilers on Windows, especially since the Cygnus ones are (ahem) "our" compilers. Are there any better forums for Cygnus-on-Windoze to refer people to? -Ed.]

Sun, 19 Sep 1999 20:12:47 +0200

From: David Le Page

Subject: Making Linux talk to an NT network

I want to get Linux running on my PC at work, and talking to the NT network for file sharing and printer use. Okay, okay, I know the theory -- get samba up and running, read the manual, and make it all happen. But I'm not a networking guru, and I'm battling to understand samba. And everything I read about it seems to be focused on getting Win machines talking to Samba servers, not the other way around. Can anyone tell me, in 10 Easy Steps, how to get this done?

Mon, 20 Sep 1999 17:35:23 -0400

From: Mahesh Jagannath

Subject: Netscape and Java

I am running Netscape Comm 4.51 on Red Hat Linux 6.0. It crashes invariably if I load a site with any Java applet etc. Is there something I am missing or is this a known bug?

Mahesh

Mon, 20 Sep 1999 16:48:35 -0500


From: <

Subject: Modem noises

Hi Folks,

I know this is a nitpick, but for reasons I won't go into, it's keeping me from using Linux as much as I might. Is there a way to divert the modem noises to /dev/null ? Hearing them was a help when I was debugging my connection, but now it's just a disturbance.

Thanks,

Jerry Boyd

[Add "L0M0" to your modem's initialization string. One of these sets the volume to zero; the other says never turn on the speaker. (Of course I forget which is which, which is why I set both. Can you believe how many whiches are in that last sentence?) If there is no existing initialization string, use "ATL0M0" + newline. Each modem program will need this put into its configuration file. For PPP, this would be in your chatscript.

I use "L1M1", which means (1) low speaker volume, (2) turn the speaker on only after dialing and off when the connection either succeeds or fails. -Ed.]

Tue, 21 Sep 1999 08:44:19 GMT

From: raghu ram

Subject: help

sir, I am using apache web server on Linux machine.

My problem is log rotation. To rotate logs we should have the config file given below:

/var/log/messages {
    rotate 5
    weekly
    postrotate
        /sbin/killall -HUP syslogd
    endscript
}

config is over,but my problems is where should be setup.

please help me

Thanks

Raghu

The Linux Gazette Editor wrote:

I don't understand what the problem is. What does "where should be setup" mean?

Raghu replied:

I don't know how to run the config file. I went to man logrotate, but it just gives the config file.

Thu, 23 Sep 1999 13:11:53 +0530

From: neeti

Subject: linux 6.2 compatible scsi adapters

Will somebody please tell me the list of SCSI adapters compatible with SuSE Linux 6.2?

thanx

neeti

Thu, 23 Sep 1999 17:32:46 +0200

From: De Miguel, Guillermo

Subject: Package to install...

Hello everybody,

I have Red Hat 6.0 installed on my notebook in an 800 MB partition with several products installed. As you can suppose, I had to restrict the installation of a lot of packages because I do not have much free hard disk space. Sometimes, when I am working with my installation, I have problems because Linux does not find some file(s). The question is, does somebody know a way to find which package contains a file that is not installed? I know that there is an option in the rpm command to find the package a file belongs to. However, that file has to be on the hard disk. Can anybody help me?

Thanks ahead. Guillermo.

Wed, 01 Sep 1999 16:22:28 +0200

From: Alessandro Magni

Subject: Imagemap

In the need to define hotspots on some images in HTML documents, I found a total lack of programs for Linux that enable you to accomplish this task. Does somebody know what I'm searching for?

Wed, 01 Sep 1999 13:13:02 -0700

From: Jim Dennis

Subject: Freedom from UCITA: Free Software

In response to Ed Foster's many recent gripes about the UCITA and the risks associated with some proprietary software licensing.

I'm sure he's heard it before but Freedom from the threat of UCITA is only as far away as your local free software mirror site (FTP and/or web based). Linux, FreeBSD (and its brethren) have licenses without any such traps(*).

* (I've appended a brief note on the two most common software licenses to forestall any argument that they DO contain "such traps.")

If the quality of Linux and other free software didn't speak for itself, the UCITA would be an incentive for its adoption.

It's as though the major commercial software publishers are in their death throes and intent on getting in one last bite, kick or scratch at their customers.

I'm not saying that free software and the open source movement is poised to wipe out proprietary software. For most free software enthusiasts the intent is to provide alternatives.

Ironically it seems as though the major proprietary software interests will obliterate themselves. The UCITA that they propose may pass and become the fulfillment of some modern Greek tragedy.

I just hope that free software enthusiasts can provide the improvements and new, free products that may become unavailable if the commercial software industry annihilates itself.

There's much work to be done.

----------------------- Appendix -----------------------------

Some software is distributed in binary form free of charge. Some proprietary software is distributed with the source code available, but encumbered by a license that limits the production of "derivative works." Those are not commonly referred to as "free software" or "open source" by computing professionals and technical enthusiasts.

However, "free software with open source" permits free use and distribution and includes source code and a license/copyright that specifically permits the creation and distribution of "derivative works" without imposition of licensing fees, royalties, etc.

That, of course is a simplification. There are extensive debates on USENet and other technical fora about the exact nature and definition of the terms "free software" and "open source."

However, that is the gist of it.

There are two major license groups for "free/open source" software: BSD (Berkeley Software Distribution) and GPL (GNU General Public License).

The BSD license was created by the Regents of the University of California at Berkeley. It was originally applied to a set of patches and software utilities to UNIX (which was then owned by AT&T). Since then the BSD license has been applied to many software packages by many people and organizations that are wholly unconnected to UC Berkeley. It is the license under which Apache and FreeBSD are available.

The BSD license permits derivative works, even closed source commercial and proprietary ones. Its principal requirements are the inclusion of a copyright notice and a set of disclaimers (disclaiming warranty and endorsement by the original authors of the software). Many advocates consider it to be the "free-est" license short of complete and utter abandonment (true public domain).

The GPL is somewhat more complicated. It was created by the Free Software Foundation (FSF), which was founded by Richard M. Stallman (a software visionary with a religious fervor and a following to match).

The terms of the GPL which cause misunderstandings and debate revolve around a requirement that "derivative works" be available under the same licensing terms as their "source" (progenitors?).

This is referred to as the "viral nature" of the GPL.

Conceptually if I "merge" the sources to two programs, one which was under the GPL and another which was "mine" then I'm required to release the sources to my software when I release/distribute the derivative.

That's the part that causes controversy. It's often played up as some sort of "trap" into which unwary software developers will be pulled.

One misconception is that I have to release my work when I use GPL software. That's absurd, pure FUD! I can use GNU EMACS (a GPL editor) and gcc (a popular GPL compiler) to write any software I like. I can release that software under any license I like. The use of the tools to create a package doesn't make it a "derivative work." Another more subtle misconception is that I'd be forced to release the sources to any little patch that I made to a package. If I make a patch, or a complex derived work, but only use it within my own organization, then I'm not required to release it. The license only requires the release of sources if I choose to "DISTRIBUTE" my derivative.

One last misconception. I don't have to distribute my GPL software free of charge. I can charge whatever I like for it. The GPL merely means that I can't prevent others from distributing it for free, that I must release the sources and that I must allow further derivation.

The FSF has developed an extensive suite of tools. Their GNU project intends to create a completely "free" operating system. They provided the core "tool chain" that allowed Linus Torvalds and his collaborators to develop Linux. That suite is released under the GPL. Many other software packages by many other authors are also released under the GPL.

Indeed, although the Linux kernel is not a "derived work" and its developers are unaffiliated with the FSF (as a group) it is licensed under the GPL.

There are a number of derivatives and variations of these licenses. Some of them may contain subtle problems and conflicts. However, the intent of the authors is generally clear. Even with the worst problems in "free" and "open source" software licenses, there is far less risk to consumers who use that software than there is from any software released under proprietary licenses that might be enforced via the UCITA.

[Jim, you get the award for the first Mailbag letter with an Appendix.

There is an article about UCITA on the Linux Journal web site, which contains an overview of UCITA's potential consequences, as well as a parody of what would happen if UCITA were applied to the auto industry. -Ed.]

Fri, 3 Sep 1999 10:32:58 +0200

From: niklaus

Subject: gazette #45 - article on java

Hey, what about that buggy article on JDE on Linux? I get nothing more than a floating point error ?!?

PN

Fri, 3 Sep 1999 19:16:19 +0200


From: <

Subject: Re: Ooops, your page(s) formats less-optimum when viewed in Opera

Hi Guys at Linux Gazette.

This mail responds to Bjorn Eriksson's mail in issue #45 / General Mail: SV: Ooops, your page(s) formats less-optimum when viewed in Opera (http://www.operasoftware.com/).

I have the same problem in my Opera. I tested it with Opera 3.1, 3.5 and 3.6 on Windows and the alpha release for BeOS, but every time the same problem. This is my solution for the problem: When defining this:

you use the tag option WIDTH="2". If you change it to WIDTH="1%" it looks better.

Jan-Hendrik Terstegge

[I tried his advice, and another Opera user said it worked. -Ed.]

Thu, 02 Sep 1999 13:44:53 -0700


From: <

Subject: misspelling

This month's linux gazette contains what is for my money the most hideous misspelling ever to appear in your pages. The article "Stripping and Mirroring RAID under RedHat 6.0" clearly does NOT refer to an attempt to remove any apparel whatsoever from our favorite distro. STRIP is to undress, STRIPE is to make a thin line, RAID does not concern itself with haberdashery or nudity.

Dave Stevens

The Linux Gazette Editor writes:

OK, fixed.

P.S. STRIP is also used in electronics, when you scrape the insulation off wires.

Mark Nielsen adds:

Oops!

Sorry!

Thanks!

I used ispell to check the spelling. Dang, it doesn't help when the word you misspell is in the dictionary.

Mark

Thu, 16 Sep 1999 13:46:22 +0200 (CEST)

From: seigi seigi

Subject: Linux Gazette in French

Hello

I would like to know whether your magazine exists in French or, if not, whether you know of a French-language magazine that covers Linux.

Thanks in advance

[There are two French versions listed on the mirrors page, one in Canada and one in France. There used to be a third version, but it no longer exists. A company wrote me and said they are working on a commercial translation as well, although I have not heard that it's available yet. -Ed.]

Mon, 20 Sep 1999 08:59:40 -0400

From: Gaibor, Pepe (Pepe)

Subject: What is the latest?

With great interest I got into and perused Linux Gazette. Any new stuff beyond April 1997? and if so where is it.

[You're reading it. :)

If the site you usually read at appears to be out of date, check the main site at www.linuxgazette.com. -Ed.]

Wed, 22 Sep 1999 9:21:17 EDT

From: Dunx

Subject: Encyclopaedia Galactica != Foundation

Re: September 99 Linux Gazette, Linux Humour piece -

Liked the operating systems airlines joke, but surely the footnote about the Encyclopaedia Galactica is in error? The only EG I know is the competitor work to the Hitch Hiker's Guide to the Galaxy in Douglas Adams' novels, radio and TV shows, and computer games.

Cheers.

Thu, 23 Sep 1999 17:26:30 +0800

From: Phil Steege

Subject: Linux Gazette Archives CDROM

I just wondered if there was, or if not has there ever been, any thought to publishing a Linux Gazette Archives CDROM.

Thank you for a great publication.

Phil Steege

[See the FAQ, question 2. -Ed.]


October 1999 Linux Journal

The October issue of Linux Journal is on the newsstands now. This issue focuses on embedded systems.

Linux Journal now has articles that appear "Strictly On-Line". Check out the Table of Contents at http://www.linuxjournal.com/issue66/index.html for articles in this issue as well as links to the on-line articles. To subscribe to Linux Journal, go to http://www.linuxjournal.com/subscribe/ljsubsorder.html.

For Subscribers Only: Linux Journal archives are now available on-line at http://interactive.linuxjournal.com/

Upcoming conferences & events

Atlanta Linux Showcase. October 12-16, 1999. Atlanta, GA.

Open Source Forum. October 21-22, 1999. Stockholm, Sweden.

USENIX LISA -- The Systems Administration Conference. November 7-12, 1999. Seattle, WA.

COMDEX Fall and Linux Business Expo. November 15-19, 1999. Las Vegas, NV.

The Bazaar. December 14-16, 1999. New York, NY. "Where free and open-source software meet the real world". Presented by EarthWeb.

SANS 1999 Workshop On Securing Linux. December 15-16, 1999. San Francisco, CA. The SANS Institute is a cooperative education and research organization.

Red Hat News (Burlington Coat Factory, Japan, etc)

DURHAM, N.C.--September 7, 1999--Red Hat, Inc. today announced that Burlington Coat Factory Warehouse Corporation has purchased support services from Red Hat for its nationwide Linux deployment.

Under the agreement, Red Hat Services will provide telephone-based support to more than 260 Burlington Coat stores nationwide (including subsidiaries). Red Hat will configure, install and provide ongoing maintenance for customized Dell OptiPlex (R) PCs and PowerEdge servers running factory installed Red Hat Linux. The Red Hat Linux OS-based systems will host Burlington Coat Factory's Gift Registry and will facilitate all other in-store functions, such as inventory control and receiving.

Durham, N.C.--September 7, 1999--Red Hat, Inc. today announced Red Hat Japan. The new Japanese operation will deliver the company's award-winning Red Hat Linux software and services directly to the Japanese marketplace. Red Hat Japan will feature a new, expanded headquarters and staff, and a new leader for Red Hat's operations in Japan.

In addition, Red Hat has named software industry veteran Masanobu Hirano as president of Red Hat Japan. Prior to Red Hat, Mr. Hirano was president of Hyperion Japan, a subsidiary of Hyperion Solutions, one of the country's most successful online analytical processing (OLAP) solution vendors. He also served as vice president and was a board member of ASCII Corporation, one of Japan's pioneering computer software companies.

Durham, N.C.--September 7, 1999--Red Hat®, Inc., today announced that Gateway has joined its authorized reseller program. When requested by its customers, Gateway will install Red Hat on its ALR® servers for network business environments.

Free Linux tech support via the web (No Wonder!)

"It might sound crazy, but we have been doing it for almost 3 years."

News from The Linux Bits

The Linux Bits is a weekly ezine at www.thebits.co.uk/tlb/. It is perfectly suited to offline viewing (no graphics or banners).

A Survey Of Web Browsers Currently Available For Linux

Here's a list of all the Linux browsers and their stage of development. Obviously if you know of one that's not on the list then please let them know.

E-mail signature quotes:

Oh My God! They Killed init! You Bastards!

Your mouse has moved. Windows must be restarted for the change to take effect. Reboot now? [ OK ]

If Bill Gates had a nickel for every time Windows crashed... Oh wait, he does.

Feature freeze for Linux 2.3: kernelnotes.org/lnxlists/linux-kernel/lk_9909_02/msg00460.html

HAPPY 8TH BIRTHDAY LINUX! On the 17th September 1991, Linus e-mailed his 0.01 kernel to just four people. Doesn't sound like much does it? Well believe it or not this was to be the first public release of Linux.

Linuxlinks.com. The trouble with sites that primarily focus on links to other sites, is that they tend to be thrown together with no real thought and organisation put into them. Fortunately LinuxLinks.com is not one of those sites. A great place to track down information on specific subjects concerning Linux.

LB also has a multi-part review of StarOffice.

Over 4000 UK IT and management training courses online

Training Pages, an online database of IT and management training courses in the UK, has officially passed the threshold of 4000 entries. At the time of writing, the database detailed 4027 courses from 347 companies. These numbers will almost certainly increase by the time this notice is released.

[Type "linux" in the search box to see their 21 Linux courses. -Ed]

The press release offers a few technical details of the web site:

No other UK training site offers comparable levels of interactivity and dynamic web services. The secret behind the site's functionality is its integration of open standards, open source software, and a smattering of in-house programming trickery.

By separating the dynamic functions from the presentational elements of HTML, the site can constantly be adapted and improved with minimal human intervention. The programme code currently contains a host of premium features which have yet to be activated. e.g. direct booking, last-minute booking, course evaluation, trainer evaluation, freelance trainers, etc.

Training Pages was developed by GBdirect, a boutique IT consultancy and training company based in Bradford. A detailed case study of how they designed and built the site is available from www.gbdirect.co.uk/press/1999/trainingpages.htm

New IDE Backup Device (Arco)

HOLLYWOOD, Florida --Arco Computer Products, Inc., www.arcoide.com, a leading provider of low cost IDE disk mirroring hardware, today announced the DupliDisk RAIDcase, a real-time backup device that offers PC users a simple and convenient way to maintain an exact, up-to-the-minute duplicate of their IDE hard drives.

If a hardware failure disables one of the mirrored drives, the other takes over automatically. External LEDs change color to indicate a failed drive and an audible alarm alerts the user of the drive failure but there is no interruption of service. The system continues to function normally until the user can find a convenient time to power down and install a new drive. Caddies remove easily to facilitate replacement of failed drives.

The RAIDcase requires neither an ISA nor a PCI bus slot. IDE and power cables provided by Arco connect the RAIDcase to the computer's onboard IDE controller and power supply. Once installed, the RAIDcase operates transparently, providing continuous automatic hard disk backup and disk fault tolerance. All data sent from the PC to the primary drive is automatically duplicated (concurrently) to the mirroring drive but the system (and end user) sees only the primary drive.

The RAIDcase uses no jumper settings and requires no driver, TSR or IRQ. Because it requires no device drivers, the RAIDcase is essentially operating-system independent. It has been tested with systems running Windows 3.x, 95, 98 and NT, as well as OS/2, DOS, Unix, Linux, Solaris386, BSDI and Novell NetWare. Manufacturer's suggested retail price: $435.

www.arcoide.com

News from E-Commerce Minute

www.ecommercetimes.com

Linux vendor MandrakeSoft announced a strategic partnership with LinuxOne this week. They intend to develop Chinese language business and personal software solutions, advancing the cause of open-source in a potentially explosive Internet and computing market...

www.ecommercetimes.com/news/articles/990903-2.shtml

E-commerce solution provider Unify Corp., announced this week that two of its forthcoming Internet software releases will be certified to run on the Red Hat, Inc. (Nasdaq: RHAT) distribution of the Linux operating system (OS)...

www.ecommercetimes.com/news/articles/990910-8.shtml

Navarre, a business-to-business e-commerce company that offers music and software, announced today that it has entered into a distribution deal with Linux developer tools provider Cygnus Solutions. The deal is Navarre's sixth distribution agreement for the Linux operating system and related products...

www.ecommercetimes.com/news/articles/990903-6.shtml

Magic Software Enterprises unveiled the latest version of its e-commerce server Friday, a product powered by the Red Hat, Inc. (Nasdaq: RHAT) distribution of the red-hot Linux operating system (OS)...

www.ecommercetimes.com/news/articles/990920-3.shtml

Oracle Corp. has announced that its Oracle8i for Linux, a database designed specifically for the Internet, has been certified to run on the Red Hat, Inc. (Nasdaq: RHAT) distribution of the open-source operating system (OS). The announcement officially launches a strategic partnership between the two companies that is aimed at advancing corporate adoption of Linux...

www.ecommercetimes.com/news/articles/990922-7.shtml

New Linux Bulletin Board

ANNOUNCEMENT FROM: WM. Baker Associates

On 09/13/99 WM. Baker Associates launched the Linux Bulletin Board at:

http://www.w-b-a.com/linux.html

This new Linux Bulletin Board provides a forum for visitors to ask questions, learn, and share ideas about Linux related issues and events.

Bulletin Board Categories include:

Linux Technical Information

Linux News, Events & Publications

Linux Investment Information

Ziatech and Intel Sponsor Applied Computing Software Seminars

San Luis Obispo, CA, September 20, 1999 -- Ziatech Corporation, with sponsorship from Intel Corporation, is hosting a continuing series of one-day seminars focusing on real-time operating system solutions for applied computing applications, it was announced today. Beginning in late October, the 1999 Applied Computing Software Seminar Series will feature presentations from leading software companies, including Wind River Systems (VxWorks®, Tornado(tm)), QNX Software Systems (QNX®, Neutrino®), and MontaVista Software (Hard Hat(tm) Linux), in addition to presentations by Ziatech and Intel. The seminar series begins in San Jose on October 29, and continues in San Diego (November 4), Tokyo (November 8), Dallas (November 30), and Boston (December 2). Each one-day session begins with registration at 7:30 a.m., includes lunch, and concludes at 5 p.m.

www.ziatech.com

Linux C Programming Mailing Lists

The Linux C Programming Lists now officially exist. They will be archived on-line and also via majordomo. The Linux C Programming Lists aim to help people programming Linux with C. Hopefully no question will be too simple nor too difficult for someone on the list to answer. For anyone learning how to program Linux with C these lists will be a valuable resource to help you in your learning.

David Lloyd has agreed to host a common home page for the linux c programming lists. It is at users.senet.com.au/~lloy0076/linux_c_programming/index.html

The easiest way to become a member of the (dual) lists is to e-mail .

LJ sponsors Atlanta Linux Showcase conference and tutorials

Linux Journal, the monthly magazine of the Linux community, is proud to announce its leading sponsorship role in the 1999 Atlanta Linux Showcase.

The Atlanta Linux Enthusiasts and the USENIX association, in cooperation with Linux International, are pleased to announce the Conference and Tutorials Schedule for the 3rd Annual Atlanta Linux Showcase.

The tutorial program, sponsored and managed by the USENIX Association will feature two days of top rate instruction in the following subjects:

The Conference program will consist of 41 sessions with up to five sessions in each track. Our tracks cover all of the cutting edge topics in the Linux Community today: Distributed Computing, Kernel Internals, Applications, Security, System Administration, and Development. The sessions are led by a top-notch lineup of speakers including Bernie Thompson, Eric Raymond, Phil Hughes, Matthew O'Keefe, Jes Sorensen, Michael Hammel, Miguel de Icaza, Mike Warfield, Steve Oualline, Dirk Hohndel. Full details on the conference program are available online at: www.linuxshowcase.org.

In addition to Jeremy Allison's Keynote, Norm Shryer from AT&T Research will be giving a keynote entitled: "The Pain of Success, The Joy of Defeat: Unix History"-the story of what happened to Unix on the way from Ken Thompson's mind to the marketplace, and how this affects Linux.

ALS activities for attendees include 3 days of free vendor exhibits, freeform birds-of-a-feather sessions, and the listed tutorials, keynotes, and conference sessions.

RMS Software Legislation Initiative

Date: Wed, 15 Sep 1999 04:31:52 +0000 (UTC)

From: Dwight Johnson

Richard Stallman is promoting an initiative to campaign against UCITA and other legislation that is damaging to the free software movement.

Considering the many legislative issues that affect free software, some, like myself, believe there is a need for a Ralph Nader type of organization to both be a watchdog and also lobby legislators to protect the interests of free software.

Richard Stallman has volunteered himself to lead off the initiative and below is his latest correspondence.

Because the free software movement encompasses both commercial and non-commercial interests and Linux International is an association of commercial Linux interests, it is probably not appropriate for Linux International to attempt to serve the watchdog and lobbying function which is needed.

There are two ways Linux International may become involved:

1) Individual companies within Linux International may wish to commit themselves to sponsor the Richard Stallman initiative;

2) Linux International may collectively endorse and sponsor the Richard Stallman initiative.

Richard recognizes that his leadership may be controversial to some and has told me he wants to join with 'open-source' people to support the common cause.

As Linux and free software/open-source solutions move into center stage in the technology arena, the agendas of those who support the older model of intellectual property to oppose the inevitability of this evolution are becoming more sharply defined and dangerous -- they are seeking legislative solutions.

We need to pursue our own agenda and our own legislative solutions and time is of the essence. A cursory search of the Linux Today news archives will reveal that there are several bills on their way through the U.S. Congress right now, in addition to UCITA, which could be disruptive to the free software movement.

I urge the Linux International Board of Directors both collectively and individually to take action and support the Richard Stallman initiative to defend open-source/free software against damaging legislation.

Andover.Net Files Registration for Open IPO

Acton, Mass.--September 17, 1999-- Andover.Net (www.andover.net), a network of Linux/Open Source web sites which include Slashdot.org, today announced that it has filed a Registration Statement on Form S-1 with respect to a proposed initial public offering of 4,000,000 shares of Andover.Net common stock. All 4,000,000 shares are being offered by Andover.Net at a proposed price range of $12 to $15 per share.

Information regarding the OpenIPO process may be obtained through www.wrhambrecht.com. Copies of the preliminary prospectus relating to the offering may be obtained when available through the web site.

Linux Breakfast

New Age Consulting Service, Inc., a network consulting corporation and Tier 2 Internet Service Provider that has been providing corporate Linux solutions for nearly five years, is introducing Linux to Cleveland at a breakfast on September 30, 1999. The Linux Breakfast is designed to educate the quickly expanding Linux market in Cleveland about the exciting commercial applications of the open source operating system and how it increases network efficiency in conjunction with or as an alternative to other network operating systems such as Novell and Microsoft NT server solutions.

NACS.NET's goal is to provide business owners and managers with information that demonstrates how Linux is quickly building a strong hold on the enterprise market and that it is being rolled out in a very strong and well supported manner. Caldera Systems, Inc. and Cobalt Networks, Inc., national leaders in Linux technology, will be presenters at the event.

Caldera Systems, Inc. is the leader in providing Linux-based business solutions through its award winning OpenLinux line of products and services. OpenLinux for business solutions are full-featured, proven, tested, stable and supported. Through these solutions, the total cost of ownership and management for small-to-medium size businesses is greatly reduced while expanding network capabilities.

Cobalt Networks, Inc. is a leading developer of server appliances that enable organizations to establish an online presence easily, cost effectively, and reliably. Cobalt's product lines - the Cobalt Qube, Cobalt Cache, Cobalt RaQ, and Cobalt NASRaQ - are widely used as Internet and Web hosting server appliances at businesses, Internet Service Providers, and educational institutions. Cobalt's solutions are delivered through a global network of distributors, value-added resellers and ISPs. Founded in 1996, Cobalt Networks, Inc. is located in Mountain View, California, the heart of Silicon Valley, with international offices in Germany, Japan, the United Kingdom, and the Netherlands.

The presentation will be held in the Cleveland Flats at Shooters on the Water. A full buffet style breakfast will be offered to all registered attendees.

Franklin Institute Linux web server

Philadelphia, PA -- The Franklin Institute Science Museum has installed a Linux Web server built by LinuxForce Inc. The server is now on line and being used by The Franklin Institute in their Keystone Science Network. The network has been designed to create a professional community of science educators throughout the Eastern half of Pennsylvania.

Christopher Fearnley, Senior VP Technology at LinuxForce Inc., said that "LinuxForce is proud to have built the server and its integrated Linux software that will aid the Keystone Science Network in promoting teacher professional growth." The Network has been designed to promote teacher professional growth through the implementation of K-8 standards-based science kits supported by the application of network technology.

The Web Server built for the program by LinuxForce Inc.'s Hardware Division includes the Debian GNU/Linux operating system. Fearnley commented that the powerful Keystone web server will meet all current requirements and the challenge of any expansion beyond the ten core sites located in school districts throughout the Eastern half of Pennsylvania.

www.linuxforce2000.com

Java EnterpriseBeans: no-cost developer's version + contest

P-P-P-Promotion --- don't start stuttering. If you are on Linux, it's alright for you to laugh! Penguin, ProSyst, PSION: CARESS the Penguin, DOWNLOAD the EJB application server from ProSyst: EnterpriseBeans Server, Developer Edition without any charge and WIN one of three PSION Series 5mx Pro palmtop computers every month!

Download EnterpriseBeans Server, Developer Edition for Linux and register to win! All download registrations and answered questionnaires received by October 15, November 15 and December 15, 1999 will be entered in a drawing to win one of three PSION Series 5mx Pro palmtop computers. The winners will be posted on ProSyst's Web site at www.prosyst.com every month.

WIN again: If you have developed some nice Enterprise JavaBeans or services and you are planning to deploy them, purchase EnterpriseBeans Server, any Server Edition for Linux by December 31, 1999 and get 50% off. You save up to US $5,500.

Linux Links

LinuxPR. From the web page: "Linux PR is a website for organizations to publish press releases to the enormous market that is the Linux community. Linux PR is backed by the resources of Linux Today and is offered at no charge. Journalists from large media organizations can monitor Linux PR as a source for their Linux-related information."

C.O.L.A software news

tsinvest is for the real-time programmed day trading of stocks. "Quantitative financial analysis of equities. The optimal gains of multiple equity investments are computed. The program decides which of all available equities to invest in at any single time, by calculating the instantaneous Shannon probability of all equities..." Freely redistributable, but may not be sold or included in a commercial product.

www2.inow.com/~conover/ntropix

xshipwars game (GPL). Uses the latest Linux joystick driver. Nice-looking lettering and graphics at web site.

Flight Gear flight simulator game (GPL). "A large portion of the world (in Flight Gear scenery format) is now available for download." Development version is 0.7.0; stable version is 0.6.2.

wvDecrypt decrypts Word97 documents (given the correct password). A library version (part of wv library) is also available at www.csn.ul.ie/~caolan/publink/mswordview/development.

hc-cron (GPL) is a modification of Paul Vixie's cron daemon that remembers when it was shut down and catches up on any jobs that were missed while the computer was off. The author is looking for programmers who can take over its further development.
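The catch-up idea can be illustrated with a minimal sketch. This is not hc-cron's code: the function name missed_runs, its parameters, and the fixed-interval schedule are all assumptions made for illustration (real crontab entries are far more flexible). It only shows how a scheduler that records its shutdown time could work out which runs fell inside the downtime.

```python
from datetime import datetime, timedelta


def missed_runs(first_run, interval, last_shutdown, now):
    """Return scheduled times in the window (last_shutdown, now].

    Hypothetical helper: assumes a job that runs at first_run and then
    every `interval` afterwards, which is simpler than a real crontab.
    """
    if interval <= timedelta(0):
        raise ValueError("interval must be positive")

    t = first_run
    if t <= last_shutdown:
        # Skip ahead to the first scheduled time strictly after shutdown.
        steps = (last_shutdown - t) // interval + 1
        t = t + steps * interval

    missed = []
    while t <= now:
        missed.append(t)
        t += interval
    return missed


if __name__ == "__main__":
    # A daily 04:00 job; the machine was off from the evening of
    # September 3 until the morning of September 6.
    runs = missed_runs(
        first_run=datetime(1999, 9, 1, 4, 0),
        interval=timedelta(days=1),
        last_shutdown=datetime(1999, 9, 3, 22, 0),
        now=datetime(1999, 9, 6, 9, 0),
    )
    for run in runs:
        print("catching up job scheduled for", run)
```

A scheduler built this way still has to choose between running a missed job once or once per missed slot. The sketch returns every missed slot and leaves that choice to the caller.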

The Linux Product Guide by FirstLinux is "a comprehensive guide to commercial Linux resources."

suckmt is a multi-threaded version of suck (an NNTP news puller). Its purpose is to make fuller use of dialup modem capacity, to cut down on connect-time charges. It is more of a feasibility study than an application at this point.

Jackal/MEC is a video streaming client/server pair for Linux.

Personal Genealogy Database project

This project is just beginning and needs help in coding. The complete program objectives and wishlist are at the project home page. For more information, subscribe to the development mailing list or email the project manager.

Home Page: www.msn.fullfeed.com/~slambo/genes/
FTP site: none yet; will be announced when code is released.
License: GPL
Development: C++, Qt and Berkeley DB; this may change as development progresses.
Project Manager: Sean Lamb - [email protected]
Mailing List: www.onelist.com/community/genes-devel
Subscribe: blank message to or visit the list home page.

Caldera open-sources Lizard install

Orem, UT - September 7, 1999 - Caldera Systems, Inc. today announced that its award-winning LInux wiZARD (LIZARD) - the industry's first point and click install of Linux - is now available under the Q Public License (QPL) for download from www.openlinux.org.

The LIZARD install was developed for OpenLinux by Caldera Systems Engineers in Germany, and by Troll Tech, a leading software tools development company in Oslo, Norway.

LIZARD makes the transition from Windows to Linux easier for the new user and reduces down time created by command line installation. "We're happy to contribute back to the Open Source community and Linux industry," said Ransom Love, CEO of Caldera Systems, Inc. "We're particularly grateful to Troll Tech for their support of, and contributions to, this effort. LIZARD will help Linux move further into the enterprise as others develop to the technology." "We congratulate Caldera Systems on their bold move of open-sourcing LIZARD," said Haavard Nord, CEO of Troll Tech. "LIZARD is the easiest to use Linux installer available today, and it demonstrates the versatility of Qt, our GUI application framework."

Under the Q Public License, LIZARD may be copied and distributed in unmodified form provided that the entire package, including, but not restricted to, copyright, trademark notices and disclaimers, as released by the initial developer, is distributed. For more information about the Q Public License and distribution options, please visit www.openlinux.org/lizard/qpl.html

Xpresso LINUX 2000

Xpresso LINUX 2000 is a safe, simple, stable computer OS with a full set of programs (Star Office 5.1, WordPerfect 8, Netscape 4.51, Chess and more). Everything you need and all made simple for the Linux user. All on a single CD-ROM with a small pocket-sized manual.

It is based on Red Hat 6 with the KDE 1.1 graphical interface and sells for just UK Pounds 15.95, delivered to your door world-wide.

www.xpresso.org

News from Loki (games and video libraries)

TUSTIN, CA -- September 8, 1999 -- Loki Entertainment Software announces their third Open-Source project, the SDL Motion JPEG Library (SMJPEG).

SMJPEG creates and displays full motion video using an open, non-proprietary format created by Loki. It is based on a modified version of the Independent JPEG Group's library for JPEG image manipulation and freely available source code for ADPCM audio compression. Among its many benefits, SMJPEG allows for arbitrary video sizes and frame-rates, user-tuneable compression levels, and facilities for frame-skipping and time synchronization.

Loki developed SMJPEG in the course of porting Railroad Tycoon II: Gold Edition by PopTop Software and Gathering of Developers. While Loki is contractually bound to protect the publisher's original game code, Loki shares any improvements to the underlying Linux software code with the Open Source community.

Loki's first Open Source project, the SDL MPEG Player Library (SMPEG) is a general purpose MPEG video/audio player for Linux, developed while porting their first title, Civilization: Call to Power by Activision. The second project is Fenris, Loki's bug system based on Bugzilla from the Mozilla codebase.

SMJPEG, SMPEG and Fenris are freely available for download from www.lokigames.com, and are offered under the GNU Library General Public License (LGPL).

About Loki Entertainment Software: Based in Orange County, CA, Loki works with leading game publishers to port their best-selling PC and Macintosh titles to the Linux platform. Loki meets a pent-up need in the Linux community by providing fully-supported, shrink-wrapped games for sale through traditional retail channels. For more information, visit www.lokigames.com.

Tustin, CA. -- September 17, 1999 -- Loki Entertainment Software, in cooperation with Activision, Inc. and the Atlanta Linux Enthusiasts, announces Loki Hack 1999 to be held on October 11 through 13 at the Cobb Galleria Centre in Atlanta in conjunction with the Atlanta Linux Showcase.

Loki Entertainment Software launched the Linux version of Activision's popular strategy game Civilization: Call to Power(TM) in May 1999 to strong reviews. During Loki Hack, up to 30 qualified hackers will have 48 hours in a secure setting to make alterations to the Linux source code for this game. In turn Loki will make available in binary form all resulting work from the contest. Winners of this unique contest will be announced during the Atlanta Linux Showcase. First prize will be a dual-processor workstation (running Linux of course).

Qualified hackers may apply to participate on the Loki web site (above).

New Linux Distribution from EMJ Embedded Systems

Apex, NC -- Using Linux for embedded applications on the world's smallest computer just got easier, thanks to the new EMJ-linux distribution, developed by EMJ Embedded Systems.

EMJ has compiled a small distribution that runs Linux on JUMPtec®'s DIMM-PC/486, saving hardware developers the hours required to modify Linux to run on the world’s smallest PC. The DIMM PC is a full-featured 486 PC the size of a 144-pin memory DIMM.