Linux Gazette, a member of the Linux Documentation Project, is an on-line WWW publication that is dedicated to two simple ideas:

TWDT 1 (text)

TWDT 2 (HTML)

are files containing the entire issue: one in text format, one in HTML. They are provided strictly as a way to save the contents as one file for later printing in the format of your choice; there is no guarantee of working links in the HTML version.

Got any great ideas for improvements? Send your

This page written and maintained by the Editor of Linux Gazette,

Write the Gazette at

Contents:

Date: Sun, 17 Nov 1996 18:49:56 -0600


Subject: a reply type thing...

From: Glenn E. Satan,

> Subject: Xwindows depth
> From: James Amendolagine [email protected]
>
> I have recently been messing with my X server, and have managed
> to get a depth of 16, i.e. 2^16 colors. This works
> really nicely with Netscape, but some programs (doom, abuse, and
> other games) won't work with this many colors. Do
> you know of a fix? I have tried to get X to support multiple
> depths--to no avail. The man page suggests that some
> video cards support multiple depths and some don't. How do I know
> if mine does?
>
> I would really like to see an article on this subject.

I would like to say: yes, please, someone help. I thought maybe a reply would motivate someone a little more to write an article on this.

(All right, a second request for help in this area. Anybody out there with suggestions and/or wanting to write an article? --Editor)

Date: Sun, 01 Dec 1996 00:20:12 +1000


Subject: Quilting and geometry

From: Chris Hennessy,

I liked your comment about quilting being an interest. We tend to forget that people have interests outside of computers in general (and linux in particular).

Just like to say thanks for what is obviously an enormous effort you are putting into the gazette. I'm new(ish) to linux and I find it a great resource, not to say entertaining.

Has anyone suggested an article on the use of Xresources? As I said I'm fairly new and find this a bit confusing... maybe someone would be interested in an example or three?

Oh, and with the quilting and geometry... better make sure it's not the 80x25+1-1 variety.

(Thanks, LG is a lot of work, as well as a lot of fun. And yes, I do have a life outside of Linux. Anyone interested in writing about Xresources? Thanks for writing. It's always nice to know we are attracting new readers. --Editor)

Date: Wed, 4 Dec 1996 13:33:26 +0200 (EET)


Subject: security issue!

From: Arto Repola,

Hi there!

I was wondering if you could write something in the Gazette about Linux security... how to improve it, how to set up a firewall, shadow password systems, etc.?

I'm considering building my own Linux server and I would really like to make it as secure as possible!

Nothing more this time!

http://raahenet.ratol.fi/~arepola

(And another great idea for an article. Any takers? --Editor)

Date: Wed, 04 Dec 1996 08:08:06 -0700


Subject: Reader Response

From: James Cannon,

Organization: JADS JTF

Great Resource,

I really like the resource Linux offers new users. I have already applied a few tricks to my PC. I wish someone would explain how to use the GNU C/C++ compiler with Linux. It is a tool resting on my hard drive. With commercial compilers, there is a programming environment that links libraries automatically. Are there any tricks to command-line C/C++ programming with Linux?? Stay online!

James Cannon

(Thanks for the tip. Online is the best place to be. Anyone out there got some C++ help for this guy? --Editor)

Date: Mon, 9 Dec 1996 23:27:21 +0000 (GMT)


Subject: Linux InfraRed Support

From: Hong Kim,

Hi,

I have been so far unsuccessful in finding information for InfraRed support on Linux.

I am particularly interested in hooking up Caldera Linux on a Thinkpad 560 using Extended Systems JetEye Net Plus. Caldera on Thinkpad I can handle but the JetEye allows connection to ethernet or token ring networks via IR.

My searches of Linux Resources page come up negative. I have posted to USENET and also emailed any web master that has any mention of ThinkPad or IR on their pages. Still no answer.

Can you help me to find information. If I am successful, I would be willing to write an article about it.

Hong

(I have sent your question on to Linux Journal's Tech Support Column. Answers from this source can be slow, as the author contacts the companies involved. Sounds like you have covered all the bases in your search -- can anyone out there help him? If you write the article, I'll be happy to post it in LG so the next person who needs this information will have a quicker answer. --Editor)

Date: Thu, 5 Dec 96 13:00:01 MET


Subject: Linux networking problem ...

From:

Hi there,

First I have to apologize for writing to this address with my problem, but I don't know where to search for an answer, and the university's network is so damned slow that surfing through the net searching for an answer is no fun. Another reason is that I've got no access to Usenet... which means I can't post in comp.os.linux.networking... 8-((

I tried to find a news server near Germany which allows posting without using that damned -> identd <- but found none. Maybe you know where to find a list of (free) news servers?

Here's the problem:

I want to set up Linux in our university's LAN but ran into problems, because the LAN is VINES-IP based, so normal TCP/IP packet drivers won't work. The admin says I need a driver which can tunnel the normal Linux TCP/IP packets inside those VINES-IP packets, so that they can be sent over the LAN to the box which has the Internet connection....

Maybe you know if such a thing is available and/or where I can get it. Or maybe you can give me some email addresses of people with real knowledge about Linux (maybe even Linus T. himself) and its drivers.

Hope you can help me 8-))

Thanks in advance

Stefan 8-))

(I've sent your problem on to Linux Journal's Technical Support column and will post it in Linux Gazette's Mailbag next month. Neither one will give you a fast answer. I did a search of LG, LJ and SSC's Linux Resources using VINES as the keyword. I found only one entry, from an author's biography. It's old -- March 1995 -- and the guy was in the Marine Corps then, so it may or may not be a good address. Anyway, here's what it said:

"Jon Frievald ... manages Wide Area Network running Banyan VINES. ... e-mail to [email protected]"

You might give him a try for ideas.

For faster access to LG have you tried any of LG's mirror sites in Germany:

- http://www.cs.uni-dusseldorf.de/~stein/lg

- http://vina12.va.htw-dresden.de/lg

- http://www.fokus.gmd.de/linux/lg

Please note that mirror sites won't help search time -- all searching is done on the SSC site. --Editor)

Date: Sat, 30 Nov 1996 20:35:17 -0600 (CST)


Subject: Re: Slang Applications for Linux

From: Duncan Hill,

To: Larry Ayers,

On Sat, 30 Nov 1996, Duncan Hill wrote:

Greetings. I was reading your article in the Linux Gazette, and thought you might be interested to know that Lynx also has its own web site now at:

http://lynx.browser.org/

It's up to version 2.6 now, and is rather nice, especially with slang included :)

Duncan Hill, Student of the Barbados Community College

(Thanks for the tip! I really appreciate responses from readers; confirms that there are really readers out there! --Larry Ayers)

Date: Sat, 30 Nov 96 16:42:58 0200


Subject: Linux Gazette

From: Paul Beard,

Hello from Zimbabwe.

Very nice production. Keep up the good work.

Regards,

Paul Beard.

(Thanks. --Editor)

Date: Thu, 28 Nov 1996 23:54:38 +0000


Subject: Thanks!

From: Russ Spooner,

Organization: Kontagx

Hi,

I have been an avid reader of Linux Gazette since its inception! I would just like to say that it has helped me a lot and that I am really glad that it has become more regular :-)

The image you have developed has come a long way, and it is now one of the best organized sites I visit!

Also I would like to thank you for the link to my site :-) it was a real surprise to "see myself up in lights" :)

Best regards!

Russ Spooner, http://www.pssltd.co.uk/kontagx

Date: Thu, 28 Nov 1996 12:49:12 -0500


Subject: LG Width

From: frank haynes,

Organization: The Vatmom Organization

Re: LG page width complaint, LG looks great here, and I don't think my window is particularly large. Keep up the fine work.

--Frank, http://www.mindspring.com/~fmh

(Good to hear. --Editor)

Date: Fri, 29 Nov 1996 10:30:32 +0000


Subject: LG #12

From: Adam D. Moss,

Nice job on the Gazette, as usual. :)

Adam D. Moss / Consulting

( :-) --Editor)

Date: Tue, 3 Dec 1996 12:55:18 -0800 (PST)


Subject: Re: images in tcsh article

From: Scott Call,

Most of the images in the TCSH article in issue 12 are broken

-Scott

(You must be looking at one of the mirror sites. I inadvertently left those images out of the issue12 tar file that I made for the mirror sites. When I discovered it yesterday, I made an update file for the mirrors. Unfortunately, I have found that not all the mirrors are willing to update LG more than once a month, so my mistakes remain until the next month. Sorry for the inconvenience and thanks for writing. --Editor)

Date: Fri, 06 Dec 1996 21:21:00 +0600


Subject: 12? why can you make so bad distributive?????????????

From: Sergey A. Panskih,

i ftpgeted lg12 and untar.gz it as made with lg11. lg11 was read as is: with graphics and so, but lg12... all graphics was loosed. i've verified hrefs and found out that href was written with principial errors : i must copy all it to /images in my httpd server!!!!

this a pre-alpha version!!!

i can't do so unfixed products!!!

i'm sorry, but you forgotten how make a http-ready distrbutions... :)

Sergey Panskih

P.S. email me if i'm not true.

(I'm having a little trouble with your English and don't quite understand what "all graphics was loosed" means. You shouldn't have to copy anything anywhere: what are you copying to /images?

There is one problem I had that may apply to you. Are you throwing away previous issues and only getting the current one? If so, I apologize most humbly. I was not aware until this month that people were doing this, and when I made the tar file I included only new files and those that had been changed since the last month. To correct this problem I put a new tar file on the ftp site called standard_gifs.html. It's not that I've forgotten how to make http-ready distributions, it's that I'm just learning all the complexities. In the future I will make the tar file include all files needed for the current single issue, whether they were changed or not.

I am very sorry to have caused you such problems and distress. --Editor)

Date: Mon, 02 Dec 96 18:13:48


Subject: spiral trashes letters

From:

It's clever and pretty, but the spiral notebook graphic still trashes the left edge of letters printed in the issue 12 Mailbag.

Problem occurs using OS/2's Web Explorer version 1.2 (comes with OS/2 Warp 4.0). Problem does NOT occur using Netscape 2.02 for OS/2 beta 2 (the latest beta for OS/2).

Problem occurs even while accessing www.ssc.com/lg

Jep Hill

(Problem will always occur with versions of either Microsoft Explorer or Netscape before 2.0. It is caused by a bug in TABLES that was fixed in the 2.0 versions. I don't have access to OS/2's Web Explorer, so I can only guess that it's the same problem. I'd recommend always using the latest version of your browser. --Editor)

Date: Mon, 9 Dec 1996 10:14:04 -0800 PST

Subject: Background

From:

I run at a resolution of 1152x846 (a bit odd I suppose) and although the Gazette pages look very nice indeed, it is a bit hard to read when I have my Netscape window maximized. The bindings part of the background seems to be optimized for a width of 1024 and thus tiles over again on the right side of the page. This makes reading a bit difficult as some of the text now overlaps the bindings on the far right.

I'm not sure if that's a great description of the problem, but I can easily make you a screenshot if you want to see what I mean.

Anyhow, this is only a minor annoyance--certainly one I'm willing to live with in order to read your great 'zine. :)

Ray Van Dolson -=-=- Bludgeon Creations (Web Design) - DALnet #Bludgeon -- http://www.shocking.com/~rayvd/

(A screen shot won't be necessary. When the webmaster first put the spiral out there, the same thing happened to me -- I use a large window too, but not as large as yours. He was able to expand it to fix it at that time. I notified him of your problem, but I'm not sure whether he can expand it even more. We'll see. Glad it's a problem you can live with. :-) --Editor)

Date: Sat, 7 Dec 1996 22:16:55 +0100 (MET)


Subject: Problem with Printing.

From:

Hi,

This is just to let you people know, that there might be a slight problem. I want to point out and make it perfectly clear that this is NOT a complaint. I feel perfectly satisfied with the Linux Gazette as it is.

However sometimes I prefer to have a printed copy to take with me. Therefore I used to print the LG. from Netscape. I'm using the new 3.1 version now. With the last two issues I have difficulties doing so. All the pages with this new nice look don't print too well. The graphics show up at all the wrong places and only one page is printed on the paper. The rest is swallowed. Did you ever try to print it?

I had to use an ancient copy of Mosaic, that doesn't know anything about tables, to print these pages. They don't look too good this way too, and never did. I know this old Mosaic is buggy. At least it doesn't swallow half of the stuff. This could as well be a bug in Netscape. I know next to nothing about html.

Anyway, have fun.

Regards Friedhelm

(No, I don't try to print it, but will look into it. Are you printing out "TWDT" from the TOC or trying to do it page by page? It is out there in multi-file format and so if you print from say the Front Page, the front page is all you'll get. "TWDT" is one single file containing the whole issue, and the spiral and table stuff are removed so it should print out for you okay. Let me know if this is already what you are printing, so I'll know where to look for the problem. --Editor)

Date: Wed, 18 Dec 1996 04:02:37 +0200


Subject: Greetings

From: Trucza Csaba, [email protected]

To: [email protected]

Well, Hi there!

Amazing. I've just read the Linux Gazette from the first issue to this one, the 12th (actually I read just the first 7 issues through, because the others were not downloaded correctly).

It's 4 in the morning and I'm enthusiastic. I knew Linux was good, I'm using it for a year (this is because of the lack of my english grammar, I mean the previous sentence, well...), so I knew it was good, but I didn't expect to see something so nice like this Gazette.

It's good to see that there are a WHOLE LOT of people with huge will to share.

I think we owe You a lot of thanks for starting it.

Merry Christmas, a Happy New Year, and keep it up!

Trucza Csaba, Romania

(Thanks, I will. -- Editor)

Date: Mon, 23 Dec 1996 12:16:30 -0800 (PST)


Subject: lg issue 12 via ftp?

From: [email protected] (Christian Schwarz)

I just saw that issue #12 is out and accessible via WWW, but I can't find the file on your ftp server nor on any mirrors.

(Sorry for the problems. We changed web servers and I went on vacation. Somehow in the web server change, some of the December files got left behind. I didn't realize until today that this had happened. Sorry for the inconvenience. --Editor)

Date: Fri, 20 Dec 1996 00:31:45 -0500


Subject: Great IDEA

From: Pedro A Cruz, [email protected]

Hi:

I visited your site recently and was astounded by the wealth of information there. I have lots of bandwidth to read your site. I noticed that you have issues for download. I think it would be a great service to the LINUX community if you considered publishing a CD-ROM (maybe from Walnut Creek CDROM) as a subscription item.

pedro

(Yes, that is a good idea. I'll talk to my publisher about it. --Editor)

Date: Sun, 22 Dec 1996 20:24:51 -0600


Subject: Linux as router

From: Robert Binz, [email protected]

I have found myself trying to learn how to use Linux as a Usenet server to provide news feeds to people, and to use Linux as an IRC server. Information on these topics is hard to come by. If you have any sources on these subjects that you can point me to, I would be most appreciative.

But anyhow, I have found an article in SysAdmin (Jan 96 (5.1)) titled Using Linux as a Router, by Johnathon Feldman. Is it possible to reprint this article or get the author to write a new one for you?

TIA

Robert Binz

(I'll look into it. In the meantime, I've forwarded your letter to a guy I think may be able to help you. --Editor)

Date: Fri, 13 Dec 1996 03:57:09 -0500 (EST)


Subject: Correction for LG #12

From: Joe Hohertz, [email protected]

Organization: Golden Triangle On-Line

Noticed the following in the News section.

A couple of new Linux Resources sites:

(Seems I had Joe's address wrong. Sorry. --Editor)

Date: Tue, 17 Dec 1996 01:19:43 -0500


Subject: One-shot downloads

From: David M. Razler, [email protected]

Folks:

While I realize that the economies of the LINUX biz require that there be some method of making money even on the distribution of free and "free" software, I have a request for them of us who 1) are currently scraping for the cash for our Internet accounts and 2) would like to try LINUX.

How about a one-shot download? I mean, oh, everything needed to establish a LINUX system in one ZIP'ed (or tar/gz'd, though zip is a more compatible format) file, one for each distribution?

I'm currently looking to establish LINUX on my "spare" PC, a 386DX-16 w/4 meg and a scavenged 2500MB IDE drive, etc. It will be relatively slow, limited, lacks a CD-rom drive, but it's free, since the machine is currently serving as a paperweight.

I could go out and buy a used CD-rom for the beast, or run a bastard connection from my primary, indispensable work machine and buy the CDs. But I am currently disabled, and spending for these things has to be weighed against other expenses (admittedly, I am certainly lucky and not destitute; it would just be better).

I could get a web robot and download umpteen little files, puzzle them out and put them together, though the load on your server would be higher.

Or, under my proposed system, I could download Distribution Code, Documents, and Major accessories in one group, then go back for the individual bits and pieces I need to build my system.

Again, I realize that running your site costs money, and that people make money, admirably little money, distributing LINUX on CDs, with the big bucks (grin) of LINUX coming in non-free software, support and book sales.

But if the system is to spread, it would help to provide a series of one-shot downloads, possibly available only to individuals (I believe one could copyright the *package* and require someone downloading to agree to use it only on a single non-commercial system and not to redistribute, but I am not an intellectual properties lawyer), to make life easier for them of us who need to learn a UNIX-style system and build one on the cheap.

dmr

More 2¢ Tips!

Another 2cent Script for LG

Hello LG people,

Here comes a short script which will check from time to time that there is enough free space available on anything which shows up in mount (disks, cdrom, floppy...).

If space runs out, a message is printed to the screen every X seconds and one mail message per filled device is sent.

Enjoy!

Hans

#!/bin/sh
#
# Since I got mysterious error messages during compile when
# tmp files filled up my disks, I wrote this to get a warning
# before disks are full.
#
# If this stuff saved your servers from exploding,
# send praising email to [email protected].
# If your site burns down because of this, sorry but I
# warned you: no comps.
# If you really know how to handle sed, please forgive me :)
#
# Shoot and forget: Put 'check_hdspace &' in rc.local.
# Checks for free space on devices every $SLEEPTIME sec.
# You even might check your floppies or tape drives. :)
# If free space is below $MINFREE (kb), it will echo a warning
# and send one mail for each triggering device to $MAIL_TO_ME.
# If there is more free space than trigger limit again,
# mail action is also armed again.
#
# TODO: Different $MINFREE for each device.
#       Free /*tmp dirs securely from old junk stuff if no more free space.

DEVICES='/dev/sda2 /dev/sda8 /dev/sda9'  # device; put your disks here
MINFREE=20480                            # kb; warn below this
SLEEPTIME=10                             # sec; sleep between checks
MAIL_TO_ME='root@localhost'              # fool; to whom mail warning

# ------- no changes needed below this line (hopefully :) -------

MINMB=$((MINFREE / 1024))   # yep, we are strict :)
ISFREE=0
MAILED=""

while true; do
    DF="`/bin/df`"
    for DEVICE in $DEVICES ; do
        # $DF is echoed unquoted on purpose: it flattens df's output onto
        # one line, then sed keeps the "available" column after $DEVICE.
        ISFREE=$(echo $DF | sed "s#.*$DEVICE *[0-9]* *[0-9]* *##" | sed 's# .*##')
        # Skip devices that are not mounted (no numeric value found)
        case "$ISFREE" in
            ''|*[!0-9]*) continue ;;
        esac
        if [ "$ISFREE" -le "$MINFREE" ] ; then
            ISMB=$((ISFREE / 1024))
            echo "WARNING: $DEVICE only $ISMB mb free." >&2
            #echo "more stuff here" >&2
            printf '\a\a\a\a'
            if [ -z "`echo $MAILED | grep -w $DEVICE`" ] ; then
                echo "WARNING: $DEVICE only $ISMB mb free.
(Trigger is set to $MINMB mb)" \
                | mail -s "WARNING: $DEVICE only $ISMB mb free!" $MAIL_TO_ME
                MAILED="$MAILED $DEVICE"
                # put further action here like cleaning
                # up */tmp dirs...
            fi
        elif [ -n "`echo $MAILED | grep -w $DEVICE`" ] ; then
            # Remove mailed marker if enough disk space
            # again. So we are ready for new mailing action.
            MAILED="`echo $MAILED | sed s#$DEVICE##`"
        fi
    done
    sleep $SLEEPTIME
done
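The sed pipeline in the script above picks the "available" column by its position in df's output, which can misfire when df wraps a long device name onto a second line. As an alternative (a swapped-in technique, not Hans's method), the column can be pulled out with awk from POSIX-format output: `df -P -k` keeps each filesystem on one line and reports sizes in 1024-byte blocks. The function name `free_kb` is hypothetical; it reads df output on standard input so it can be tried on canned text.

```shell
#!/bin/sh
# free_kb DEVICE: print the "Available" column (in kb) for DEVICE,
# reading POSIX-format df output (df -P -k) on standard input.
# Prints nothing if DEVICE is not listed (e.g. not mounted).
# The match is exact, so /dev/sda2 does not also match /dev/sda20.
free_kb() {
    awk -v dev="$1" '$1 == dev { print $4; exit }'
}

# Demo on canned df output; prints 1500000.
printf '%s\n' \
    'Filesystem 1024-blocks Used Available Capacity Mounted on' \
    '/dev/sda2 1000000 990000 10000 99% /' \
    '/dev/sda8 2000000 500000 1500000 25% /home' | free_kb /dev/sda8

# Live use inside the loop would look like:
#   ISFREE=`df -P -k | free_kb $DEVICE`
```

An empty result plays the same role as the non-numeric check in the loop: the device is simply skipped.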

Console Trick Follow-up

Just finished reading issue #12, nice work.

A followup to the "Console Tricks" 2-cent tip:

What I like to do is have a line in /etc/syslog.conf that says:

*.* /dev/tty10

That sends all messages to VC 10, so I can see what's going on whether I'm in X or text mode. Very useful IMHO.
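syslogd reads its configuration at startup, so after adding the line it has to be told to re-read it, conventionally with a SIGHUP. A minimal sketch, assuming the daemon records its PID in /var/run/syslogd.pid (common for the Linux sysklogd, but check your system); the function name `add_console_log` is hypothetical, and it takes the config and PID file paths as arguments so it can be tried on a scratch copy first. It writes a TAB between selector and action, the traditional separator.

```shell
#!/bin/sh
# add_console_log CONF [PIDFILE]: append the "everything to VC 10" rule
# to CONF unless it is already there, then ask syslogd to reload.
# Assumption: syslogd writes its PID to /var/run/syslogd.pid.
add_console_log() {
    conf=$1
    pidfile=${2:-/var/run/syslogd.pid}
    rule=$(printf '*.*\t/dev/tty10')     # selector, TAB, action
    # Append only if an identical line isn't already present
    if ! grep -qxF "$rule" "$conf" 2>/dev/null; then
        printf '%s\n' "$rule" >> "$conf"
    fi
    # syslogd re-reads its configuration when it receives SIGHUP
    if [ -r "$pidfile" ]; then
        kill -HUP "$(cat "$pidfile")"
    fi
}

# Usage (as root): add_console_log /etc/syslog.conf
```

Running it twice leaves a single copy of the rule, so it is safe to drop into a setup script.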

-- Elliot, http://www.redhat.com/

GIF Animations

I too thought WhirlGIF (Graphics Muse, issue 12) was the greatest thing since sliced bread (well, aside from PNG) when I first discovered it, but for creating animations it's considerably inferior to Andy Wardley's MultiGIF. The latter can specify tiny sprite images as parts of the animation, not just full images. For my PNG-balls animation (see http://quest.jpl.nasa.gov/PNG/), this resulted in well over a factor-of-two reduction in size (577k to 233k). For another animation with a small, horizontally oscillating (Cylon eyes) sprite, the savings were more than a factor of 20(!).

MultiGIF is available as source code, of course. (And I had nothing to do with it, but I do find it darned handy.)

Regards,

Greg Roelofs, http://pobox.com/~newt/

Newtware, Info-ZIP, PNG Group, U Chicago, Philips Research, ...

Re: How to close and reopen a new /var/adm/messages file

Regarding the posting in issue #12 of your Gazette on how to back up the current messages file and recreate it, here is an alternative method...

Place the lines at the end of this message in a shell script (/root/cron/swaplogs in this example). Don't forget to make it +x! Execute it with 'sh scriptname', or by adding the following lines to your (root's) crontab:

# Swap logfiles every day at 1 am, local time
0 01 * * * /root/cron/swaplogs

The advantage of this method over renaming the logfile and creating a new one is that syslogd does not need to be restarted.

#!/bin/sh
cp /var/adm/messages /var/adm/messages.`date +%d-%m-%y_%T`
cat /dev/null >/var/adm/messages
cp /var/adm/syslog /var/adm/syslog.`date +%d-%m-%y_%T`
cat /dev/null >/var/adm/syslog
cp /var/adm/debug /var/adm/debug.`date +%d-%m-%y_%T`
cat /dev/null >/var/adm/debug
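The three copy-and-truncate pairs in that script follow one pattern, so they can be folded into a loop. A minimal sketch; `LOGDIR`, `STAMP`, `swaplog` and the list of log names are illustrative assumptions to adjust. Truncating in place keeps the same file (and inode) that syslogd already has open, which is why no restart is needed; the trade-off is that anything logged between the `cp` and the truncation is lost.

```shell
#!/bin/sh
# Copy each log to a date-stamped file, then empty the original in place.
# Truncating (rather than moving) keeps syslogd's open file handle valid.
LOGDIR=${LOGDIR:-/var/adm}          # assumption: where the logs live
STAMP=`date +%d-%m-%y_%T`

swaplog() {
    # $1: log file path
    [ -f "$1" ] || return 0         # skip logs that don't exist
    cp "$1" "$1.$STAMP" && : > "$1"
}

for name in messages syslog debug; do
    swaplog "$LOGDIR/$name"
done
```

Computing `STAMP` once also gives all three copies the same suffix, whereas the original calls `date` separately for each file.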

How to truncate /var/adm/messages

> In answer to the question:
>
> > What is the proper way to close and reopen a new /var/adm/messages
> > file from a running system?
>
> Step one: rename the file. Syslog will still be writing in it after
> renaming, so you don't lose messages. Step two: create a new one. After
> re-initializing syslogd it will be used. Just re-initialize.
>
> 1. mv /var/adm/messages /var/adm/messages.prev
> 2. touch /var/adm/messages
> 3. kill -1 pid-of-syslogd
>
> This should work on a decent Unix(like) system, and I know Linux is one of them.

This is NOT a proper way to truncate /var/adm/messages.

It is better to do:

Best of regards,

Eje Gustafsson, System Administrator

THE AERONAUTICAL RESEARCH INSTITUTE OF SWEDEN

Info-ZIP encryption code

This is a relatively minor point, but Info-ZIP's Zip/UnZip encryption code is *not* DES as reported in Robert Savage's article (LG issue 12). It's actually considerably weaker, so much so that Paul Kocher has published a known-plaintext attack (the existence of which is undoubtedly the reason PKWARE was granted an export license for the code). While the encryption is good enough to keep your mom and probably your boss from reading your files, those who desire *real* security should look to PGP (which is also based on Info-ZIP code, but only for compression).

And while I'm at it, Linux users will be happy to learn that the upcoming releases of UnZip 5.3 and Zip 2.2 will be noticeably faster than the current publicly released code. In Zip's case this is due to a work-around for a gcc bug that prevented a key assembler routine from being used--Zip is now 30-40% faster on large files. In UnZip's case the improvement is due to a couple of things, one of which is simply better-optimized CRC code. UnZip 5.3 is about 10-20% faster than 5.2, I believe. The new versions should be released in early January, if all goes well. And then... we start working on multi-part archives. :-)

Greg Roelofs, http://pobox.com/~newt/

Newtware, Info-ZIP, PNG Group, U Chicago, Philips Research, ...

Kernel Compile Woes

Greetings. Having been through hell after a recompile of my kernel, I thought I'd pass this on.

It all started with me compiling a kernel for Java binary support. Who told me to do that? Somehow I think I got experimental code in, which was even worse :> Anyway, it resulted in a crash, and I haven't been able to recompile since then.

Well, after several cries for help, and trying all sorts of stuff, I upgraded binutils to 2.7.0.3, and told the kernel to build elf support and in elf format, and hey presto. I'd been wrestling with the problem for well over a week, and every time, I'd get an error. Unfortunately, I had to take out sound support, so I'm going to see if it'll add back in.

I have to say thank you to the folks on the linux-kernel mailing list at vger.rutgers.edu. I posted there once, and had back at least 5 replies in an hour. (One came back in 10 minutes).

As for the LG, it looks very nice seen through Lynx 2.6 (no graphics to get messed up :>). I mainly love the Weekend Mechanic and the 2 cent tips. Perhaps one day I'll contribute something.

Duncan Hill, Student of the Barbados Community College http://www.sunbeach.net/personal/dhill/dhill.htm http://www.sunbeach.net/personal/dhill/lynx/lynx-main.html

Letter 1 to the LJ Editor re Titlebar

I finally used the following script:

if [ ${SHELL##/*/} = "ksh" ] ; then
    if [[ $TERM = x"term" ]] ; then
        HOSTNAME=`uname -n`
        label () { echo "\\033]2;$*\\007\\c"; }
        alias stripe='label $LOGNAME on $HOSTNAME - ${PWD#$HOME/}'
        cds () { "cd" $*; eval stripe; }
        alias cd=cds
        eval stripe
    fi
fi

I don't use vi, so I left out that functionality.

The functional changes I made are all in the arguments to the echo command. The changes are to use \\033 rather than what was shown in the original tip as ^[, to use \\007 rather than ^G, and to terminate the string with \\c rather than use the option -n.

On AIX 4.1, the command "echo -n hi" echoes "-n hi"; in other words, -n is not a portable command-line option to the echo command. I tested the above script on AIX 3.2, AIX 4.1, HPUX 9.0, HPUX 10.0, Solaris 2.4 and Solaris 2.5. I'm still trying to get Linux and my Wintel box mutually configured, so I haven't tested it on Linux.
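Where `printf` is available, it sidesteps both the `-n` and `\c` questions: it never appends a newline unless the format asks for one, and it interprets octal escapes such as `\033` and `\007` in its format string itself. A sketch of the same `label` function under that assumption, using the xterm "set window title" sequence (ESC ] 2 ; text BEL) that the script above already sends:

```shell
#!/bin/sh
# label TEXT...: set the xterm title bar to TEXT.
# ESC ] 2 ; text BEL is the xterm "set window title" sequence.
# The text goes in through %s, so backslashes in it are not interpreted.
label() {
    printf '\033]2;%s\007' "$*"
}

label "$LOGNAME on $(uname -n) - $PWD"
```

Passing the text as a `%s` argument rather than embedding it in the format string also keeps a stray `%` in a directory name from being taken as a conversion.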

I have noticed a problem with this script. I use the rlogin command to log in to a remote box. When I exit from the remote box, the caption is not updated, and still shows the hostname and path that was valid just before I exited. I tried adding

exits () { "exit" $*; eval stripe; }
alias exit=exits

and

rlogins () { "rlogin" $*; eval stripe; }
alias rlogin=rlogins

Neither addition updated the caption to the host/path returned to. Any suggestions?

Roger Booth, [email protected]

Letter 2 to the LJ Editor re Titlebar

Some further clarification is needed with respect to the X Term Titlebar Function tip in the Linux Gazette Two Cent Tips column of the January 1997 issue. With regard to the -print option to find, Michael Hammel says, "Linux does require this." This is yet another example of "Your mileage may vary." Some versions of Linux do not require the -print option. And, although Solaris may not, SunOS 4.1.3_U1 and 4.1.4 do require the -print option. Also, if running csh or tcsh, remember to escape wildcards in the file specification (e.g. find ./ -name \*txt\* ) so that the shell doesn't attempt to expand them.
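A form that behaves the same on all of these systems is to quote the pattern and always give `-print` explicitly. POSIX find prints matches by default when no action is given, so the extra `-print` is harmless where it isn't required, and single quotes work in sh, ksh, csh and tcsh alike. The function name `find_txt` is only for illustration:

```shell
#!/bin/sh
# find_txt DIR: list files under DIR whose names contain "txt".
# The pattern is quoted so find, not the shell, expands the wildcards,
# and -print is given explicitly for versions that don't default to it.
find_txt() {
    find "$1" -name '*txt*' -print
}

# Usage: find_txt .
```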

Second, for those tcsh fans out there, here is an xterm title bar function for tcsh.

NOTE: This works on Slackware 3.0 with tcsh version 6.04.00, under the tab, fv, and OpenLook window managers. Your mileage may vary.

# ^[ and ^G below stand for literal ESC and Ctrl-G characters.
if ( $TERM == xterm ) then
    set prompt="%h> "
    alias cwdcmd 'echo -n "^[]2;`whoami` on ${HOST} - $cwd^G^[]1;${HOST}^G"'
    alias vi 'echo -n "^[]2;${HOST} - editing file-> \!*^G" ; vim \!* ; cwdcmd'
    alias telnet '/bin/telnet \!* ; cwdcmd'
    alias rlogin '/usr/bin/rlogin \!* ; cwdcmd'
    cwdcmd
else
    set prompt="[%m]%~% "
endif

PPP redialer script--A Quick Hack

This is the way I do it, but don't use it if your area has regulations about redialing the same phone numbers over and over:

#!/bin/sh
# A quick hack for redialing with ppp by
# Tries 2 numbers sequentially until connected
# Takes 1 cmdline parm, the interface (ppp0, ppp1...)
# You need 2 copies of the ppp-on script (here called modemon{1,2}) with
# different telephone numbers for the ISP. These scripts should be slightly
# customized so that the passwd is _not_ written in them, but is taken
# separately from the user in the main (a.k.a. this) script.
# Here's how (from the customized ppp-on a.k.a. modemon1):
# ...
# TELEPHONE=your.isp.number1  # Then make a copy of this script -> modemon2
#                             # and change this to your.isp.number2
# ACCOUNT=your.account
# PASSWD=$1                   # This gets the passwd from the main script.
# ...
# /sbin/ifconfig must be user-executable for this hack to work.

wd1=1                             # counter start
stty -echo                        # echo off
printf 'Password: '               # for the ISP account
read wd2
stty echo                         # back on
echo
echo "Trying..."
echo 'ATE V1 M0 &K3 &C1 ^M' > /dev/modem   # modem init, change as needed
/usr/sbin/modemon1 "$wd2"         # first try
flag=1                            # locked
while true; do                    # just keep on going
    if [ "$flag" = 1 ]; then      # locked?
        bar=$(ifconfig | grep -c "$1")     # check for a link
        if [ "$bar" = 1 ]; then            # connected?
            echo "Connected!"              # if so, then
            exit 0                         # get outta here
        else
            foo=$(ps ax)                   # pppd already running?
            blaat=$(echo $foo | grep "/usr/sbin/pppd")
            if [ "$blaat" = "" ]; then     # if not, then
                flag=0                     # unset lock
            fi
        fi
        sleep 1                            # don't spin the CPU while waiting
    else                                   # no lock, ready to continue
        wd1=$((wd1 + 1))
        echo "Trying again... $wd1"
        if [ $((wd1 % 2)) -eq 1 ]; then    # this modulo test does the
            /usr/sbin/modemon1 "$wd2"      # switching between the 2
        else                               # numbers we are using
            /usr/sbin/modemon2 "$wd2"
        fi
        flag=1                             # locked again
    fi
done                                       # All done!

There. Customize as needed and be an excellent person. And DON'T break any laws if redialing is illegal in your area!
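The same alternate-and-retry idea can be packaged as a function with an upper limit on attempts, which also helps you stay within local redial rules. This is a hedged sketch, not Mark's script: the name redial, the REDIAL_WAIT variable and the assumption that each dial command returns while pppd negotiates in the background are all hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: dial with each command in turn (alternating between
# them) until the check command succeeds, giving up after MAX attempts.
# Usage: redial MAX CHECK_CMD DIAL_CMD1 [DIAL_CMD2 ...]
# Each command string is word-split, so it may carry arguments.
redial() {
    max=$1 check=$2
    shift 2
    [ $# -gt 0 ] || return 1            # need at least one dial command
    try=0
    while :; do
        for dial in "$@"; do
            if [ "$try" -ge "$max" ]; then
                echo "Giving up after $max attempts" >&2
                return 1
            fi
            try=$((try + 1))
            echo "Attempt $try"         # don't echo $dial: it may hold a password
            $dial || echo "dial command failed" >&2
            sleep "${REDIAL_WAIT:-30}"  # give pppd time to bring the link up
            if $check; then
                echo "Connected!"
                return 0
            fi
        done
    done
}
```

For example, redial 10 'ifconfig ppp0' "/usr/sbin/modemon1 $pw" "/usr/sbin/modemon2 $pw", assuming $pw was read as in the script above and that ifconfig exits non-zero while ppp0 does not exist yet.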

Mark

TABLE tags in HTML

G'day,

I was just browsing through the mailbox and noticed your reply to a user about HTML standards compliance and long download times. You replied that you put the spiral image (a common thing these days) inside a <TABLE>.

I hope you are aware that a browser cannot display any of the contents of a <TABLE> until it has received the </TABLE> tag (whatever the browser or version; it is a limitation of the tag itself), because the browser cannot run its layout algorithm until it has received all of the <TR> and <TD> tags, and it can't be sure of that until </TABLE> comes through. I have seen many complex sites using many images. Thankfully they at least used the HEIGHT and WIDTH attributes on those images to tell the browser how big each image would be, so it didn't have to download the image to find out. Still, putting everything in a table nullifies much of the speed that users want.

A solution I often offer the HTML designers who work under me is a <DL><DD> combination. This doesn't strictly fit the HTML DTD (certain elements are not allowed in a <DL>), and since I use an editor that will not allow illegal HTML, I can't do it myself without going through a back door, which I consider bad quality. The downside, of course, is that you don't know what FONT size the user has set in the browser, and the font size affects the indentation width of the <DD> element. But if your spiral image is not too wide, that may not matter. The plus of <DL><DD> is that the page can be displayed as it comes down (again, provided the developer uses the HEIGHT and WIDTH attributes on *ALL* images, so that the browser doesn't have to pause its display to fetch an image and work out how to lay out the text around it).

Michael O'Keefe

Text File undelete

Here's a trick I've had to use a few times.

Desperate person's text file undelete.

If you accidentally remove a text file, for example some email or the results of a late-night programming session, all may not be lost. If the file ever made it to disk, i.e. it was around for more than 30 seconds, its contents may still be in the disk partition.

You can use the grep command to search the raw disk partition for the contents of the file.

For example, I recently deleted a piece of email by accident. So I immediately ceased any activity that could modify that partition: in this case I just refrained from saving any files or doing any compiles. On other occasions, I've actually gone to the trouble of bringing the system down to single-user mode and unmounting the filesystem.

I then used the egrep command on the disk partition. In my case the email message was in /usr/local/home/michael/, and from the output of df I could see this was on /dev/hdb5:

sputnik3:~ % df
Filesystem         1024-blocks    Used Available Capacity Mounted on
/dev/hda3                18621    9759      7901      55%   /
/dev/hdb3               308852  258443     34458      88%   /usr
/dev/hdb5               466896  407062     35720      92%   /usr/local
sputnik3:~ % su
Password:
[michael@sputnik3 michael]# egrep -50 'ftp.+COL' /dev/hdb5 > /tmp/x

Now, I'm ultra careful when fooling around with disk partitions, so I paused to make sure I understood the command syntax BEFORE pressing return. In this case the email contained the word 'ftp' followed by some text followed by the word 'COL'. The message was about 20 lines long, so I used -50 to get all the lines around the phrase. In the past I've used -3000 to make sure I got all the lines of some source code. I directed the output from egrep to a different disk partition; this prevented it from overwriting the message I was looking for.

I then used strings to help me inspect the output:

strings /tmp/x | less

Sure enough, the email was in there.
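The egrep step above can be wrapped in a small function that refuses to clobber an existing output file. This is a hedged sketch, not Michael's procedure: the name recover_text is hypothetical, and it uses GNU grep's -a (treat binary data as text) and -C (context lines) options; -C 50 is equivalent to the -50 used above. It cannot check that the output file lives on a different partition from the device; that part is still up to you.

```shell
#!/bin/sh
# Search a raw device (or a disk image) for PATTERN, keeping CONTEXT lines
# on either side of each match, and save the result in OUTFILE.
# Usage: recover_text PATTERN DEVICE OUTFILE [CONTEXT]
recover_text() {
    pattern=$1 device=$2 outfile=$3 context=${4:-50}
    if [ -e "$outfile" ]; then
        echo "recover_text: $outfile already exists, not overwriting" >&2
        return 1
    fi
    # -a: treat binary data as text, -E: extended regexps (like egrep),
    # -C: lines of context before and after each match
    grep -a -E -C "$context" -e "$pattern" "$device" > "$outfile"
    [ -s "$outfile" ]      # fail if nothing was found
}
```

For example, as root: recover_text 'ftp.+COL' /dev/hdb5 /tmp/x 50, then strings /tmp/x | less as before.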

This method can't be relied on: all, or some, of the disk space may already have been reused.

This trick is probably only useful on single-user systems. On multi-user systems with high disk activity, the space you freed up may already have been reused. And most of us can't just rip the box out from under our users whenever we need to recover a file.

On my home system this trick has come in handy on about three occasions in the past few years, usually when I accidentally trash some of the day's work. If what I'm working on survives to a point where I feel I've made significant progress, it gets backed up onto floppy, so I haven't needed this trick very often.

Michael

Truncating /var/adm/messages

Hi!

On the topic "How to truncate /var/adm/messages", here's the way to do it with a shell script:

mv /var/adm/messages /var/adm/messages.prev

touch /var/adm/messages

mv /var/adm/syslog /var/adm/syslog.prev

touch /var/adm/syslog

kill -1 `ps x | grep syslog | grep -v grep | awk '{ print $1 }'`
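The mv, touch, kill -1 sequence works because syslogd keeps its log files open: mv only renames the file, so syslogd goes on writing into messages.prev until the HUP signal makes it reopen the log under its old name. A self-contained sketch (the function name rotation_demo is hypothetical) shows the effect, with a shell file descriptor standing in for syslogd:

```shell
#!/bin/sh
# Show that an already-open file descriptor follows the renamed file,
# which is why syslogd must be sent SIGHUP after the mv.
rotation_demo() {
    dir=$(mktemp -d) || return 1
    : > "$dir/messages"
    {
        mv "$dir/messages" "$dir/messages.prev"   # step 1: rename the log
        touch "$dir/messages"                     # step 2: new empty log
        echo "written after the mv" >&3           # no SIGHUP sent yet
    } 3>>"$dir/messages"       # fd 3 is opened before the commands run
    echo "messages.prev: $(cat "$dir/messages.prev")"
    echo "messages: $(cat "$dir/messages")"
    rm -rf "$dir"
}
```

Running rotation_demo prints the late line under messages.prev, while the fresh messages file stays empty until the logger reopens it.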

Happy new year!

2c Host Trick

To make the ISC/Vixie DHCPD run under Linux, you need a route to host 255.255.255.255. The standard "route" from the Slackware distribution does not accept "route add -host 255.255.255.255 dev eth0". But you can add a hostname with address 255.255.255.255 to your /etc/hosts file and use "route add hostname dev eth0" instead. It works.

Paul.

Use of TCSH's :e and :r Extensions

I'd like to congratulate Jesper Pedersen on his article on tcsh tricks. Tcsh has long been my favorite shell, but most of the features Jesper hit upon are also found in bash. Tcsh's most useful and unique features are its variable/history suffixes.

For example, if after applying a patch one wishes to undo things by moving the *.orig files back to their base names, the :r suffix, which strips the extension, comes in handy, e.g.:

foreach a ( *.orig )

mv $a $a:r

end

The same loop for ksh looks like:

for a in *.orig; do

mv $a `echo $a|sed -e 's,\.orig$,,g'`

done

Even better, one can use the :e suffix to extract the file extension. For example, let's say we want to do the same thing on compressed files:

foreach a ( *.orig.{gz,Z} )

mv $a $a:r:r.$a:e

end

The $a:r:r is the filename without .orig.gz and .orig.Z, we tack the .gz or .Z back on with .$a:e.
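POSIX shells (ksh included) can do the same without running sed once per file, using parameter expansion: ${a%.orig} strips a trailing .orig, ${a%.*} strips the last extension (like :r) and ${a##*.} keeps only the last extension (like :e). A sketch of both loops as functions with hypothetical names:

```shell
#!/bin/sh
# foo.c.orig -> foo.c
undo_orig() {
    for a in *.orig; do
        [ -e "$a" ] || continue          # no match: pattern left unexpanded
        mv "$a" "${a%.orig}"
    done
}

# foo.c.orig.gz -> foo.c.gz, bar.h.orig.Z -> bar.h.Z
undo_orig_compressed() {
    for a in *.orig.gz *.orig.Z; do
        [ -e "$a" ] || continue
        ext=${a##*.}                     # gz or Z       (like $a:e)
        base=${a%.*}                     # drop .gz/.Z   (like $a:r)
        mv "$a" "${base%.orig}.$ext"
    done
}
```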

Bill

Various notes on 2c tips, Gazette 12

I noticed a few overly difficult or unnecessary procedures recommended in the 2c Tips section of Issue 12. Since there is more than one, I'm sending them all to you:

#!/bin/sh
# lowerit
# convert all file names in the current directory to lower case
# only operates on plain files--does not change the name of directories
# will ask for verification before overwriting an existing file
for x in `ls`
do
    if [ ! -f $x ]; then
        continue
    fi
    lc=`echo $x | tr '[A-Z]' '[a-z]'`
    if [ $lc != $x ]; then
        mv -i $x $lc
    fi
done

Wow. That's a long script. I wouldn't write a script to do that; instead, I would use this command:

for i in * ; do [ -f $i ] && mv -i $i `echo $i | tr '[A-Z]' '[a-z]'`; done;

on the command line.
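Both versions leave the file names unquoted, so a name containing spaces is split into several words, and the one-liner also runs mv -i on names that are already lower case, which makes mv complain that source and destination are the same file. A hedged variant that avoids both problems, keeping the lowerit name from the script above:

```shell
#!/bin/sh
# Lower-case the names of plain files in the current directory.
# Quoting copes with spaces; names already in lower case are skipped.
lowerit() {
    for f in *; do
        [ -f "$f" ] || continue                      # plain files only
        lc=$(printf '%s\n' "$f" | tr 'A-Z' 'a-z')
        [ "$lc" = "$f" ] || mv -i -- "$f" "$lc"      # ask before overwriting
    done
}
```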

The contributor says he wrote the script how he did for understandability (see below).

On the next tip, this one about adding and removing users, Geoff is doing fine until that last step. Reboot? Boy, I hope he doesn't reboot every time he removes a user. All you have to do is the first two steps. What sort of processes would that user have going, anyway? An irc bot? Kill them with a simple

kill -9 `ps -aux | grep ^<username> | tr -s " " | cut -d " " -f2`

For example, if the username is foo:

kill -9 `ps -aux | grep ^foo | tr -s " " | cut -d " " -f2`

With that taken care of, let us move on to the forgotten root password.

The solution given in the Gazette is the most universal one, but not the easiest. With both LILO and loadlin, you can give the boot parameter "single" to boot directly into the default shell with no login or password prompt. From there, you can change or remove any passwords before typing "init 3" to start multiuser mode.

This way: number of reboots, 1.
The other way: number of reboots, 2.

That's just about it. Thanks for the great magazine and continuing contribution to the Linux community. The Gazette is a needed element for many linux users on the 'net.

Justin Dossey

Date: Wed, 4 Dec 1996 08:46:24 -0800 (PST)

Subject: Re: lowerit shell script in the LG

From: Phil Hughes,

The amazing Justin Dossey wrote:

> #!/bin/sh
> for i in * ; do [ -f $i ] && mv -i $i `echo $i | tr '[A-Z]' '[a-z]'`;
> done;
>
> may be more cryptic than ...
>
> but it is a lot nicer to the system (speed & memory-wise) too.

Can't argue. If I had written it for what I considered a high-usage situation, I would have done it more like you suggested. The intent, however, was to make something that could be easily understood.

Phil Hughes

Viewing HOWTO Documents

From one newbie to another, here is a short script that makes it easier to find and view HOWTO documents. My HOWTOs are in /usr/doc/faq/howto/ and are gzipped. The file names are XXX-HOWTO.gz, XXX being the subject. I created the following script, called "howto", in the /usr/local/sbin directory:

#!/bin/sh

if [ "$1" = "" ]; then

ls /usr/doc/faq/howto | less

else

gunzip -c /usr/doc/faq/howto/$1-HOWTO.gz | less

fi

When called without an argument, it lists the available HOWTOs. When given the first part of a file name (before the hyphen) as an argument, it unzips the document (keeping the original intact) and displays it.

For instance, to view the Serial-HOWTO.gz document, enter:

$ howto Serial
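A slightly more defensive variant, written as a shell function: the directory and pager can be overridden through the hypothetical HOWTO_DIR variable and the conventional PAGER variable, and asking for a document that doesn't exist prints an error instead of piping a gunzip complaint into less. The defaults match Didier's setup.

```shell
#!/bin/sh
# howto          -> list the available HOWTOs
# howto Serial   -> page through Serial-HOWTO.gz
howto() {
    dir=${HOWTO_DIR:-/usr/doc/faq/howto}
    if [ -z "$1" ]; then
        ls "$dir" | ${PAGER:-less}
    elif [ -f "$dir/$1-HOWTO.gz" ]; then
        gunzip -c "$dir/$1-HOWTO.gz" | ${PAGER:-less}   # original stays intact
    else
        echo "howto: no $1-HOWTO.gz in $dir" >&2
        return 1
    fi
}
```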

Keep up the good work.

Didier

Xaw-XPM .Xresources troubleshooting tip.

I'm sure a lot of you out there have installed the new Xaw-XPM and like it a lot. But I've had some trouble with it: if I don't install the supplied .Xresources file, xcalc and some other apps (ghostview is one) segfault whenever you try to use them.

I found out that the entry responsible is this one:

*setPixmap: /path/to/an/xpm-file

If this entry isn't in your .Xresources, xcalc and ghostview won't work. Hope some of you out there find this useful.

And while you're working on ghostview, remember to upgrade ghostscript to the latest version to get the new and improved fonts; they certainly look better on paper than the old ones.

Ciao!

Robin

PS: Great mag, now I'm just waiting for the arrival of my copy of LJ

xterm title bar

Hi Guys,

I noticed the "alias for cd xterm title bar tip" from Michael Hammel in the Linux Gazette and wanted to offer a possible improvement for your .bashrc file. A similar solution might work for ksh, but you may need to substitute $HOSTNAME for \h, etc:

if [ "x$TERM" = "xxterm" ]; then
    PS1='\h \w-> \[\033]0;\h \w\007\]'
else
    PS1='\h \w-> '
fi

PS1 is an environment variable used in bash and ksh for storing the normal prompt. \h and \w are shorthand for hostname and working directory in bash. The \[ and \] strings enclose non-printing characters in the prompt so that command-line editing will work correctly. The \033]0; and \007 strings enclose a string which xterm will use for the title bar and icon name. Sorry, I don't remember the codes for setting these independently. (ksh users note: \033 is octal for ESC and \007 is octal for CTRL-G.) This example just changes the title bar and icon names to match the prompt before the cursor.

Any program which changes the xterm title will cause inconsistencies if you try an alias for cd instead of PS1. Consider rlogin to another machine which changes the xterm title. When you quit rlogin, there is nothing to force the xterm title back to the correct value when using the cd alias (at least not until the next cd). This is not a problem when using PS1.

You could still alias vi to change the xterm title bar, but it may not always be correct. If you use ":e filename" to edit a new file, vi will not update the xterm title. I would suggest upgrading to vim (VI iMproved). It has many nice new features in addition to displaying the current filename on the xterm title.

Hopefully this tip is a good starting point for some more experimenting. Good luck!

Lee Bradshaw, [email protected]


SECURITY: (linux-alert) LSF Update#14: Vulnerability of the lpr program.

Date: Sat, 26 Nov 1996

Linux Security FAQ Update -- lpr Vulnerability

A vulnerability exists in the lpr program version 0.06. If installed suid to root, the lpr program allows local users to gain access to a super-user account.

Local users can gain root privileges. Exploits for this vulnerability have been made available.

The lpr utility from lpr 0.06 suffers from a buffer overrun. lpr must be installed suid to root to allow print spooling.
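The advisory hinges on lpr being installed suid to root. The shell's -u test is true when a file's set-user-ID bit is set, so checking a given program is a one-liner; a minimal sketch with a hypothetical function name:

```shell
#!/bin/sh
# Report, for each file named, whether its set-user-ID bit is set.
report_suid() {
    for f in "$@"; do
        if [ -u "$f" ]; then
            echo "$f: setuid"
        else
            echo "$f: not setuid"
        fi
    done
}
```

For example, report_suid /usr/bin/lpr (the path is an assumption; check where your distribution installs lpr). To list every setuid-root file on the system, find / -type f -user root -perm -4000 -print works with any POSIX find.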

This LSF Update is based on the information originally posted to linux-security mailing list.

For additional information and distribution corrections:

Linux Security WWW: http://bach.cis.temple.edu/linux/linux-security
linux-security & linux-alert mailing list archives: ftp://linux.nrao.edu/pub/linux/security/list-archive

LINUXEXPO '97 TECHNICAL CONFERENCE

Durham, N.C. December 31, 1996 -- It was announced today that the third annual LinuxExpo Technical Conference will be held at the N.C. Biotechnology Center in Research Triangle Park, NC on April 4-5, 1997. The conference will consist of fourteen elite developers who will give technical talks on various topics all related to the development of Linux. This year the event is expected to draw 1,000 attendees who will be coming not only for the conference, but to visit the estimated 30 Linux companies and organizations that will be selling their own Linux products and giving demonstrations. The event will also include a Linux User's Group meeting, an install fair, and a job fair for all of the computer programming hopefuls. LinuxExpo '97 will be complete with refreshments and entertainment from the Class Action Jugglers.

For additional information: Anna Selvia,

LinuxExpo '97 Technical Conference,

3201 Yorktown Ave. Suite 113

Durham, NC 27713

WWW: Linux Archive Search Site

Date: Thu, 21 Nov 1996

Tired of searching sunsite or tsx-11 for some program you heard about on irc? Well, the Linux Archive Search (LAS) is here. It is a search engine that searches an updated database of the files contained on sunsite.unc.edu, tsx-11.mit.edu, ftp.funet.fi, and ftp.redhat.com. You can now quickly find out where the files are hiding! The LAS lives at http://torgo.ml.org/las (it may take a second to respond; it's on a slow link). So give it a whirl; who knows, you may use it a lot!

For additional information:

Jeff Trout,

The Internet Access Company, Inc.

Netherlands - Linux Book On-line

Date: Thu, 05 Dec 1996

The very first book to appear in Holland on the Linux operating system has gone on-line and can be found at:

http://www.cv.ruu.nl/~eric/linux/boek/

And of course from every (paper) copy sold, one dollar is sent to the Free Software Foundation. For additional information:

Hans Paijmans, KUB-University, Tilburg, the Netherlands

http://purl.oclc.org/NET/PAAI/

New O'Reilly Linux WWW Site

Check out the new O'Reilly & Associates, Inc. Linux web site at http://www.ora.com/info/linux/

It has:

PCTV Reminder

The "Unix III - Linux" show will air on the Jones Computer Network (JCN) and the Mind Extension University Channel (MEU) the week of January 20, 1997.

The scheduled times are:

It is best to call your local cable operator to find the appropriate channel.

Tom Schauer, Production Assoc. PCTV

daVinci V2.0.2 - Graph Visualization System

November 20, 1996 (Bremen, Germany) - The University of Bremen announces daVinci V2.0.2, the new edition of the noted visualization tool for generating high-quality drawings of directed graphs, with more than 2000 installations worldwide. Users in the commercial and educational domains have already integrated daVinci as the user interface for their application programs, to visualize hierarchies, dependency structures, networks, configuration diagrams, dataflows, etc. daVinci combines hierarchical graph layout with powerful interactive capabilities and an API for remote access from a connected application. In daVinci V2.0.2, a few extensions that improve the performance and usability of the previous V2.0.1 release have been made based on user feedback.

daVinci V2.0.2 is licensed free of charge for non-profit use and is immediately available for Linux. The daVinci system can be downloaded with this form:

http://www.informatik.uni-bremen.de/~davinci/daVinci_get_daVinci.html

For additional information:

Michael Froehlich, daVinci Graph Visualization Project

Computer Science Department, University of Bremen, Germany

http://www.informatik.uni-bremen.de/~davinci

WWW: getwww 1.3 - download an entire HTML source tree

Date: Wed, 04 Dec 1996

Getwww is designed to download an entire HTML source tree from a remote URL, recursively changing image and hypertext links.

From the LSM:

Primary-site: ftp.kaist.ac.kr /incoming/www 25kB getwww++-1.3.tar.gz

Alternate-site: sunsite.unc.edu /pub/Linux/system/Network/info-systems/www 25kB getwww++-1.3.tar.gz

Platform: Linux-2.0.24

Copying-policy: GPL

For additional information:

In-sung Kim, Network Tool Group,

Motif Interface Builder on Unifix 2.0

Date: Sun, 01 Dec 1996

Unifix Software GmbH is proud to announce View Designer/X, a new Motif interface builder available for Linux. A demo version of VDX is included on Unifix Linux 2.0.

With object oriented and interactive application development tools, the software developer is able to design applications with better quality and in shorter times.

For more information and to download the latest demo version, see:

http://www.unifix.de/products/vdx

For additional information: Unifix Software GmbH,

View Designer/X

View Designer/X, a new Motif interface builder for Linux, has been released. It enables application developers to design user interfaces with Motif 2.0 widgets and to generate C and C++ code. VDX provides an interactive WYSIWYG view and a Widget Tree Browser, which can be used to modify the structure of the user interface. All resources are adjustable with the Widget Resource Editor, and thanks to template files, the code generation of VDX is more flexible than that of other interface builders.

Bredex GmbH, Germany is distributing the View Designer/X via Web service. Please see following web page for more information and downloading the free demo version:

http://www.bredex.de/EN/vdx/

Dirk Laessig,

X-Files 1.21 - graphical file manager in tcl/tk

X-Files is a graphical file management program for the Unix/X-Window environment, developed on Linux.

For more information and packages see:

http://pinhead.tky.hut.fi/~xf_adm/
http://www.hut.fi/~mkivinie/xfindex.html
ftp://java.inf.tu-dresden.de:/pub/unix/x-files/

For questions:

For additional information:

Mikko Kiviniemi,

Helsinki University of Technology

The Answer Guy


Combining modems for more speed

Thanks for reading this post. I have heard that it's possible to set up Linux to combine two analog modems into one so as to double the speed of a connection. Is this true, how does it work, and where can I get more info, guidance, how-tos, etc.? I have Slackware 96 from Infomagic. Yours truly, Keith Bell

I've heard of this as well. I've never used it, but let's look it up... Ahh, that would be the EQL option in the kernel. Here's an excerpt from the 'make menuconfig' help pages (in the 2.0.27 kernel sources):

Linux Kernel v2.0.27 Configuration

EQL (serial line load balancing) support:

If you have two serial connections to some other computer (this usually requires two modems and two telephone lines) and you use SLIP (= the protocol for sending internet traffic over telephone lines) or PPP (= a better SLIP) on them, you can make them behave like one double speed connection using this driver. Naturally, this has to be supported at the other end as well, either with a similar EQL Linux driver or with a Livingston Portmaster 2e. Say Yes if you want this and read drivers/net/README.eql.

So that file is:

EQL Driver: Serial IP Load Balancing HOWTO

Simon "Guru Aleph-Null" Janes, [email protected]

v1.1, February 27, 1995

(After reading this you'll know about as much on this subject as I do; after using any of this you'll know *much* more.)

Dialup Problem

I don't know if you can, or are even willing to, help me with a problem I have. I'm running Red Hat 4.0 on a P120 with 24 megs of RAM, kernel 2.0.18.

I'm willing.

Anyway... I have this PPP connection problem and no one I know knows what the problem is. I've looked through the FAQs and HOWTOs, tried #linux on irc, etc. No one knows what my problem is, so now I'm desperate.

When I try to dial my ISP, I get logged in fine, but it's REALLY slow. I'm using the 'network module' PPP thing in the control panel under X: mru=1500, asyncmap=0, speed=115000. I couldn't find a place to set the mtu, and when I tried putting it in /etc/ppp/options, the script this program uses wouldn't work.

Usually I see these symptoms when there is an IRQ conflict. Some of the data gets through, with lots of errors and lots of retransmits, but with any activity on the rest of the machine, or even just sitting there, you get really bad throughput and very unreliable connections.

I noticed that after I input something and then move the cursor off of the window, it runs at a much faster speed, and it gets annoying moving the cursor back and forth. I tried dip, minicom, and this 'network module' thing... all are slow.

I would do all of your troubleshooting from outside of X. Just use the virtual consoles until everything else works right (fewer layers of things to conflict with one another).

If you can shed any light on this, it would be much appreciated. Thanks.

Take a really thorough look at the hardware settings for everything in the machine. Make a list of all the cards and interfaces, go through the docs for each one, and map out which ones are using which IRQs and I/O addresses.

I ended up going through several combinations of video cards and I/O cards before I got my main system fully integrated. Luckily, newer systems are getting better. (Mine is a 386DX33 with 32Mb of RAM and a 2Mb video card, two IDEs, two floppy drives, two SCSI hard disks, an internal CD-ROM, an external magneto-optical drive, a serial mouse, a modem (used for dial-in, dial-out, uucp, and ppp), a null modem (I hook a laptop to it as a terminal for my wife), and an ethernet card.)

Another thing to check is the cabling between your serial connector and your modem. If you're configured for XON/XOFF, you're in trouble. If you're configured for hardware flow control and you don't have the appropriate wires in your cable, then you're in worse trouble.
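For the serial ports at least, setserial -g prints one line per port including its IRQ, so a short filter can flag IRQs claimed by more than one port (the classic case is COM1 and COM3 both on IRQ 4). A hedged sketch: the function name is hypothetical, it assumes the line format shown in the comment, and it says nothing about sound, network or other cards, so the card docs are still needed.

```shell
#!/bin/sh
# Read "setserial -g" style lines on stdin, e.g.
#   /dev/ttyS0, UART: 16550A, Port: 0x03f8, IRQ: 4
# and print each IRQ claimed by more than one port, with those ports.
shared_irqs() {
    awk -F', ' '
        {
            for (i = 2; i <= NF; i++)
                if ($i ~ /^IRQ: /) {
                    irq = substr($i, 6)        # text after "IRQ: "
                    ports[irq] = ports[irq] " " $1
                    count[irq]++
                }
        }
        END {
            for (irq in count)
                if (count[irq] > 1)
                    print "IRQ " irq ":" ports[irq]
        }'
}
```

For example: setserial -g /dev/ttyS[0-3] | shared_irqs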

Troubleshooting of this sort really is best done over voice or in person. There are too many steps to the troubleshooting and testing to do effectively via e-mail.

File Referencing


> "A month of sundays ago L.U.S.T List wrote:"
>> 1. I do not know why on Linux some program could not run correctly.
>> for example
>> #include <stdio.h>
>> main()
>> {
>> printf("test\n");
>> fflush(stdout);
>> }
>> They will not echo what I print.
>
> Oh yes it will. I bet you named the executable "test" ... :-)
> (this is a UNIX faq).
>

I really suggest that people learn the tao of "./".

This is easy: any time you mean to refer to a file in the current directory, precede it with "./". This forces all common Unix shells to refer to the file in THIS directory. It solves all the problems with files that start with dashes, and it allows you to remove :.: from your path (which *all* sysadmins should do right NOW).

That is the tao of "./" -- the two keystrokes that can save you many hours of grief and maybe save your whole filesystem too.
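The "test" trap is easy to reproduce. This hedged sketch (the function name tao_demo is hypothetical) builds an executable called test in a scratch directory and puts . at the front of PATH. Typing test still runs the shell's built-in test command, which prints nothing and exits with status 1 when given no arguments, while ./test runs the file:

```shell
#!/bin/sh
tao_demo() {
    dir=$(mktemp -d) || return 1
    printf '#!/bin/sh\necho "hello from ./test"\n' > "$dir/test"
    chmod +x "$dir/test"
    (
        cd "$dir" || exit 1
        PATH=".:$PATH"                  # even with . first in PATH...
        if test; then r=0; else r=1; fi
        echo "plain test: builtin, exit status $r"   # ...the builtin wins
        ./test                          # the tao of ./: this file, no doubt
    )
    rm -rf "$dir"
}
```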

WWW Server?

Where can I get (or buy) a WWW server for LINUX?

Please, help me.

Web servers are included with most distributions of Linux. The most popular one right now is called Apache. You can look on your CDs (if you bought a set) or you can point a web client (browser) at http://www.apache.org for more information and for an opportunity to download a copy.

Thank you

There are several others available; however, Apache is the best known, so it will be the best one for you to start with. It is also widely considered to offer the best performance and feature set (of course that is a matter of considerable controversy among "connoisseurs", just like the ongoing debate about 'vi' vs. 'emacs').

You're welcome.

Comdex/Fall '96 has come and gone once again. COMDEX is the second largest computer trade show in the world, offering multiple convention floors with 2000 exhibitors plying their new computer products to approximately 220,000 attendees in Las Vegas, Nevada in November of 1996.

This year's show was a great success for Linux in general. The first ever ``Linux Pavilion'' was organized at the Sands Convention Center, and Linux vendors from all over the country participated. The Linux International (LI) booth was in the center, giving away literature and information for all the Linux vendors. Linux International is a not-for-profit organization formed to promote Linux to computer users and organizations. Staffed by volunteers including Jon ``Maddog'' Hall and Steve Harrington, the LI booth was a great place for people to go to have their questions answered. Needless to say, the Linux International booth was never empty. Surrounding LI were Red Hat Software and WorkGroup Solutions.

Other vendors in the pavilion included Craftwork Solutions, DCG Computers, Digital Equipment Corporation, Frank Kasper & Associates, Infomagic, Linux Hardware Solutions, SSC (publishers of Linux Journal), and Yggdrasil Computing. Caldera, Pacific HiTech, and Walnut Creek all exhibited at Comdex, but not as part of the Linux Pavilion.

SSC gave out Linux Journals at the show and actually ran out of magazines early Thursday morning. Luckily, we were able to have some more shipped to us, but we still ran out again on Friday, the last day of the show. Comdex ran five full days and the Sands pavilion was open from 8:30 to 6 most show days which meant long days for all the exhibitors there.

Show management put up signs, directing attendees to the Linux Pavilion and to "more Linux vendors". The show was so large that it was easy to get lost.

At the LI booth and at SSC's booth, the response to Linux was overwhelmingly positive. Questions ranged from ``I've heard a lot about Linux, but I'm not sure what it is, can you enlighten me?'' to ``I haven't checked for a few days---what is the latest development kernel?''

For next year's Comdex in November '97, Linux vendors, coordinated by Linux International, are already working to put together a Linux pavilion at least three times as big as the one this year.

Vendors interested in being part of the Linux pavilion in November '97 may contact Softbank, which puts on Comdex, at [email protected], or, to do this through Linux International, contact ``Jon Maddog'' Hall via e-mail at .

Lately, a lot of Web pages have begun selling ad space "banners." Wasting valuable bandwidth, these banners often hawk products I don't care to hear about. I'd rather not see them, and not have to download their contents.

There are two ways of filtering out these banners. The first is to deny all pictures that are wider than tall and generally towards the top or bottom of the page. The second is to simply block all the accesses to and from the web sites that are the notorious advertisers. This second approach is the one I'm going to take.

When searching around the web, you will see that many of the banners come from the site ad.linkexchange.com. This is the site we will want to ban.

Our first order of business is to set up our firewall. We won't be using it for security, although this doesn't prohibit also using the firewall for security. First, we recompile the kernel, saying "Yes" to CONFIG_FIREWALL. This allows us to use the built in kernel firewalling.

Then, we need to get the IPFWADM utility. You can find it at: http://www.xos.nl/linux/ipfwadm . Untar, compile and install this utility.

Since we are doing no other firewalling, this should be sufficient.

Now we come to the meat of the maneuver: we need to block access between our machine and ad.linkexchange.com. First, block outgoing access to the site, so that our requests don't even make it there:

ipfwadm -O -a reject -P tcp -S 0.0.0.0/0 -D ad.linkexchange.com 80

This tells ipfwadm to append a rule to the Output filter. The rule says to reject all packets of protocol TCP from anywhere to ad.linkexchange.com on port 80. If you don't get this, read Chris Kostick's excellent article on IP firewalling at http://www.ssc.com/lj/issue24/1212.html.

The next rule keeps anything from ad.linkexchange.com from coming in. Technically, this shouldn't be necessary: if we haven't requested it, it shouldn't come. But better safe than sorry:

ipfwadm -I -a reject -P tcp -S ad.linkexchange.com 80 -D 0.0.0.0/0

Now, all access to and from ad.linkexchange.com is rejected.

Note: this will only work when web browsing from that machine. To filter for a whole network, do the same but with -F instead of -O and -I.

To test, visit the site http://www.reply.net. They have a banner on top which should either not appear or appear as a broken icon. Either way, no network bandwidth will be wasted downloading the picture, and all requests will be rejected immediately.

Not all banners are so easily dealt with. Many companies, like Netscape, host their own banners. You don't want to block access to Netscape, so this approach won't work. But, you will find a number of different advertisers set up like linkexchange. As you find more, add them to the list of rejected sites.
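Once the list of advertisers grows, typing two ipfwadm commands per host gets tedious. A minimal sketch (ban_rules is a hypothetical name) that prints the same two rules used above for every host given on the command line, so you can review them before piping the output to sh as root:

```shell
#!/bin/sh
# Print the outgoing and incoming reject rules for each advertiser host.
# Usage: ban_rules ad.linkexchange.com other.ad.host | sh
ban_rules() {
    for host in "$@"; do
        echo "ipfwadm -O -a reject -P tcp -S 0.0.0.0/0 -D $host 80"
        echo "ipfwadm -I -a reject -P tcp -S $host 80 -D 0.0.0.0/0"
    done
}
```

Keeping the host list in a file and running ban_rules $(cat adhosts) | sh at boot is one way to maintain it; the file name adhosts is only an example.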

Good luck, and happy filtering!

Although more computers are becoming network connected every day, there are many instances where you need to transfer files by the ol' sneaker-net method. Here are some hints, tips and short-cuts for doing this, aimed at users who are new to Linux or Unix. (There may even be some information useful to old-timers...)

What do I use floppies for? As a consultant, I frequently do contract work for companies which, because of security policies, do not connect to the 'Net. So, FTP'ing files which I need from my network at home is out of the question.

Take my current contract as an example: I am using Linux as an X-Windows terminal for developing software on the client's DEC Alphas running OSF. (I blew away the Windoze '95 which they had loaded on the computer they gave me.) I often need to bring files with me from my office at home, or back up my work to take home for work in the evening. (Consultants sometimes work flex-hours, which generally means more hours...)

Why use cpio(1) or tar(1) when copying files? Because they provide a portable way to transfer files from a group of subdirectories with the file dates left intact. The cp(1) command may or may not do the job, depending on the operating systems and versions you are dealing with. In addition, specifying certain options will copy only files which are new or have changed.

The first thing you need to do to make the floppies useful is to format them, and usually lay down a filesystem. There are also some preliminary steps which make using floppy disks much easier, which is the point of this article.

I find it useful to make my username part of the floppy group in the /etc/group file. This saves you from needing to su to root much of the time. (You will need to log out and log back in again for this to take effect.) I also use the same username on both the client's machine and my home office machine, which saves time. The line should now look like this:

floppy::11:root,username
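Because the new group only applies to fresh logins, it is worth checking before you try to mount anything. A small sketch, assuming your id(1) supports the -nG options (GNU and most modern systems do); in_group is a hypothetical helper name:

```shell
#!/bin/bash
# in_group: succeed if the current login session belongs to the named group.
# id -nG lists the group names of the current process, so a freshly edited
# /etc/group only shows up here after you log out and back in.
in_group() {
  local g
  for g in $(id -nG); do
    [ "$g" = "$1" ] && return 0
  done
  return 1
}
```

For example, in_group floppy && echo "ready to mount floppies" prints the message only once the new membership is active.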

The following setup is assumed for the examples I present here. The root user must have the system directories in the PATH environment variable. If they are not already there, su to root and add them to the .profile file in /root:

su -          # this should ask for the root password
cat >> .profile
PATH=/sbin:/usr/sbin:$PATH
<ctrl>-D

You can also use your favorite editor to do this... I prefer vim(1) and have it symlinked to /usr/bin/vi instead of elvis(1), which is the default on many distributions. VIM has online help and multiple window support, which is very useful! (A symlink, or symbolic link, is created with the -s option to ln(1).)
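Running the cat >> .profile step twice leaves a duplicate line in the file. If you prefer a repeatable version, here is a sketch that appends the line only when an identical one is not already present. The function name add_sbin_path is hypothetical, and it defaults to /root/.profile as described above.

```shell
#!/bin/bash
# add_sbin_path: append the PATH line to a profile file unless an identical
# line is already there, so running it twice does not duplicate the entry.
# Defaults to /root/.profile; pass another file name to try it elsewhere.
add_sbin_path() {
  local profile="${1:-/root/.profile}"
  local line='PATH=/sbin:/usr/sbin:$PATH'   # single quotes keep $PATH literal
  grep -qxF "$line" "$profile" 2>/dev/null || printf '%s\n' "$line" >> "$profile"
}
```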

Next, add the following line to the /etc/fstab file. (I have all the user-mountable partitions in one place under /mnt. You may want a different convention, but this is useful. I also have /mnt/cdrom symlinked to /cd for convenience.)

/dev/fd0 /mnt/fd0 ext2 noauto,user 1 2

Still logged in as root, make the following symlink: (If you have more than one floppy drive, then add the floppy number as well.)

ln -s /mnt/fd0 /fd

-or-

ln -s /mnt/fd0 /fd0

These two things make mounting and unmounting floppies a cinch. The mount(8) command follows the symlink and accesses the /etc/fstab file for any missing parameters, making it a useful shortcut.

To make the floppy usable as an ext2fs Linux filesystem, do the following as root. (Replace username with the username you regularly use on the system. You, of course, should not use the root user for normal work!)

export PATH=/sbin:/usr/sbin:$PATH   # not needed if you set PATH in .profile
fdformat /dev/fd0
mke2fs /dev/fd0
mount /dev/fd0 /mnt/fd0
chown username /mnt/fd0

You may need to specify the geometry of the floppy you are using. If it is the standard 3.5 inch double-sided, high-density disk, you may need to substitute /dev/fd0H1440 for the device name (in 1.2.x kernels). If you have a newer 2.xx kernel and superformat(1), you may want to use it instead of fdformat. See the notes in the Miscellaneous section below, or look at the man page. You may now exit out of su(1) by typing:

exit

From this point on, you may use the mount(8) and umount(8) commands logged in as your normal username by typing the following:

mount /fd
umount /fd

For backing up my work to take home, or to take back to the office, I use cpio(1) instead of tar(1), as it is far more flexible and better at handling errors. To use this on a regular basis, first copy all of your files by running the command below without the -mtime -1 switch. After that, you can make daily backups from the base directory of your work using the following commands:

cd directory
mount /fd
find . -mtime -1 -print | cpio -pmdv /fd
sync
umount /fd

When the floppy stops spinning and the light goes out, your work is backed up. The -mtime option to find(1) selects files which have been modified (or created) within the last day (the -1 parameter). The options to cpio(1) select copy-pass mode (-p), retain the previous file modification times (-m), create directories where needed (-d), and list files as they are copied (-v). Without the -u (unconditional) flag, cpio will not overwrite files which are the same age or newer.
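The same find and cpio pipeline can be wrapped in a function so it is easy to repeat each evening. This is a sketch under a few assumptions: backup_recent is a hypothetical name, the destination is given as an absolute path (the function changes into the source directory first), and the -v flag is dropped to keep the output quiet.

```shell
#!/bin/bash
# backup_recent SRC DEST: copy files under SRC modified within the last day
# into DEST using cpio copy-pass mode.
#   -p  copy-pass mode
#   -m  keep the original modification times
#   -d  create directories as needed
# DEST must be an absolute path, because the subshell cd's into SRC first.
backup_recent() {
  local src="$1" dest="$2"
  ( cd "$src" && find . -mtime -1 -print | cpio -pmd "$dest" )
}
```

After mount /fd, running backup_recent ~/work /fd (where ~/work stands in for your own work directory), then sync and umount /fd, matches the steps above.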

This operation may also be done over a network, either from NFS mounted filesystems, or by using a remote shell as the next example shows.

mount /fd
cd /fd
rsh remotesystem '(cd directory; find . -mtime -1 -print | cpio -oc)' |
cpio -imdcv
sync
cd
umount /fd

This example uses cpio(1) to send files from the remote system and update the files on the floppy disk mounted on the local system. Note the pipe (vertical bar) symbol at the end of the remote shell line. The arguments enclosed in quotes are executed remotely, with everything enclosed in parentheses running in a subshell. The archive is sent as a stream across the network and used as input to the cpio(1) command executing on the local machine. (If both systems are using a recent version of GNU cpio, specify -Hcrc instead of c for the archive type. This does error checking, and won't truncate inode numbers.)

To restore the newer files to the other computer, change directories to the base directory of your work, and type the following:

cd directory
mount -r /fd
cd /fd
find . -mtime -1 -print | cpio -pmdv ~-
cd -
umount /fd

If you needed to restore the files completely, you would of course leave out the -mtime parameter to find(1).

The previous examples assume that you are using the bash(1) shell, and use a few quick tricks for specifying directories. The "~-" parameter to cpio is expanded by the shell to the previous working directory; in other words, where you were before cd'ing to the /fd directory. (Try typing echo ~- after you have changed directories at least once, to see the effect.) The cd ~- or just cd - command is another shortcut to switch back to the previous directory. These shortcuts often save a lot of time and typing, as you frequently need to work with two directories, alternating between them or referencing files from where you were.
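To see the ~- and cd - shortcuts in action without touching your floppy, the following sketch changes into two directories inside a subshell and shows that ~- names the first one. It needs bash (~- is a bash tilde expansion), and show_prev_dir is a hypothetical name.

```shell
#!/bin/bash
# show_prev_dir DIR1 DIR2: cd into DIR1, then DIR2, and print what ~- expands
# to (DIR1, the previous directory) and where cd - takes you (DIR1 again).
# Runs in a subshell so the caller's working directory is left alone.
show_prev_dir() {
  (
    cd "$1" || exit 1
    cd "$2" || exit 1
    echo ~-            # bash expands ~- to $OLDPWD, i.e. DIR1
    cd - >/dev/null    # cd - prints the directory itself, so silence it
    pwd                # back in DIR1
  )
}
```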

If the directory which you are transferring or backing up is larger than a single floppy disk, you may need to resort to using a compressed archive. I still prefer using cpio(1) for this, although tar(1) will work too. Change directories to your work directory, and issue the following commands:

cd directory
mount /fd
find . -mtime -1 -print | cpio -ovHcrc | gzip -v > /fd/backup.cpio.gz
sync
umount /fd

The -Hcrc option to cpio(1) selects a newer archive type which older versions of cpio might not understand. It allows error checking, and inode numbers with more than 16 bits.

Of course, your original archive should be created using find(1) without the -mtime -1 options.
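If you use the compressed archive regularly, a pair of functions keeps the packing and unpacking steps symmetric. A sketch, assuming GNU cpio (for -H crc) and gzip; pack_recent and unpack_archive are hypothetical names. The unpack side decompresses with gzip -dc and lets cpio -imd recreate directories and modification times, the same -i, -m and -d options used earlier.

```shell
#!/bin/bash
# pack_recent SRC ARCHIVE: write a gzip-compressed cpio archive (crc format)
# of the files under SRC modified within the last day.
# Drop -mtime -1 when making the first, complete archive.
pack_recent() {
  local src="$1" archive="$2"
  ( cd "$src" && find . -mtime -1 -print | cpio -o -H crc ) | gzip > "$archive"
}

# unpack_archive ARCHIVE DEST: restore such an archive into DEST.
#   -i  extract, -m  keep modification times, -d  create directories
unpack_archive() {
  local archive="$1" dest="$2"
  gzip -dc "$archive" | ( cd "$dest" && cpio -imd )
}
```

For example, pack_recent ~/work /fd/backup.cpio.gz on one machine and unpack_archive /fd/backup.cpio.gz ~/work on the other (both paths are placeholders).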

Sometimes it is necessary to back up or transfer files or directories which are larger than a floppy disk, even when compressed. For this, we finally need to resort to using tar.

Prepare as many floppies as you think you'll need by using the fdformat(8) command. You do not need to make filesystems on them however, as you will be using them in raw mode.
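To estimate how many floppies to prepare, divide the size of the archive by the capacity of one disk. A 1.44 MB disk holds 2880 sectors of 512 bytes, or 1,474,560 bytes, which is 1440 units of 1024 bytes (the figure given to tar -L below). A small sketch; floppies_needed is a hypothetical name. Because tar adds its own headers, measure the tar output rather than the raw file sizes, and treat the result as a minimum.

```shell
#!/bin/bash
# floppies_needed BYTES: number of 1.44 MB floppies needed to hold BYTES,
# rounded up. 1440 * 1024 = 1474560 bytes = 2880 sectors * 512 bytes.
floppies_needed() {
  local bytes="$1"
  local per_disk=$((1440 * 1024))
  echo $(( (bytes + per_disk - 1) / per_disk ))   # integer ceiling division
}
```

For example, from the base directory of the files you want to save: floppies_needed "$(tar -cf - . | wc -c)"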

If you are backing up a large set of subdirectories, switch to the base subdirectory and issue the following command:

cd directory
tar -cv -L 1440 -M -f /dev/fd0 .

This command will prompt you when to change floppies. Wait for the floppy drive light to go out, of course!

If you need to back up or transfer multiple files or directories, or just a single large file, specify them instead of the period at the end of the tar command above.

Unpacking the archive is similar to the above command:

cd directory
tar -xv -L 1440 -M -f /dev/fd0

Finally, here are some assorted tips for using floppies.

The mtools(1) package is great for dealing with MS-DOG floppies, as we sometimes must. You can also mount(8) them as a Linux filesystem with either the msdos or umsdos filesystem type. Add another entry to /etc/fstab below the one you made before, so that the two lines look like this:

/dev/fd0 /mnt/fd0 ext2 noauto,user 1 2
/dev/fd0 /mnt/dos msdos noauto,user 1 2

You can now mount an MS-DOS floppy using the command:

mount /mnt/dos

You can also symlink this to another name as a further shortcut:

ln -s /mnt/dos /dos
mount /dos

For users who are more familiar with MS-DOS, the danger of using mount(8) rather than mtools(1) is that you need to explicitly unmount floppies with umount(8) before taking them out of the drive. Forgetting this step can make the floppy unusable! If you are in the habit of forgetting, a simple low-tech yellow Post-it note in a strategic place beside your floppy drive might save you a few headaches. If your version of Post-it notes has the <BLINK> tag, use it! ;-)

Newer systems based on the 2.xx kernel are probably shipped with fdutils. Check to see if you have a /usr/doc/fdutils-xxx directory, where xxx is a version number. (Mine is 4.3). Also check for the superformat(1) man page. This supersedes fdformat(1) and gives you options for packing much more data on floppies. If you have an older system, check the ftp://ftp.imag.fr/pub/Linux/ZLIBC/fdutils/ ftp site for more information.

The naming convention for floppies in newer 2.xx kernels has also changed, although the fd(4) man page has not been updated in my distribution. If you do not have a /dev/fd0H1440 device, then you probably have the newer system.


muse:


Last month I introduced a new format to this column. The response was mixed, but generally positive. I'm still getting more comments on the format of the column than on the content. I don't know if this means I'm covering all the issues people want to hear about or people just aren't reading the column. Gads. I hope it's not the latter. This month's issue includes another book review, a discussion on adding fonts to your system, a Gimp user's story, and a review of the AC3D modeller. The holiday season is always a busy one for me. I would have liked to do a little more, but there just never seems to be enough time in the day.


Disclaimer: Before I get too far into this I should note that any of the news items I post in this section are just that: news. Either I happened to run across them via some mailing list I was on, via some Usenet newsgroup, or via email from someone. I'm not necessarily endorsing these products (some of which may be commercial); I'm just letting you know I'd heard about them in the past month.

I went wandering through a local computer book store this month and scanned the graphics texts section. I found a few new tidbits that might be of interest to some of you.


3D Graphics File Formats: A Programmer's Reference

Keith Rule has written a new book on 3D graphics file formats. The book, which contains over 500 pages, has been published by Addison-Wesley Developers Press and is listed at $39.95. It includes a CD-ROM with a software library for reading and writing various 3D file formats, but the code is written for MS systems. Keith states there isn't any reason why the code shouldn't be portable to other platforms such as Linux. Any takers out there?

ISBN 0-201-48835-3

OpenGL Programming for the X Window System

I noticed a new text on the shelf of a local book store (Softpro, in Englewood, Colorado) this past month: Mark J. Kilgard's OpenGL Programming for the X Window System. This book, from Addison Wesley Developers Press, appears to have very good coverage of how to write OpenGL applications that make use of X Windows APIs. I haven't read it yet (or even purchased it yet, but I will), so I can't say how good it is. Mark is the author of the GLUT toolkit for OpenGL. GLUT is to OpenGL what Xt or Motif is to Xlib. Well, sort of.


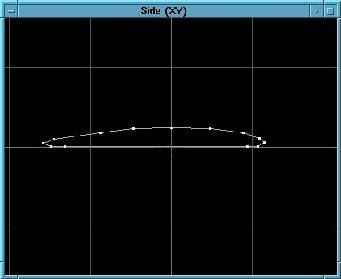

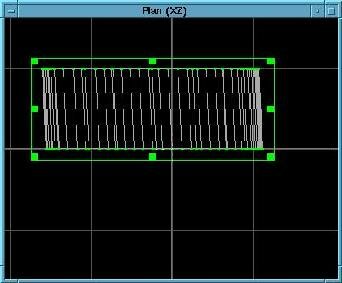

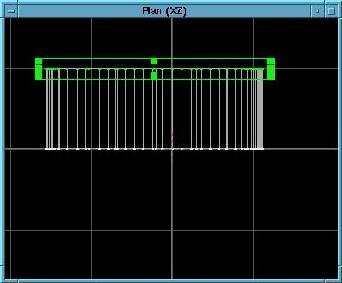

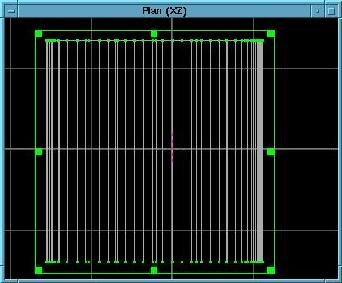

Fast Algorithms for 3D-Graphics

This book, by Georg Glaeser and published by Springer, includes a 3.5" diskette of source for Unix systems. The diskette, however, is DOS formatted. All the algorithms in the text are written in pseudocode, so readers can convert them to the language of their choice.